Testcontainers and Playwright

Discover how Testcontainers-Playwright simplifies browser automation and testing without local Playwright installations. Learn about its features, limitations, compatibility, and usage with code examples.

In today’s world of fast-paced development, setting up a consistent and efficient development environment for all team members is crucial for overall productivity. Although Docker itself is a powerful tool that enhances developer efficiency, configuring a local development environment still can be complex and time-consuming. This is where development containers (or dev containers) come into play.

Dev containers provide an all-encompassing solution, offering everything needed to start working on a feature and running the application seamlessly. In specific terms, dev containers are Docker containers (running locally or remotely) that encapsulate everything necessary for the software development of a given project, including integrated development environments (IDEs), specific software, tools, libraries, and preconfigured services.

This description of an isolated environment can be easily transferred and launched on any computer or cloud infrastructure, allowing developers and teams to abstract away the specifics of their operating systems. The dev container settings are defined in a devcontainer.json file, which is located within a given project, ensuring consistency across different environments.

However, development is only one part of a developer’s workflow. Another critical aspect is testing to ensure that code changes work as expected and do not introduce new issues. If you use Testcontainers for integration testing or rely on Testcontainers-based services to run your application locally, you must have Docker available from within your dev container.

In this post, we will show how you can run Testcontainers-based tests or services from within the dev container and how to leverage Testcontainers Cloud within a dev container securely and efficiently to make interacting with Docker even easier.

To get started with dev containers on your computer using this tutorial, you will need:

There’s no need to preconfigure your project to support development containers; the IDE will do it for you. We will, however, need some Testcontainers usage examples to run in the dev container, so let’s use the existing Java Local Development workshop repository. It contains the implementation of a Spring Boot-based microservice application for managing a catalog of products. The demo-state branch contains the implementation of Testcontainers-based integration tests and services for local development.

Although this project typically requires Java 21 and Maven installed locally, we will instead use dev containers to preconfigure all necessary tools and dependencies within the development container.

To begin, clone the project:

git clone https://github.com/testcontainers/java-local-development-workshop.git

Next, open the project in your local IntelliJ IDE and install the Dev Containers plugin (Figure 1).

Next, we will add a .devcontainer/devcontainer.json file with the requirements to the project. In the context menu of the project root, select New > .devcontainer (Figure 2).

We’ll need Java 21, so let’s use the Java Dev Container Template. Then, select Java version 21 and enable Install Maven (Figure 3).

Select OK, and you’ll see a newly generated devcontainer.json file. Let’s now tweak that a bit more.

Because Testcontainers requires access to Docker, we need to provide some access to Docker inside of the dev container. Let’s use an existing Development Container Feature to do this. Features enhance development capabilities within your dev container by providing self-contained units of specific container configuration including installation steps, environment variables, and other settings.

You can add the Docker-in-Docker feature to your devcontainer.json to install Docker into the dev container itself and thus have a Docker environment available for the Testcontainers tests.

Your devcontainer.json file should now look like the following:

    {
      "name": "Java Dev Container TCC Demo",
      "image": "mcr.microsoft.com/devcontainers/java:1-21-bullseye",
      "features": {
        "ghcr.io/devcontainers/features/java:1": {
          "version": "none",
          "installMaven": "true",
          "installGradle": "false"
        },
        "docker-in-docker": {
          "version": "latest",
          "moby": true,
          "dockerDashComposeVersion": "v1"
        }
      },
      "customizations": {
        "jetbrains": {
          "backend": "IntelliJ"
        }
      }
    }

Now you can run the container. Navigate to devcontainer.json and click on the Dev Containers plugin and select Create Dev Container and Clone Sources. The New Dev Container window will open (Figure 4).

In the New Dev Container window, you can select the Git branch and specify where to create your dev container. By default, it uses the local Docker instance, but you can select the ellipses (…) to add additional Docker servers from the cloud or WSL and configure the connection via SSH.

If the build process is successful, you will be able to select the desired IDE backend, which will be installed and launched within the container (Figure 5).

After you select Continue, a new IDE window will open, allowing you to code as usual. To view the details of the running dev container, execute docker ps in the terminal of your host (Figure 6).

If you run the TestApplication class, your application will start with all required dependencies managed by Testcontainers. (For more implementation details, refer to the “Local development environment with Testcontainers” step on GitHub.) You can see the services running in containers by executing docker ps in your IDE terminal (within the container). See Figure 7.
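As a quick sanity check from code, the following minimal sketch works out which Docker endpoint a process inside the dev container would most likely use. It relies only on two well-known Docker conventions: the DOCKER_HOST environment variable and the default Unix socket at /var/run/docker.sock. The class and method names are hypothetical, and Testcontainers performs its own, more thorough detection, so treat this as a diagnostic aid rather than a description of what the library does.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Map;

/** Sketch: guess which Docker endpoint is in effect inside the dev container. */
final class DockerEndpointCheck {

    // Default Unix socket of a local Docker daemon.
    static final String DEFAULT_DOCKER_HOST = "unix:///var/run/docker.sock";

    /** Returns DOCKER_HOST from the given environment if set and non-blank, otherwise the default socket. */
    static String resolveDockerHost(Map<String, String> env) {
        String configured = env.get("DOCKER_HOST");
        return (configured == null || configured.isBlank()) ? DEFAULT_DOCKER_HOST : configured.trim();
    }

    /** Returns true only for unix:// endpoints whose socket file exists; other schemes are not probed. */
    static boolean unixSocketExists(String dockerHost) {
        if (!dockerHost.startsWith("unix://")) {
            return false;
        }
        return Files.exists(Path.of(dockerHost.substring("unix://".length())));
    }

    public static void main(String[] args) {
        String host = resolveDockerHost(System.getenv());
        System.out.println("Docker endpoint: " + host + ", socket present: " + unixSocketExists(host));
    }
}
```

Running the main method in the dev container terminal gives a first hint of whether the Docker-in-Docker feature exposed a socket before you start any Testcontainers-based code.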

To reduce the load on local resources and enhance the observability of Testcontainers-based containers, let’s switch from the Docker-in-Docker feature to the Testcontainers Cloud (TCC) feature: ghcr.io/eddumelendez/test-devcontainer/tcc:0.0.2.

This feature will install and run the Testcontainers Cloud agent within the dev container, providing a remote Docker environment for your Testcontainers tests.

To enable this functionality, you’ll need to obtain a valid TC_CLOUD_TOKEN, which the Testcontainers Cloud agent will use to establish the connection. If you don’t already have a Testcontainers Cloud account, you can sign up for a free account. Once logged in, you can create a Service Account to generate the necessary token (Figure 8).

To use the token value, we’ll utilize an .env file. Create an environment file under .devcontainer/devcontainer.env and add your newly generated token value (Figure 9). Be sure to add devcontainer.env to .gitignore to prevent it from being pushed to the remote repository.

In your devcontainer.json file, include the following options:

- runArgs to specify that the container should use the .env file located at .devcontainer/devcontainer.env.
- containerEnv to set the environment variables TC_CLOUD_TOKEN and TCC_PROJECT_KEY within the container. The TC_CLOUD_TOKEN variable is dynamically set from the local environment variable.

The resulting devcontainer.json file will look like this:

    {
      "name": "Java Dev Container TCC Demo",
      "image": "mcr.microsoft.com/devcontainers/java:21",
      "runArgs": [
        "--env-file",
        ".devcontainer/devcontainer.env"
      ],
      "containerEnv": {
        "TC_CLOUD_TOKEN": "${localEnv:TC_CLOUD_TOKEN}",
        "TCC_PROJECT_KEY": "java-local-development-workshop"
      },
      "features": {
        "ghcr.io/devcontainers/features/java:1": {
          "version": "none",
          "installMaven": "true",
          "installGradle": "false"
        },
        "ghcr.io/eddumelendez/test-devcontainer/tcc:0.0.2": {}
      },
      "customizations": {
        "jetbrains": {
          "backend": "IntelliJ"
        }
      }
    }
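Before rebuilding, it can be useful to confirm that the env file really defines a token. The sketch below parses simple KEY=VALUE lines and checks for a non-blank TC_CLOUD_TOKEN. It assumes the basic env-file conventions (one assignment per line, lines starting with # are comments); the class name and the decision to skip lines without an equals sign are choices made for this illustration, not Docker's exact parsing rules.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Sketch: check that an env file defines a non-blank TC_CLOUD_TOKEN. */
final class EnvFileCheck {

    /** Parses KEY=VALUE lines, ignoring blank lines and # comments. */
    static Map<String, String> parse(List<String> lines) {
        Map<String, String> vars = new LinkedHashMap<>();
        for (String raw : lines) {
            String line = raw.strip();
            if (line.isEmpty() || line.startsWith("#")) {
                continue;
            }
            int eq = line.indexOf('=');
            if (eq <= 0) {
                continue; // lines without KEY=VALUE form are skipped in this sketch
            }
            vars.put(line.substring(0, eq), line.substring(eq + 1));
        }
        return vars;
    }

    /** Returns true if TC_CLOUD_TOKEN is present and not blank. */
    static boolean hasToken(Map<String, String> vars) {
        String token = vars.get("TC_CLOUD_TOKEN");
        return token != null && !token.isBlank();
    }
}
```

A caller could feed it the file contents with Files.readAllLines(Path.of(".devcontainer/devcontainer.env")) and stop early with a clear message if hasToken returns false.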

Let’s rebuild and start the dev container again. Navigate to devcontainer.json, select the Dev Containers plugin, then select Create Dev Container and Clone Sources, and follow the steps as in the previous example. Once the build process is finished, choose the necessary IDE backend, which will be installed and launched within the container.

To verify that the Testcontainers Cloud agent was successfully installed in your dev container, run the following in your dev container IDE terminal:

cat /usr/local/share/tcc-agent.log

You should see a log line similar to Listening address= if the agent started successfully (Figure 10).
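The same check can be scripted. This minimal sketch scans the agent log for the "Listening address=" marker mentioned above; the class and method names are hypothetical, and the log path is the one used in the cat command.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;

/** Sketch: detect the agent's startup marker in its log output. */
final class AgentLogCheck {

    // Marker text taken from the article; the rest of the log line is not assumed.
    static final String READY_MARKER = "Listening address=";

    /** Returns true if any line contains the startup marker. */
    static boolean agentStarted(List<String> logLines) {
        return logLines.stream().anyMatch(line -> line.contains(READY_MARKER));
    }

    /** Convenience overload that reads the log file first. */
    static boolean agentStarted(Path logFile) throws IOException {
        return agentStarted(Files.readAllLines(logFile));
    }
}
```

Inside the dev container, AgentLogCheck.agentStarted(Path.of("/usr/local/share/tcc-agent.log")) would report whether the marker has been written yet.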

Now you can run your tests. The ProductControllerTest class contains Testcontainers-based integration tests for our application. (For more implementation details, refer to the “Let’s write tests” step on GitHub.)

To view the containers running during the test cycle, navigate to the Testcontainers Cloud dashboard and check the latest session (Figure 11). You will see the name of the Service Account you created earlier in the Account line, and the Project name will correspond to the TCC_PROJECT_KEY defined in the containerEnv section. You can learn more about how to tag your session by project or workflow in the documentation.

If you want to run the application and debug containers, you can Connect to the cloud VM terminal and access the containers via the CLI (Figure 12).

In this article, we’ve explored the benefits of using dev containers to streamline your Testcontainers-based local development environment. Testcontainers Cloud enhances this setup further: it provides a secure, scalable way to run Testcontainers-based containers and addresses the potential security concerns and resource limitations of the Docker-in-Docker approach. This combination simplifies your workflow and improves productivity and consistency across your projects.

Running your dev containers in the cloud can further reduce the load on local resources and improve performance. Stay tuned for upcoming innovations from Docker that will enhance this capability even further.

Hugging Face now hosts more than 700,000 models, with the number continuously rising. It has become the premier repository for AI/ML models, catering to both general and highly specialized needs.

As the adoption of AI/ML models accelerates, more application developers are eager to integrate them into their projects. However, the entry barrier remains high due to the complexity of setup and lack of developer-friendly tools. Imagine if deploying an AI/ML model could be as straightforward as spinning up a database. Intrigued? Keep reading to find out how.

Recently, Ollama announced support for running models from Hugging Face. This development is exciting because it brings the rich ecosystem of AI/ML components from Hugging Face to Ollama end users, who are often developers.

Testcontainers libraries already provide an Ollama module, making it straightforward to spin up a container with Ollama without needing to know the details of how to run Ollama using Docker:

    import org.testcontainers.ollama.OllamaContainer;

    var ollama = new OllamaContainer("ollama/ollama:0.1.44");
    ollama.start();

These lines of code are all that is needed to have Ollama running inside a Docker container effortlessly.

By default, Ollama does not include any models, so you need to download the one you want to use. With Testcontainers, this step is straightforward by leveraging the execInContainer API provided by Testcontainers:

ollama.execInContainer("ollama", "pull", "moondream");

At this point, you have the moondream model ready to be used via the Ollama API.

Excited to try it out? Hold on for a bit. This model is running in a container, so what happens if the container dies? Will you need to spin up a new container and pull the model again? Ideally not, as these models can be quite large.

Thankfully, Testcontainers makes it easy to handle this scenario, by providing an easy-to-use API to commit a container image programmatically:

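    public void createImage(String imageName) {
        var ollama = new OllamaContainer("ollama/ollama:0.1.44");
        ollama.start();
        try {
            // Pull the model inside the running container, then snapshot the container as an image
            ollama.execInContainer("ollama", "pull", "moondream");
            ollama.commitToImage(imageName);
        } catch (IOException | InterruptedException e) {
            // execInContainer declares these checked exceptions
            throw new ContainerFetchException(e.getMessage());
        }
    }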

This code creates an image from the container with the model included. In subsequent runs, you can create a container from that image, and the model will already be present. Here’s the pattern:

    var imageName = "tc-ollama-moondream";
    var ollama = new OllamaContainer(DockerImageName.parse(imageName)
        .asCompatibleSubstituteFor("ollama/ollama:0.1.44"));
    try {
        ollama.start();
    } catch (ContainerFetchException ex) {
        // If image doesn't exist, create it. Subsequent runs will reuse the image.
        createImage(imageName);
        ollama.start();
    }

Now, you have a model ready to be used, and because it is running in Ollama, you can interact with its API:

    var image = getImageInBase64("/whale.jpeg");
    String response = given()
        .baseUri(ollama.getEndpoint())
        .header(new Header("Content-Type", "application/json"))
        .body(new CompletionRequest("moondream:latest", "Describe the image.", Collections.singletonList(image), false))
        .post("/api/generate")
        .getBody().as(CompletionResponse.class).response();

    System.out.println("Response from LLM " + response);
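The snippet above relies on a getImageInBase64 helper and on CompletionRequest and CompletionResponse types that are not shown. One possible shape for them is sketched here, using only the JDK. The record fields are assumptions that mirror the JSON keys of Ollama's /api/generate endpoint (model, prompt, images, stream, response), and the enclosing class name is hypothetical. A real response carries more fields than response, so the JSON mapper in use would need to be configured to ignore unknown properties.

```java
import java.io.IOException;
import java.io.InputStream;
import java.util.Base64;
import java.util.List;

/** Sketch of the supporting types assumed by the request example. */
final class OllamaRequestSupport {

    // Assumed request/response shapes; names follow the article, fields follow the endpoint's JSON keys.
    record CompletionRequest(String model, String prompt, List<String> images, boolean stream) {}

    record CompletionResponse(String response) {}

    /** Encodes raw bytes as standard Base64 text. */
    static String toBase64(byte[] bytes) {
        return Base64.getEncoder().encodeToString(bytes);
    }

    /** Loads a classpath resource such as "/whale.jpeg" and returns it Base64-encoded. */
    static String getImageInBase64(String resourcePath) throws IOException {
        try (InputStream in = OllamaRequestSupport.class.getResourceAsStream(resourcePath)) {
            if (in == null) {
                throw new IOException("Resource not found on classpath: " + resourcePath);
            }
            return toBase64(in.readAllBytes());
        }
    }
}
```

Using records keeps the accessor style consistent with the response() call in the request example.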

The previous example demonstrated using a model already provided by Ollama. However, with the ability to use Hugging Face models in Ollama, your available model options have now expanded by thousands.

To use a model from Hugging Face in Ollama, you need a GGUF file for the model. Currently, there are 20,647 models available in GGUF format. How cool is that?

The steps to run a Hugging Face model in Ollama are straightforward, but we’ve simplified the process further by scripting it into a custom OllamaHuggingFaceContainer. Note that this custom container is not part of the default library, so you can copy and paste the implementation of OllamaHuggingFaceContainer and customize it to suit your needs.

To run a Hugging Face model, do the following:

    public void createImage(String imageName, String repository, String model) {
        // Describe which GGUF file to fetch from which Hugging Face repository
        var hfModel = new OllamaHuggingFaceContainer.HuggingFaceModel(repository, model);
        var huggingFaceContainer = new OllamaHuggingFaceContainer(hfModel);
        huggingFaceContainer.start();
        huggingFaceContainer.commitToImage(imageName);
    }

By providing the repository name and the model file as shown, you can run Hugging Face models in Ollama via Testcontainers.

You can find an example using an embedding model and an example using a chat model on GitHub.

One key strength of using Testcontainers is its flexibility in customizing container setups to fit specific project needs by encapsulating complex setups into manageable containers.

For example, you can create a custom container tailored to your requirements. Here’s an example of TinyLlama, a specialized container for spinning up the DavidAU/DistiLabelOrca-TinyLLama-1.1B-Q8_0-GGUF model from Hugging Face:

    import java.io.IOException;

    import org.testcontainers.containers.ContainerFetchException;
    import org.testcontainers.ollama.OllamaContainer;
    import org.testcontainers.utility.DockerImageName;

    public class TinyLlama extends OllamaContainer {

        private final String imageName;

        public TinyLlama(String imageName) {
            super(DockerImageName.parse(imageName)
                .asCompatibleSubstituteFor("ollama/ollama:0.1.44"));
            this.imageName = imageName;
        }

        public void createImage(String imageName) {
            var ollama = new OllamaContainer("ollama/ollama:0.1.44");
            ollama.start();
            try {
                // Install huggingface-cli inside the container
                ollama.execInContainer("apt-get", "update");
                ollama.execInContainer("apt-get", "upgrade", "-y");
                ollama.execInContainer("apt-get", "install", "-y", "python3-pip");
                ollama.execInContainer("pip", "install", "huggingface-hub");
                // Download the GGUF file from Hugging Face
                ollama.execInContainer(
                    "huggingface-cli",
                    "download",
                    "DavidAU/DistiLabelOrca-TinyLLama-1.1B-Q8_0-GGUF",
                    "distilabelorca-tinyllama-1.1b.Q8_0.gguf",
                    "--local-dir",
                    "."
                );
                // Register the model with Ollama via a Modelfile, then drop the downloaded file
                ollama.execInContainer(
                    "sh",
                    "-c",
                    String.format("echo '%s' > Modelfile", "FROM distilabelorca-tinyllama-1.1b.Q8_0.gguf")
                );
                ollama.execInContainer("ollama", "create", "distilabelorca-tinyllama-1.1b.Q8_0.gguf", "-f", "Modelfile");
                ollama.execInContainer("rm", "distilabelorca-tinyllama-1.1b.Q8_0.gguf");
                ollama.commitToImage(imageName);
            } catch (IOException | InterruptedException e) {
                throw new ContainerFetchException(e.getMessage());
            }
        }

        public String getModelName() {
            return "distilabelorca-tinyllama-1.1b.Q8_0.gguf";
        }

        @Override
        public void start() {
            try {
                super.start();
            } catch (ContainerFetchException ex) {
                // If image doesn't exist, create it. Subsequent runs will reuse the image.
                createImage(imageName);
                super.start();
            }
        }
    }

Once defined, you can easily instantiate and utilize your custom container in your application:

    var tinyLlama = new TinyLlama("example");
    tinyLlama.start();

    String response = given()
        .baseUri(tinyLlama.getEndpoint())
        .header(new Header("Content-Type", "application/json"))
        .body(new CompletionRequest(tinyLlama.getModelName() + ":latest", List.of(new Message("user", "What is the capital of France?")), false))
        .post("/api/chat")
        .getBody().as(ChatResponse.class).message.content;

    System.out.println("Response from LLM " + response);

Note how all the implementation details are under the cover of the TinyLlama class, and the end user doesn’t need to know how to actually install the model into Ollama, what GGUF is, or that to get huggingface-cli you need to pip install huggingface-hub.

This approach leverages the strengths of both Hugging Face and Ollama, supported by the automation and encapsulation provided by the Testcontainers module, making powerful AI tools more accessible and manageable for developers across different ecosystems.

Integrating AI models into applications need not be a daunting task. By leveraging Ollama and Testcontainers, developers can seamlessly incorporate Hugging Face models into their projects with minimal effort. This approach not only simplifies development environment setup but also ensures reproducibility and ease of use. With the ability to programmatically manage models and containerize them for consistent environments, developers can focus on building innovative solutions without getting bogged down by complex setup procedures.

The combination of Ollama’s support for Hugging Face models and Testcontainers’ robust container management capabilities provides a powerful toolkit for modern AI development. As AI continues to evolve and expand, these tools will play a crucial role in making advanced models accessible and manageable for developers across various fields. So, dive in, experiment with different models, and unlock the potential of AI in your applications today.

Developing Kubernetes operators in Java is not yet the norm. So far, Go has been the language of choice here, not least because of its excellent support for writing corresponding tests.

One challenge in developing Java-based projects has been the lack of easy automated integration testing that interacts with a Kubernetes API server. However, thanks to the open source library Kindcontainer, based on the widely used Testcontainers integration test library, this gap can be bridged, enabling easier development of Java-based Kubernetes projects.

In this article, we’ll show how to use Testcontainers to test custom Kubernetes controllers and operators implemented in Java.

Testcontainers allows starting arbitrary infrastructure components and processes running in Docker containers from tests running within a Java virtual machine (JVM). The framework takes care of binding the lifecycle and cleanup of Docker containers to the test execution. Even if the JVM is terminated abruptly during debugging, for example, it ensures that the started Docker containers are also stopped and removed. In addition to a generic class for any Docker image, Testcontainers offers specialized implementations in the form of subclasses — for components with sophisticated configuration options, for example.

These specialized implementations can also be provided by third-party libraries. The open source project Kindcontainer is one such third-party library that provides specialized implementations for various Kubernetes containers based on Testcontainers:

- ApiServerContainer
- K3sContainer
- KindContainer

Although ApiServerContainer focuses on providing only a small part of the Kubernetes control plane, namely the Kubernetes API server, K3sContainer and KindContainer launch complete single-node Kubernetes clusters in Docker containers.

This allows for a trade-off depending on the requirements of the respective tests: If only interaction with the API server is necessary for testing, then the significantly faster-starting ApiServerContainer is usually sufficient. However, if testing complex interactions with other components of the Kubernetes control plane or even other operators is in the scope, then the two “larger” implementations provide the necessary tools for that — albeit at the expense of startup time. For perspective, depending on the hardware configuration, startup times can reach a minute or more.

To illustrate how straightforward testing against a Kubernetes container can be, let’s look at an example using JUnit 5:

    @Testcontainers
    public class SomeApiServerTest {
        @Container
        public ApiServerContainer<?> K8S = new ApiServerContainer<>();

        @Test
        public void verify_no_node_is_present() {
            Config kubeconfig = Config.fromKubeconfig(K8S.getKubeconfig());
            try (KubernetesClient client = new KubernetesClientBuilder()
                    .withConfig(kubeconfig).build()) {
                // Verify that ApiServerContainer has no nodes
                assertTrue(client.nodes().list().getItems().isEmpty());
            }
        }
    }

Thanks to the @Testcontainers JUnit 5 extension, lifecycle management of the ApiServerContainer is easily handled by marking the container that should be managed with the @Container annotation. Once the container is started, a YAML document containing the necessary details to establish a connection with the API server can be retrieved via the getKubeconfig() method.

This YAML document represents the standard way of presenting connection information in the Kubernetes world. The fabric8 Kubernetes client used in the example can be configured using Config.fromKubeconfig(). Any other Kubernetes client library will offer similar interfaces. Kindcontainer does not impose any specific requirements in this regard.

All three container implementations rely on a common API. Therefore, if it becomes clear at a later stage of development that one of the heavier implementations is necessary for a test, you can simply switch to it without any further code changes — the already implemented test code can remain unchanged.

In many situations, after the Kubernetes container has started, a lot of preparatory work needs to be done before the actual test case can begin. For an operator, for example, the API server must first be made aware of a Custom Resource Definition (CRD), or another controller must be installed via a Helm chart. What may sound complicated at first is made simple by Kindcontainer along with intuitively usable Fluent APIs for the command-line tools kubectl and helm.

The following listing shows how a CRD is first applied from the test’s classpath using kubectl, followed by the installation of a Helm chart:

    @Testcontainers
    public class FluentApiTest {
        @Container
        public static final K3sContainer<?> K3S = new K3sContainer<>()
            .withKubectl(kubectl -> {
                kubectl.apply.fileFromClasspath("manifests/mycrd.yaml").run();
            })
            .withHelm3(helm -> {
                helm.repo.add.run("repo", "https://repo.example.com");
                helm.repo.update.run();
                helm.install.run("release", "repo/chart");
            });

        // Tests go here
    }

Kindcontainer ensures that all commands are executed before the first test starts. If there are dependencies between the commands, they can be easily resolved; Kindcontainer guarantees that they are executed in the order they are specified.

The Fluent API is translated into calls to the respective command-line tools. These are executed in separate containers, which are automatically started with the necessary connection details and connected to the Kubernetes container via the Docker internal network. This approach avoids dependencies on the Kubernetes image and version conflicts regarding the available tooling within it.
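To make the idea of "fluent calls become command-line invocations, in order" concrete, here is a purely conceptual sketch. It is not Kindcontainer's implementation: the CommandRecorder class and its methods are hypothetical, and it only records the argument lists that the standard kubectl and helm CLIs would receive, in the order the fluent calls were made.

```java
import java.util.ArrayList;
import java.util.List;

/** Conceptual sketch: a fluent API that records CLI invocations in call order. */
final class CommandRecorder {

    private final List<List<String>> commands = new ArrayList<>();

    CommandRecorder kubectlApply(String manifestPath) {
        commands.add(List.of("kubectl", "apply", "-f", manifestPath));
        return this;
    }

    CommandRecorder helmRepoAdd(String name, String url) {
        commands.add(List.of("helm", "repo", "add", name, url));
        return this;
    }

    CommandRecorder helmRepoUpdate() {
        commands.add(List.of("helm", "repo", "update"));
        return this;
    }

    CommandRecorder helmInstall(String release, String chart) {
        commands.add(List.of("helm", "install", release, chart));
        return this;
    }

    /** Returns an immutable snapshot of the recorded commands, first call first. */
    List<List<String>> commands() {
        return List.copyOf(commands);
    }
}
```

Because each call appends to a list, a later command such as helm install can safely depend on an earlier one such as helm repo add, which matches the ordering guarantee described above.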

If the developer specifies nothing else, Kindcontainer starts the latest supported Kubernetes version by default. Relying on this default is generally discouraged; the best practice is to explicitly specify one of the supported versions when creating the container, as shown:

    @Testcontainers
    public class SpecificVersionTest {
        @Container
        KindContainer<?> container = new KindContainer<>(KindContainerVersion.VERSION_1_24_1);

        // Tests go here
    }

Each of the three container implementations has its own Enum, through which one of the supported Kubernetes versions can be selected. The test suite of the Kindcontainer project itself ensures — with the help of an elaborate matrix-based integration test setup — that the full feature set can be easily utilized for each of these versions. This elaborate testing process is necessary because the Kubernetes ecosystem evolves rapidly, and different initialization steps need to be performed depending on the Kubernetes version.

Generally, the project places great emphasis on supporting all currently maintained Kubernetes minor versions, which are released roughly every four months. Older Kubernetes versions are marked as @Deprecated and eventually removed when supporting them in Kindcontainer becomes too burdensome. However, this should only happen at a time when using the respective Kubernetes version is no longer recommended.

Accessing Docker images from public sources is often not straightforward, especially in corporate environments that rely on an internal Docker registry with manual or automated auditing. Kindcontainer allows developers to specify their own coordinates for the Docker images used for this purpose. However, because Kindcontainer still needs to know which Kubernetes version is being used due to potentially different initialization steps, these custom coordinates are appended to the respective Enum value:

    @Testcontainers
    public class CustomKubernetesImageTest {
        @Container
        KindContainer<?> container = new KindContainer<>(KindContainerVersion.VERSION_1_24_1
            .withImage("my-registry/kind:1.24.1"));

        // Tests go here
    }

In addition to the Kubernetes images themselves, Kindcontainer also uses several other Docker images. As already explained, command-line tools such as kubectl and helm are executed in their own containers. Appropriately, the Docker images required for these tools are configurable as well. Fortunately, no version-dependent code paths are needed for their execution.

Therefore, the configuration shown in the following is simpler than in the case of the Kubernetes image:

    @Testcontainers
    public class CustomFluentApiImageTest {
        @Container
        KindContainer<?> container = new KindContainer<>()
            .withKubectlImage(
                DockerImageName
                    .parse("my-registry/kubectl:1.21.9-debian-10-r10"))
            .withHelm3Image(DockerImageName.parse("my-registry/helm:3.7.2"));

        // Tests go here
    }

The coordinates of the images for all other containers started can also be easily chosen manually. However, it is always the developer’s responsibility to ensure the use of the same or at least compatible images. For this purpose, a complete list of the Docker images used and their versions can be found in the documentation of Kindcontainer on GitHub.

For the test scenarios shown so far, the communication direction is clear: A Kubernetes client running in the JVM accesses the locally or remotely running Kubernetes container over the network to communicate with the API server running inside it. Docker makes this standard case incredibly straightforward: A port is opened on the Docker container for the API server, making it accessible.

Kindcontainer automatically performs the necessary configuration steps for this process and provides suitable connection information as Kubeconfig for the respective network configuration.

However, admission controller webhooks present a technically more challenging testing scenario. For these, the API server must be able to communicate with external webhooks via HTTPS when processing manifests. In our case, these webhooks typically run in the JVM where the test logic is executed. However, they may not be easily accessible from the Docker container.

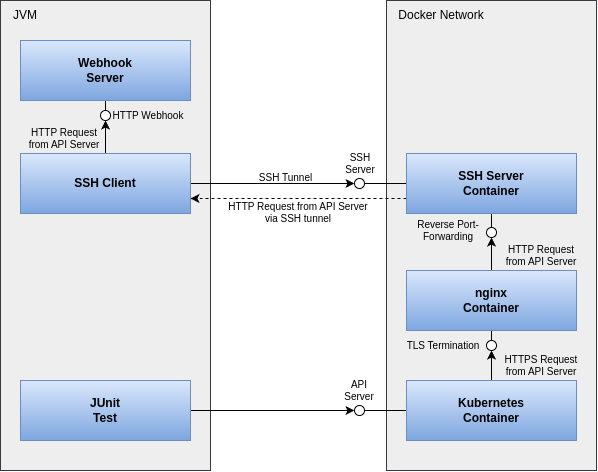

To facilitate testing of these webhooks independently of the network setup, yet still make it simple, Kindcontainer employs a trick. In addition to the Kubernetes container itself, two more containers are started. An SSH server provides the ability to establish a tunnel from the test JVM into the Kubernetes container and set up reverse port forwarding, allowing the API server to communicate back to the JVM.

Because Kubernetes requires TLS-secured communication with webhooks, an Nginx container is also started to handle TLS termination for the webhooks. Kindcontainer manages the administration of the required certificate material for this.

The entire setup of processes, containers, and their network communication is illustrated in Figure 1.

Fortunately, Kindcontainer hides this complexity behind an easy-to-use API:

    @Testcontainers
    public class WebhookTest {
        @Container
        ApiServerContainer<?> container = new ApiServerContainer<>()
            .withAdmissionController(admission -> {
                admission.mutating()
                    .withNewWebhook("mutating.example.com")
                        .atPort(webhookPort) // Local port of webhook
                        .withNewRule()
                            .withApiGroups("")
                            .withApiVersions("v1")
                            .withOperations("CREATE", "UPDATE")
                            .withResources("configmaps")
                            .withScope("Namespaced")
                        .endRule()
                    .endWebhook()
                    .build();
            });

        // Tests go here
    }

The developer only needs to provide the port of the locally running webhook along with some necessary information for setting up in Kubernetes. Kindcontainer then automatically handles the configuration of SSH tunneling, TLS termination, and Kubernetes.
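For orientation, a locally running webhook could be as small as the following sketch, which uses the JDK's built-in HTTP server and answers every request with an AdmissionReview response that allows it without changes. The class name is hypothetical and the UID extraction is deliberately naive (a regular expression instead of a JSON library). Serving plain HTTP is an assumption based on the description above that TLS is terminated by the Nginx container; a production webhook would also validate the request and, for a mutating webhook, usually return a patch.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Sketch: a webhook that allows every admission request unchanged. */
final class AllowAllWebhook {

    // Naive extraction of the first "uid" value; a real webhook would parse the JSON properly.
    private static final Pattern UID = Pattern.compile("\"uid\"\\s*:\\s*\"([^\"]+)\"");

    /** Builds an AdmissionReview response echoing the request UID with allowed=true. */
    static String allowResponse(String requestBody) {
        Matcher m = UID.matcher(requestBody);
        String uid = m.find() ? m.group(1) : "";
        return "{\"apiVersion\":\"admission.k8s.io/v1\",\"kind\":\"AdmissionReview\","
                + "\"response\":{\"uid\":\"" + uid + "\",\"allowed\":true}}";
    }

    /** Starts the server on the given port; passing 0 lets the OS pick a free port. */
    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/", exchange -> {
            String body = new String(exchange.getRequestBody().readAllBytes(), StandardCharsets.UTF_8);
            byte[] reply = allowResponse(body).getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().add("Content-Type", "application/json");
            exchange.sendResponseHeaders(200, reply.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(reply);
            }
        });
        server.start();
        return server;
    }
}
```

A test could call AllowAllWebhook.start(0), read the chosen port with server.getAddress().getPort(), and pass that value as webhookPort to atPort(...).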

Starting from the simple example of a minimal JUnit test, we have shown how to test custom Kubernetes controllers and operators implemented in Java. We have explained how to use familiar command-line tools from the ecosystem with the help of Fluent APIs and how to easily execute integration tests even in restricted network environments. Finally, we have shown how even the technically challenging use case of testing admission controller webhooks can be implemented simply and conveniently with Kindcontainer.

Thanks to these new testing possibilities, we hope more developers will consider Java as the language of choice for their Kubernetes-related projects in the future.

In the vast universe of programming, the era of generative artificial intelligence (GenAI) has marked a turning point, opening up a plethora of possibilities for developers.

Tools such as LangChain4j and Spring AI have democratized access to the creation of GenAI applications in Java, allowing Java developers to dive into this fascinating world. With LangChain4j, for instance, setting up and interacting with large language models (LLMs) has become exceptionally straightforward. Consider the following Java code snippet:

    public static void main(String[] args) {
        var llm = OpenAiChatModel.builder()
            .apiKey("demo")
            .modelName("gpt-3.5-turbo")
            .build();
        System.out.println(llm.generate("Hello, how are you?"));
    }

This example illustrates how a developer can quickly instantiate an LLM within a Java application. By simply configuring the model with an API key and specifying the model name, developers can begin generating text responses immediately. This accessibility is pivotal for fostering innovation and exploration within the Java community. More than that, we have a wide range of models that can be run locally, and various vector databases for storing embeddings and performing semantic searches, among other technological marvels.

Despite this progress, however, we are faced with a persistent challenge: the difficulty of testing applications that incorporate artificial intelligence. This aspect seems to be a field where there is still much to explore and develop.

In this article, I will share a methodology that I find promising for testing GenAI applications.

The example project focuses on an application that provides an API for interacting with two AI agents capable of answering questions.

An AI agent is a software entity designed to perform tasks autonomously, using artificial intelligence to simulate human-like interactions and responses.

In this project, one agent uses direct knowledge already contained within the LLM, while the other leverages internal documentation to enrich the LLM through retrieval-augmented generation (RAG). This approach allows the agents to provide precise and contextually relevant answers based on the input they receive.

I prefer to omit the technical details about RAG, as ample information is available elsewhere. I’ll simply note that this example employs a particular variant of RAG, which simplifies the traditional process of generating and storing embeddings for information retrieval.

Instead of dividing documents into chunks and making embeddings of those chunks, in this project, we will use an LLM to generate a summary of the documents. The embedding is generated based on that summary.

When the user writes a question, an embedding of the question will be generated and a semantic search will be performed against the embeddings of the summaries. If a match is found, the user’s message will be augmented with the original document.

This way, there’s no need to deal with the configuration of document chunks, worry about setting the number of chunks to retrieve, or worry about whether the way of augmenting the user’s message makes sense. If there is a document that talks about what the user is asking, it will be included in the message sent to the LLM.
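The retrieval flow described above can be sketched in plain Java. This is only an illustration, not the project's code: the `SummaryIndex` class, its `minScore` threshold, the wording of the augmented message, and the hand-written two-dimensional vectors standing in for real embeddings are all assumptions made for the example. It also assumes embeddings are normalized to unit length, so a dot product equals the cosine similarity. In a real application, an embedding model and a vector database would fill these roles.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

/** Hypothetical in-memory index: one embedding per document summary, pointing back to the full document. */
public class SummaryIndex {

    // Pairs the embedding of an LLM-generated summary with the original, unchunked document.
    record Entry(float[] summaryEmbedding, String originalDocument) {}

    private final List<Entry> entries = new ArrayList<>();
    private final double minScore; // assumed similarity threshold for counting as a match

    public SummaryIndex(double minScore) {
        this.minScore = minScore;
    }

    public void add(float[] summaryEmbedding, String originalDocument) {
        entries.add(new Entry(summaryEmbedding, originalDocument));
    }

    /** Returns the document whose summary best matches the question, if it reaches the threshold. */
    public Optional<String> findDocument(float[] questionEmbedding) {
        Entry best = null;
        double bestScore = minScore;
        for (Entry entry : entries) {
            double score = dot(questionEmbedding, entry.summaryEmbedding());
            if (score >= bestScore) {
                best = entry;
                bestScore = score;
            }
        }
        return Optional.ofNullable(best).map(Entry::originalDocument);
    }

    /** Appends the whole matching document to the user's message, or returns the message unchanged. */
    public String augment(String question, float[] questionEmbedding) {
        return findDocument(questionEmbedding)
                .map(doc -> question + "\n\nUse the following document to answer:\n" + doc)
                .orElse(question);
    }

    // Dot product; equals cosine similarity only under the unit-length assumption stated above.
    private static double dot(float[] a, float[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            sum += (double) a[i] * b[i];
        }
        return sum;
    }
}
```

Because the index stores whole documents rather than chunks, the only tuning knob left in this sketch is the match threshold.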

The project is developed in Java and utilizes a Spring Boot application with Testcontainers and LangChain4j.

For setting up the project, I followed the steps outlined in “Local Development Environment with Testcontainers” and “Spring Boot Application Testing and Development with Testcontainers.”

I also use Testcontainers Desktop to facilitate database access, verify the generated embeddings, and review the container logs.

The real challenge arises when trying to test the responses generated by language models. Traditionally, we could settle for verifying that the response includes certain keywords, which is insufficient and prone to errors.

    static String question = "How I can install Testcontainers Desktop?";

    @Test
    void verifyRaggedAgentSucceedToAnswerHowToInstallTCD() {
        String answer = restTemplate.getForObject("/chat/rag?question={question}", ChatController.ChatResponse.class, question).message();

        assertThat(answer).contains("https://testcontainers.com/desktop/");
    }

This approach is not only fragile but also lacks the ability to assess the relevance or coherence of the response.

An alternative is to employ cosine similarity to compare the embeddings of a “reference” response and the actual response, providing a more semantic form of evaluation.

This method measures the similarity between two vectors/embeddings by calculating the cosine of the angle between them. If both vectors point in the same direction, it means the “reference” response is semantically the same as the actual response.

    static String question = "How I can install Testcontainers Desktop?";

    static String reference = """
            - Answer must indicate to download Testcontainers Desktop from https://testcontainers.com/desktop/
            - Answer must indicate to use brew to install Testcontainers Desktop in MacOS
            - Answer must be less than 5 sentences
            """;

    @Test
    void verifyRaggedAgentSucceedToAnswerHowToInstallTCD() {
        String answer = restTemplate.getForObject("/chat/rag?question={question}", ChatController.ChatResponse.class, question).message();

        double cosineSimilarity = getCosineSimilarity(reference, answer);

        assertThat(cosineSimilarity).isGreaterThan(0.8);
    }
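The test relies on a `getCosineSimilarity` helper that is not shown here. A minimal sketch of the underlying arithmetic follows, assuming both texts have already been turned into equal-length `float[]` embeddings by an embedding model. The `CosineSimilarity` class name and the sample vectors are illustrative and not taken from the project.

```java
public final class CosineSimilarity {

    private CosineSimilarity() {}

    /**
     * cos(theta) = (a . b) / (|a| * |b|)
     * Close to 1.0 means the same direction, 0.0 orthogonal, -1.0 opposite.
     */
    public static double between(float[] a, float[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Embeddings must have the same dimension");
        }
        double dot = 0.0;
        double normA = 0.0;
        double normB = 0.0;
        for (int i = 0; i < a.length; i++) {
            dot += (double) a[i] * b[i];
            normA += (double) a[i] * a[i];
            normB += (double) b[i] * b[i];
        }
        if (normA == 0.0 || normB == 0.0) {
            throw new IllegalArgumentException("A zero vector has no direction");
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    public static void main(String[] args) {
        float[] reference = {1.0f, 2.0f, 3.0f};
        float[] sameDirection = {2.0f, 4.0f, 6.0f}; // scaled copy of reference
        float[] orthogonal = {3.0f, 0.0f, -1.0f};   // dot product with reference is 0

        System.out.println(between(reference, sameDirection));
        System.out.println(between(reference, orthogonal));
    }
}
```

Note that the score depends only on direction, not on vector length, and that real embeddings of unrelated texts rarely score near zero, which is part of why a cutoff such as 0.8 is hard to justify.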

However, this method introduces the problem of selecting an appropriate threshold to determine the acceptability of the response, in addition to the opacity of the evaluation process.

The real problem here arises from the fact that answers provided by the LLM are in natural language and non-deterministic. Because of this, using current testing methods to verify them is difficult, as these methods are better suited to testing predictable values.

However, we already have a great tool for understanding non-deterministic answers in natural language: LLMs themselves. Thus, the key may lie in using one LLM to evaluate the adequacy of responses generated by another LLM.

This proposal involves defining detailed validation criteria and using an LLM as a “Validator Agent” to determine if the responses meet the specified requirements. This approach can be applied to validate answers to specific questions, drawing on both general knowledge and specialized information.

By incorporating detailed instructions and examples, the Validator Agent can provide accurate and justified evaluations, offering clarity on why a response is considered correct or incorrect.

    static String question = "How I can install Testcontainers Desktop?";

    static String reference = """
            - Answer must indicate to download Testcontainers Desktop from https://testcontainers.com/desktop/
            - Answer must indicate to use brew to install Testcontainers Desktop in MacOS
            - Answer must be less than 5 sentences
            """;

    @Test
    void verifyStraightAgentFailsToAnswerHowToInstallTCD() {
        String answer = restTemplate.getForObject("/chat/straight?question={question}", ChatController.ChatResponse.class, question).message();

        ValidatorAgent.ValidatorResponse validate = validatorAgent.validate(question, answer, reference);

        assertThat(validate.response()).isEqualTo("no");
    }

    @Test
    void verifyRaggedAgentSucceedToAnswerHowToInstallTCD() {
        String answer = restTemplate.getForObject("/chat/rag?question={question}", ChatController.ChatResponse.class, question).message();

        ValidatorAgent.ValidatorResponse validate = validatorAgent.validate(question, answer, reference);

        assertThat(validate.response()).isEqualTo("yes");
    }

We can even test more complex responses where the LLM should suggest a better alternative to the user’s question.

    static String question = "How I can find the random port of a Testcontainer to connect to it?";

    static String reference = """
            - Answer must not mention using getMappedPort() method to find the random port of a Testcontainer
            - Answer must mention that you don't need to find the random port of a Testcontainer to connect to it
            - Answer must indicate that you can use the Testcontainers Desktop app to configure fixed port
            - Answer must be less than 5 sentences
            """;

    @Test
    void verifyRaggedAgentSucceedToAnswerHowToDebugWithTCD() {
        String answer = restTemplate.getForObject("/chat/rag?question={question}", ChatController.ChatResponse.class, question).message();

        ValidatorAgent.ValidatorResponse validate = validatorAgent.validate(question, answer, reference);

        assertThat(validate.response()).isEqualTo("yes");
    }

The configuration for the Validator Agent doesn’t differ from that of other agents. It is built using the LangChain4j AI Service and a list of specific instructions:

    import dev.langchain4j.service.SystemMessage;
    import dev.langchain4j.service.UserMessage;
    import dev.langchain4j.service.V;

    public interface ValidatorAgent {

        // System prompt: the validation rules, the expected JSON shape, and one worked example
        @SystemMessage("""
                ### Instructions
                You are a strict validator.
                You will be provided with a question, an answer, and a reference.
                Your task is to validate whether the answer is correct for the given question, based on the reference.

                Follow these instructions:
                - Respond only 'yes', 'no' or 'unsure' and always include the reason for your response
                - Respond with 'yes' if the answer is correct
                - Respond with 'no' if the answer is incorrect
                - If you are unsure, simply respond with 'unsure'
                - Respond with 'no' if the answer is not clear or concise
                - Respond with 'no' if the answer is not based on the reference

                Your response must be a json object with the following structure:
                {
                    "response": "yes",
                    "reason": "The answer is correct because it is based on the reference provided."
                }

                ### Example
                Question: Is Madrid the capital of Spain?
                Answer: No, it's Barcelona.
                Reference: The capital of Spain is Madrid
                ###
                Response: {
                    "response": "no",
                    "reason": "The answer is incorrect because the reference states that the capital of Spain is Madrid."
                }
                """)
        // User prompt: the {{...}} placeholders are filled from the @V-annotated parameters
        @UserMessage("""
                ###
                Question: {{question}}
                ###
                Answer: {{answer}}
                ###
                Reference: {{reference}}
                ###
                """)
        ValidatorResponse validate(@V("question") String question, @V("answer") String answer, @V("reference") String reference);

        // The JSON returned by the LLM is mapped onto this record
        record ValidatorResponse(String response, String reason) {}
    }

As you can see, I’m using Few-Shot Prompting to guide the LLM on the expected responses. I also request a JSON format for responses to facilitate parsing them into objects, and I specify that the reason for the answer must be included, to better understand the basis of its verdict.

The evolution of GenAI applications brings with it the challenge of developing testing methods that can effectively evaluate the complexity and subtlety of responses generated by advanced artificial intelligences.

The proposal to use an LLM as a Validator Agent represents a promising approach, paving the way towards a new era of software development and evaluation in the field of artificial intelligence. Over time, we hope to see more innovations that allow us to overcome the current challenges and maximize the potential of these transformative technologies.

Many engineering teams follow a similar process when developing Docker images. This process encompasses activities such as development, testing, and building, and the images are then released as the subsequent version of the application. During each of these distinct stages, a significant quantity of data can be gathered, offering valuable insights into the impact of code modifications and providing a clearer understanding of the stability and maturity of the product features.

Observability has made a huge leap in recent years, and advanced technologies such as OpenTelemetry (OTEL) and eBPF have simplified the process of collecting runtime data for applications. Yet, for all that progress, developers may still be on the fence about whether and how to use this new resource. The effort required to transform the raw data into something meaningful and beneficial for the development process may seem to outweigh the advantages offered by these technologies.

The practice of continuous feedback envisions a development flow in which this particular problem has been solved. By making the evaluation of code runtime data continuous, developers can benefit from shorter feedback loops and tighter control of their codebase. Instead of waiting for issues to develop in production systems, or even surface in testing, various developer tools can watch the code observability data for you and provide early warning and rigorous linting for regressions, code smells, or issues.

At the same time, gathering information back into the code from multiple deployment environments, such as the test or production environment, can help developers understand how a specific function, query, or event is performing in the real world at a single glance.

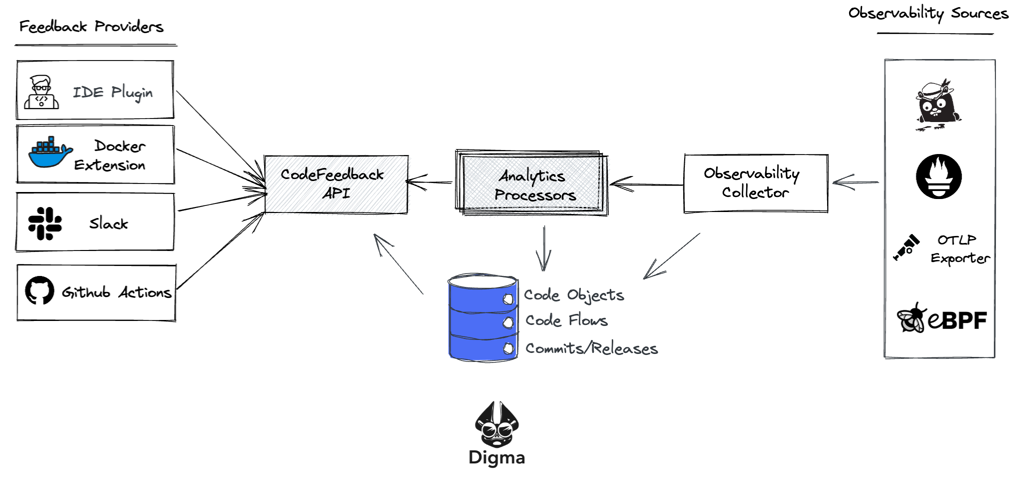

Digma is a free developer tool that was created to bridge the continuous feedback gap in the DevOps loop. It aims to make sense of the flood of metrics, traces, and logs that the code emits, so that developers won’t have to. And it does this continuously and automatically.

To make the tool even more practical, Digma processes the observability data and uses it to lint the source code itself, right in the IDE. From a developer’s perspective, this means a whole new level of information about the code is revealed, allowing for better code design based on real-world feedback, as well as a quick turnaround for fixing regressions or avoiding common pitfalls and anti-patterns.

Digma is packaged as a self-contained Docker extension to make it easy for developers to evaluate their code without registration to an external service or sharing any data that might not be allowed by corporate policy (Figure 1). As such, Digma acts as your own intelligent agent for monitoring code execution, especially in development and testing. The backend component is able to track execution over time and highlight any inadvertent changes to behavior, performance, or error patterns that should be addressed.

To collect data about the code, behind the scenes Digma leverages OpenTelemetry, a widely used open standard for modern observability. To make getting started easier, Digma takes care of the configuration work for you, so from the developer’s point of view, getting started with continuous feedback is equivalent to flipping a switch.

Once the information is collected using OTEL, Digma runs it through what could be described as a reverse pipeline, aggregating the data, analyzing it and providing it via an API to multiple outlets including the IDE plugin, Jaeger, and the Docker extension dashboard that we’ll describe in this example.

Note: Currently, Digma supports Java applications running on IntelliJ IDEA; however, support for additional languages and platforms will be rolling out in the coming months.

Prerequisites: Docker Desktop 4.8 or later.

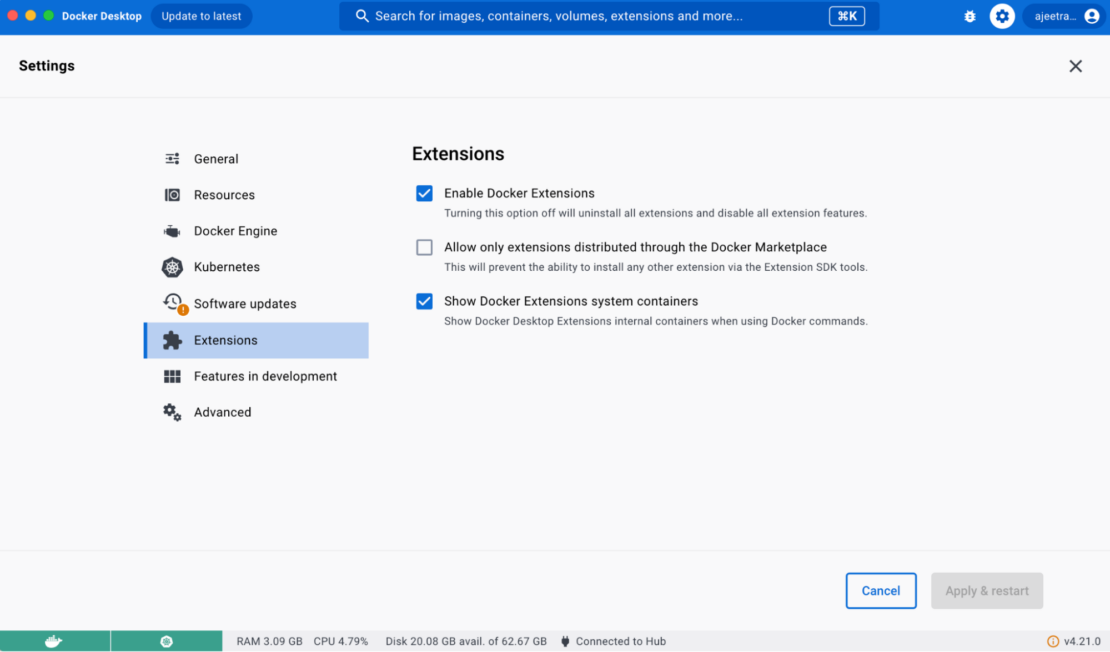

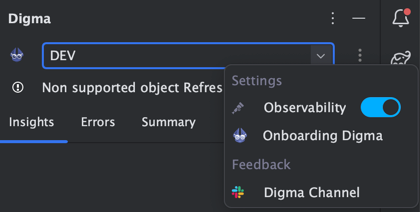

Note: You must ensure that the Docker Extension is enabled (Figure 2).

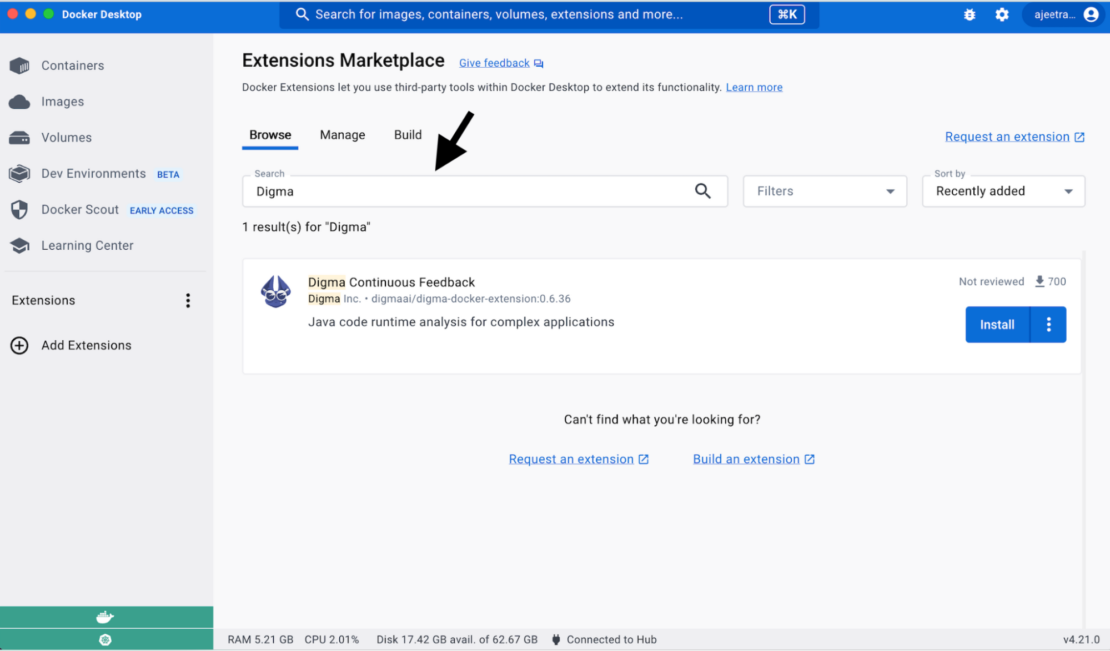

In the Extensions Marketplace, search for Digma and select Install (Figure 3).

After installing the Digma extension from the marketplace, you’ll be directed to a quickstart page that will guide you through the next steps to collect feedback about your code. Digma can collect information from two different sources: your IDE and any running container on your machine.

Digma’s IDE extension makes the process of collecting data about your code in the IDE trivial. The entire setup is reduced to a single toggle button click (Figure 4). Behind the scenes, Digma adds variables to the runtime configuration, so that it uses OpenTelemetry for observability. No prior knowledge of observability is needed, and no code changes are necessary to get this to work.

With the observability toggle enabled, you’ll start getting immediate feedback as you run your code locally, or even when you execute your tests. Digma will be on the lookout for any regressions and will automatically spot code smells and issues.

In addition to the IDE, you can also choose to collect data from any running container on your machine running Java code. The process to do that is extremely simple and also documented in the Digma extension Getting Started page. It does not involve changing any Dockerfile or code. Basically, you download the OTEL agent, mount it to a volume on the container, and set some environment variables that point the Java process to use it.

    # Download the OpenTelemetry Java agent and the Digma agent extension into ./otel
    curl --create-dirs -O -L --output-dir ./otel https://github.com/open-telemetry/opentelemetry-java-instrumentation/releases/latest/download/opentelemetry-javaagent.jar
    curl --create-dirs -O -L --output-dir ./otel https://github.com/digma-ai/otel-java-instrumentation/releases/latest/download/digma-otel-agent-extension.jar

    # Point the JVM at the downloaded agent and extension (mounted at /otel in the container)
    export JAVA_TOOL_OPTIONS="-javaagent:/otel/opentelemetry-javaagent.jar -Dotel.exporter.otlp.endpoint=http://localhost:5050 -Dotel.javaagent.extensions=/otel/digma-otel-agent-extension.jar"
    export OTEL_SERVICE_NAME={--ENTER YOUR SERVICE NAME HERE--}
    export DEPLOYMENT_ENV=LOCAL_DOCKER

    # Mount ./otel into the container and pass the variables through
    docker run -d -v "/$(pwd)/otel:/otel" --env JAVA_TOOL_OPTIONS --env OTEL_SERVICE_NAME --env DEPLOYMENT_ENV {-- APPEND PARAMS AND REPO/IMAGE --}

Whether you collect data from running containers or in the IDE, Digma will continuously analyze the code behavior and make the analysis results available both as code annotations in the IDE and as dashboards in the Digma extension itself. To demonstrate a live example, we’ve created a sample application based on the Java Spring Boot PetClinic app.

In this scenario, we’ll clone the repo and run the code from the IDE, triggering a few actions to see what they reveal about the code. For convenience, we’ve created run configurations to simulate common API calls and create interesting data:

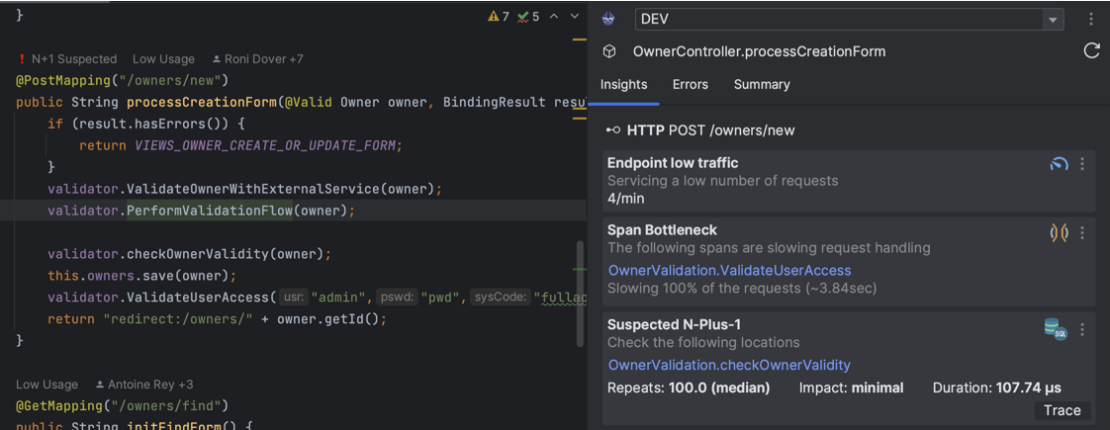

Almost immediately, the result of the dynamic linting analysis will start appearing over the code in the IDE (Figure 5):

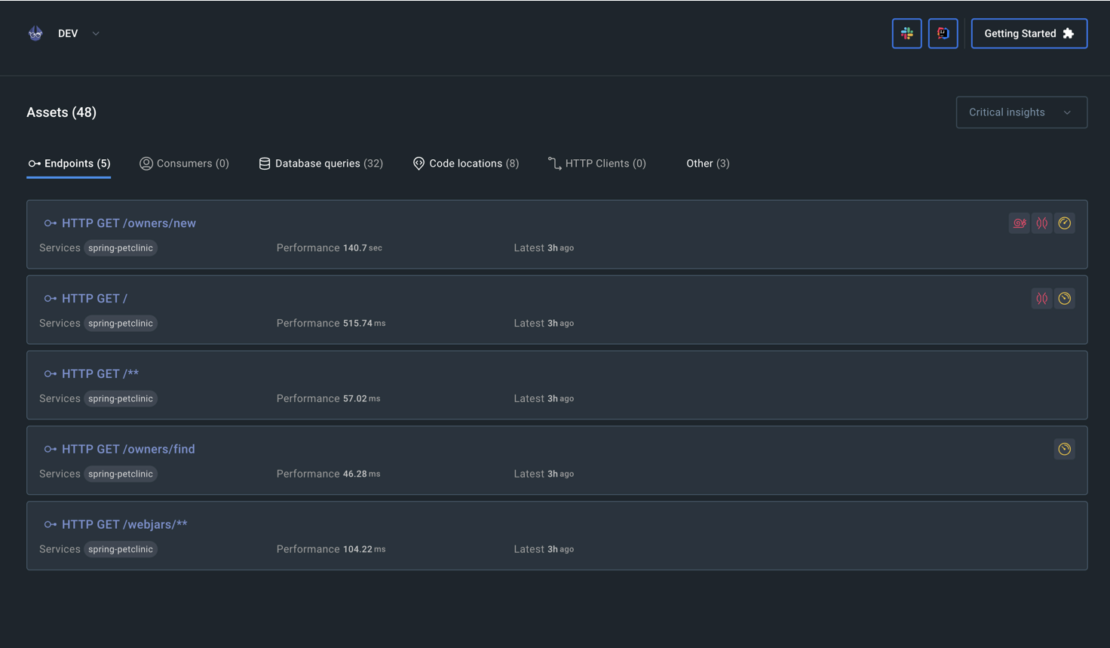

At the same time, the Digma extension itself will present a dashboard cataloging all of the assets that have been identified, including code locations, API endpoints, database queries, and more (Figure 6). For each asset, you’ll be able to access basic statistics about its performance as well as a more detailed list of runtime issues that may need to be tracked.

One of the main problems Digma tries to solve is not how to collect observability data but how to turn it into a useful and practical asset that can speed up development processes and improve the code. Digma’s insights can be directly applied during design time, based on existing data, as well as to validate changes as they are being run in dev/test/prod and to get early feedback and shorter loops into the development process.

Examples of design time planning code insights:

Examples of runtime code validations for shorter feedback loops:

As Digma evolves, it continues to track and provide clear visibility into specific areas that are important to developers with the goal of having complete clarity about how each piece of code is behaving in real-world scenarios and catching issues and regressions much earlier in the process.

Unlike many other observability solutions, Digma is code first and developer first. Digma is completely free for developers, does not require any code changes or sharing data, and will get you from zero to impactful data about your code within minutes. If you are working on Java code, use a JetBrains IDE, and want to improve your code with actual execution data, you can get started by picking up the Digma extension from the marketplace.

You can provide feedback in our Slack channel and tell us where Digma improved your dev cycle.