How to Build, Run, and Package AI Models Locally with Docker Model Runner

Introduction

As a Senior DevOps Engineer and Docker Captain, I’ve helped build AI systems for everything from retail personalization to medical imaging. One truth stands out: AI capabilities are core to modern infrastructure.

This guide will show you how to run and package local AI models with Docker Model Runner — a lightweight, developer-friendly tool for working with AI models pulled from Docker Hub or Hugging Face. You’ll learn how to run models in the CLI or via API, publish your own model artifacts, and do it all without setting up Python environments or web servers.

What is AI in Development?

Artificial Intelligence (AI) refers to systems that mimic human intelligence, including:

- Making decisions via machine learning

- Understanding language through NLP

- Recognizing images with computer vision

- Learning from new data automatically

Common Types of AI in Development:

- Machine Learning (ML): Learns from structured and unstructured data

- Deep Learning: Neural networks for pattern recognition

- Natural Language Processing (NLP): Understands/generates human language

- Computer Vision: Recognizes and interprets images

Why Package and Run Your Own AI Model?

Local model packaging and execution offer full control over your AI workflows. Instead of relying on external APIs, you can run models directly on your machine — unlocking:

- No network round-trips to a remote API; response time depends only on your local hardware

- Greater privacy by keeping data and prompts on your own hardware

- Customization through packaging and versioning your own models

- Seamless CI/CD integration with tools like Docker and GitHub Actions

- Offline capabilities for edge use cases or constrained environments

Platforms like Docker and Hugging Face make cutting-edge AI models instantly accessible without building from scratch. Running them locally means lower latency, better privacy, and faster iteration.

Real-World Use Cases for AI

- Chatbots & Virtual Assistants: Automate support (e.g., ChatGPT, Alexa)

- Generative AI: Create text, art, music (e.g., Midjourney, Lensa)

- Dev Tools: Autocomplete and debug code (e.g., GitHub Copilot)

- Retail Intelligence: Recommend products based on behavior

- Medical Imaging: Analyze scans for faster diagnosis

How to Package and Run AI Models Locally with Docker Model Runner

Prerequisites:

- Docker Desktop 4.40+ installed

- Experimental features and Model Runner enabled in Docker Desktop settings

- (Recommended) Windows 11 with NVIDIA GPU or Mac with Apple Silicon

- Internet access for downloading models from Docker Hub or Hugging Face

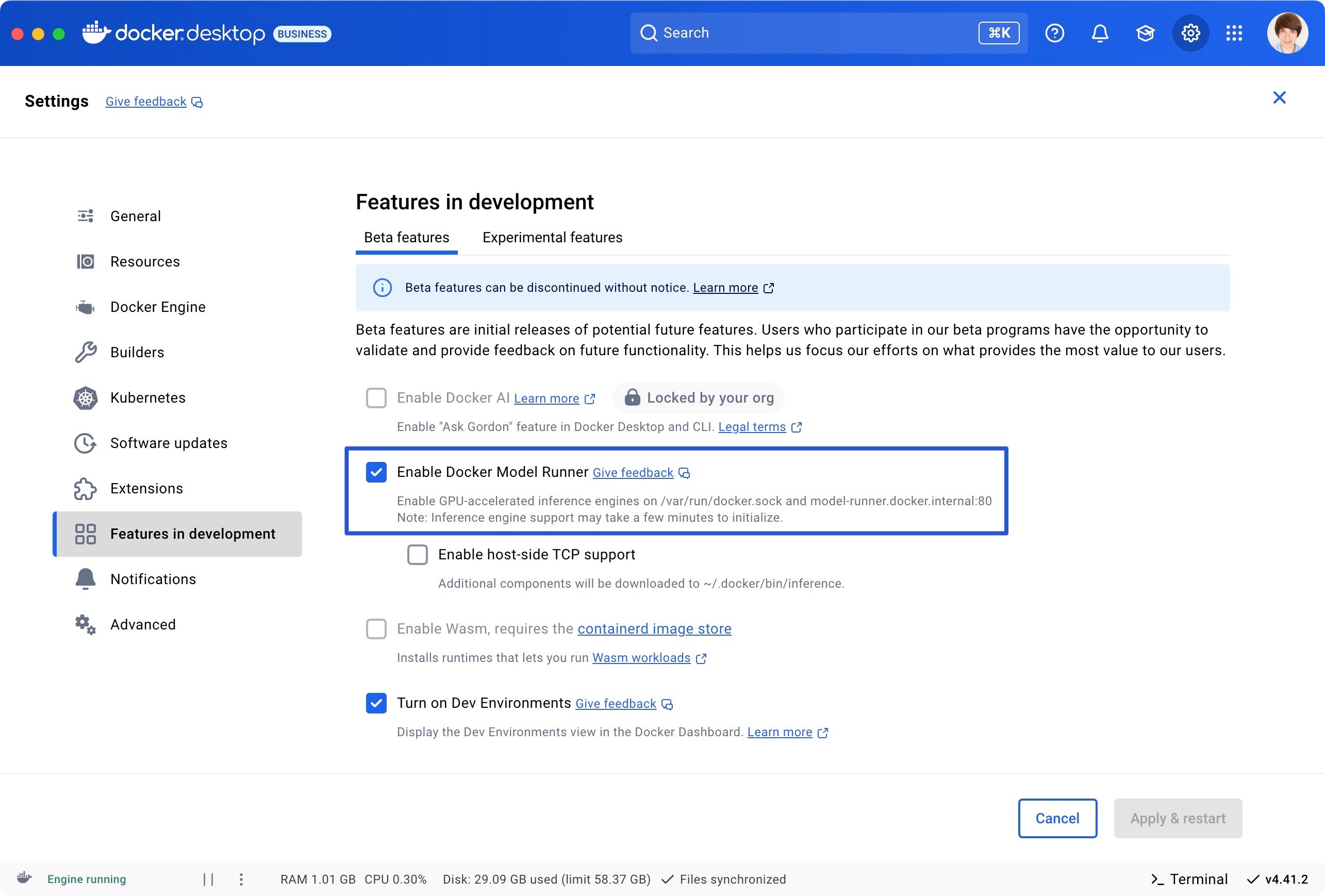

Step 0: Enable Docker Model Runner

1. Open Docker Desktop.

2. Go to Settings → Features in development.

3. Under the Experimental features tab, enable Access experimental features.

4. Click Apply and restart.

5. Quit and reopen Docker Desktop to ensure the changes take effect.

6. Reopen Settings → Features in development.

7. Switch to the Beta tab and check Enable Docker Model Runner.

8. (Optional) Enable host-side TCP support to access the API from localhost.

Once enabled, you can use the docker model CLI and manage models in the Models tab.

Screenshot of Docker Desktop’s Features in development tab with Docker Model Runner and Dev Environments enabled.

Step 1: Pull a Model

From Docker Hub:

docker model pull ai/smollm2

Or from Hugging Face (GGUF format):

docker model pull hf.co/bartowski/Llama-3.2-1B-Instruct-GGUF

Note: Only GGUF models are supported. GGUF (GPT-Generated Unified Format) is a binary file format designed for efficient local inference with runtimes like llama.cpp. It stores the model weights, tokenizer, and metadata in a single file, which makes it well suited to packaging and distributing LLMs in containerized environments.
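Because only GGUF files are accepted, it can help to confirm that a downloaded file really is GGUF before working with it. The following is a minimal Python sketch, not part of Docker Model Runner. The helper name read_gguf_header is hypothetical, and the code assumes the little-endian header layout used by GGUF version 2 and later: a 4-byte "GGUF" magic, a uint32 version, then uint64 tensor and metadata counts.

```python
import struct

GGUF_MAGIC = b"GGUF"
HEADER_SIZE = 24  # 4-byte magic + uint32 version + two uint64 counts


def read_gguf_header(path):
    """Return (version, tensor_count, metadata_kv_count) from a GGUF file.

    Hypothetical helper. Assumes the little-endian GGUF v2+ header layout;
    version 1 files used a different layout and are rejected.
    """
    with open(path, "rb") as f:
        header = f.read(HEADER_SIZE)
    if len(header) < HEADER_SIZE or header[:4] != GGUF_MAGIC:
        raise ValueError(f"{path} does not look like a GGUF file")
    version, tensor_count, kv_count = struct.unpack("<IQQ", header[4:])
    if version < 2:
        raise ValueError(f"unsupported GGUF version {version}")
    return version, tensor_count, kv_count
```

Calling read_gguf_header("model.gguf") on a valid file returns the format version along with the number of tensors and metadata entries. A ValueError means the file is not GGUF or is too old for this sketch to parse.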

Step 2: Tag and Push to Local Registry (Optional)

If you want to push models to a private or local registry:

Tag the model with your registry’s address (the port must match the host port the registry is published on):

docker model tag hf.co/bartowski/Llama-3.2-1B-Instruct-GGUF localhost:6000/foobar

Run a local Docker registry:

docker run -d -p 6000:5000 --name registry registry:2

Push the model to the local registry:

docker model push localhost:6000/foobar

Check your local models with:

docker model list

Step 3: Run the Model

Run a prompt (one-shot)

docker model run ai/smollm2 "What is Docker?"

Interactive chat mode

docker model run ai/smollm2

Note: Models are loaded into memory on demand and unloaded after 5 minutes of inactivity.

Step 4: Test via OpenAI-Compatible API

To call the model from the host:

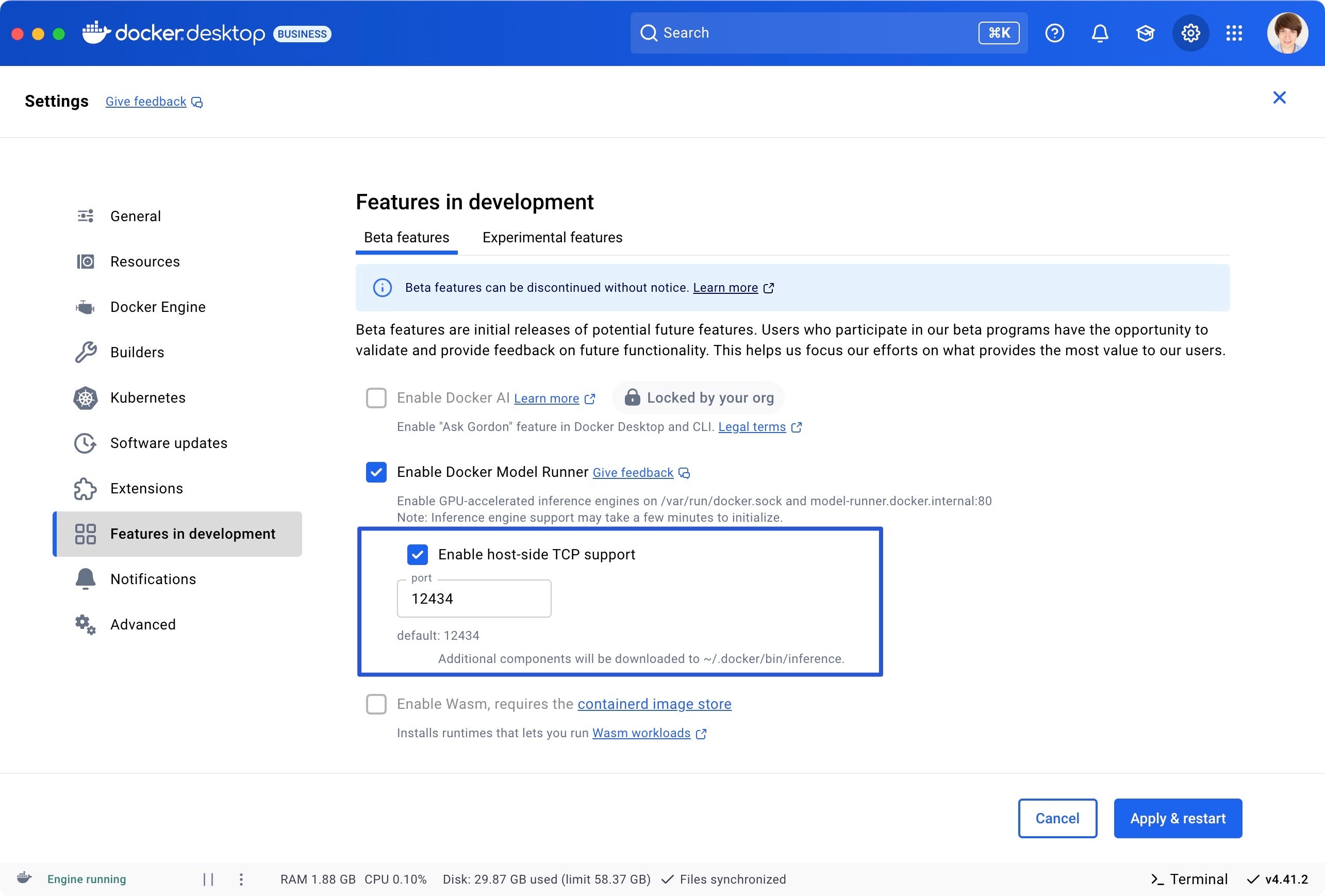

- Enable TCP host access for Model Runner (via Docker Desktop GUI or CLI)

Screenshot of Docker Desktop’s Features in development tab showing host-side TCP support enabled for Docker Model Runner.

docker desktop enable model-runner --tcp 12434

- Send a prompt using the OpenAI-compatible chat endpoint:

curl http://localhost:12434/engines/llama.cpp/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "ai/smollm2",
    "messages": [
      {"role": "system", "content": "You are a helpful assistant."},
      {"role": "user", "content": "Tell me about the fall of Rome."}
    ]
  }'

Note: No API key required — this runs locally and securely on your machine.
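The same request can be sent from application code. The sketch below uses only the Python standard library and assumes host-side TCP is enabled on port 12434, as in the curl example. The function names (build_chat_request, extract_reply, chat) are hypothetical, and the response parsing assumes the usual OpenAI-style body with a choices list.

```python
import json
import urllib.request

# Assumption: same host and port as the curl example above.
BASE_URL = "http://localhost:12434/engines/llama.cpp/v1"


def build_chat_request(model, user_prompt,
                       system_prompt="You are a helpful assistant."):
    """Build (but do not send) a POST request for the chat endpoint."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body):
    """Return the assistant text from an OpenAI-style completion body."""
    return response_body["choices"][0]["message"]["content"]


def chat(model, user_prompt, timeout=120):
    """Send one prompt to the local model and return its reply."""
    request = build_chat_request(model, user_prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.load(response))
```

With Model Runner running, print(chat("ai/smollm2", "What is Docker?")) should print the model's answer. The generous timeout is a deliberate choice: the first call may need to load the model into memory before it can respond.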

Step 5: Package Your Own Model

You can package your own pre-trained GGUF model as a Docker-compatible artifact if you already have a .gguf file — such as one downloaded from Hugging Face or converted using tools like llama.cpp.

Note: This guide assumes you already have a .gguf model file. It does not cover how to train or convert models to GGUF.

docker model package \
  --gguf "$(pwd)/model.gguf" \
  --license "$(pwd)/LICENSE.txt" \
  --push registry.example.com/ai/custom-llm:v1

This is ideal for custom-trained or private models. You can now pull it like any other model:

docker model pull registry.example.com/ai/custom-llm:v1

Step 6: Optimize & Iterate

- Use docker model logs to monitor model usage and debug issues

- Set up CI/CD to automate pulls, scans, and packaging

- Track model lineage and training versions to ensure consistency

- Use versioned tags (:v1, :2025-05, etc.) instead of latest when packaging custom models

- Only one model can be loaded at a time; requesting a new model will unload the previous one.
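The advice on versioned tags can be enforced in a CI step. The following is a rough Python sketch with hypothetical helper names (image_tag, is_pinned). It treats a reference as pinned when it carries an explicit tag other than latest, and it assumes digest references (name@sha256:...) should also count as pinned.

```python
def image_tag(reference):
    """Return the tag of a registry reference, or None if it has no tag."""
    without_digest = reference.split("@", 1)[0]
    # Look only at the last path component so a registry port such as
    # "localhost:6000" is not mistaken for a tag.
    name = without_digest.rsplit("/", 1)[-1]
    if ":" not in name:
        return None
    return name.rsplit(":", 1)[1]


def is_pinned(reference):
    """True when the reference names a digest or a tag other than 'latest'."""
    if "@" in reference:
        return True
    tag = image_tag(reference)
    return tag is not None and tag != "latest"
```

A pipeline could call is_pinned on every model reference it is about to push and fail the build when the result is False.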

Compose Integration (Optional)

Docker Compose v2.35+ (included in Docker Desktop 4.41+) introduces support for AI model services using a new provider.type: model. You can define models directly in your compose.yml and reference them in app services using depends_on.

During docker compose up, Docker Model Runner automatically pulls the model and starts it on the host system, then injects connection details into dependent services using environment variables such as MY_MODEL_URL and MY_MODEL_MODEL, where MY_MODEL matches the name of the model service.

This enables multi-container AI applications with no extra glue code.
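Inside a dependent service, the injected variables can be read like any other environment configuration. The sketch below is one way to do that in Python. The function name model_connection is hypothetical, and the rule used to derive the variable prefix (upper-casing the service name and replacing hyphens with underscores) is an assumption rather than documented behavior.

```python
import os


def model_connection(service_name, environ=None):
    """Return (url, model) for a Compose model service.

    Hypothetical helper. Assumes the prefix is the service name upper-cased
    with hyphens replaced by underscores, e.g. my_model -> MY_MODEL.
    """
    environ = os.environ if environ is None else environ
    prefix = service_name.upper().replace("-", "_")
    try:
        return environ[f"{prefix}_URL"], environ[f"{prefix}_MODEL"]
    except KeyError as missing:
        raise RuntimeError(
            f"{missing.args[0]} is not set; is the service listed in depends_on?"
        ) from None
```

For a model service named my_model, model_connection("my_model") returns the values of MY_MODEL_URL and MY_MODEL_MODEL, which the application can then pass to whatever OpenAI-compatible client it uses.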

Navigating AI Development Challenges

- Latency: Use quantized GGUF models

- Security: Never run unknown models; validate sources and attach licenses

- Compliance: Mask PII, respect data consent

- Costs: Run locally to avoid cloud compute bills

Best Practices

- Prefer GGUF models for optimal CPU inference

- Use the --license flag when packaging custom models to ensure compliance

- Use versioned tags (e.g., :v1, :2025-05) instead of latest

- Monitor model logs using docker model logs

- Validate model sources before pulling or packaging

- Only pull models from trusted sources (e.g., Docker Hub’s ai/ namespace or verified Hugging Face repos).

- Review the license and usage terms for each model before packaging or deploying.

The Road Ahead

- Support for Retrieval-Augmented Generation (RAG)

- Expanded multimodal support (text + images, video, audio)

- LLMs as services in Docker Compose (Requires Docker Compose v2.35+)

- More granular Model Dashboard features in Docker Desktop

- Secure packaging and deployment pipelines for private AI models

Docker Model Runner lets DevOps teams treat models like any other artifact — pulled, tagged, versioned, tested, and deployed.

Final Thoughts

You don’t need a GPU cluster or external API to build AI apps. Learn more and explore everything you can do with Docker Model Runner:

- Pull prebuilt models from Docker Hub or Hugging Face

- Run them locally using the CLI, API, or Docker Desktop’s Models tab

- Package and push your own models as OCI artifacts

- Integrate with your CI/CD pipelines securely

You can also find other helpful information to get started at:

- Docker Hub – AI Namespace

- Docker Model Runner Docs

- OpenAI-Compatible API Guide

- hello-genai Sample App

You’re not just deploying containers — you’re delivering intelligence.

Learn more

- Read our quickstart guide to Docker Model Runner.

- Find documentation for Model Runner.
