Vue lecture

Docker Scout Tutorial: Build Secure Container Images

Docker Desktop 4.42: Native IPv6, Built-In MCP, and Better Model Packaging

Docker Desktop 4.42 introduces powerful new capabilities that enhance network flexibility, improve security, and deepen AI toolchain integration, all while reducing setup friction. With native IPv6 support, a fully integrated MCP Toolkit, and major upgrades to Docker Model Runner and our AI agent Gordon, this release continues our commitment to helping developers move faster, ship smarter, and build securely across any environment. Whether you’re managing enterprise-grade networks or experimenting with agentic workflows, Docker Desktop 4.42 brings the tools you need right into your development workflows.

IPv6 support

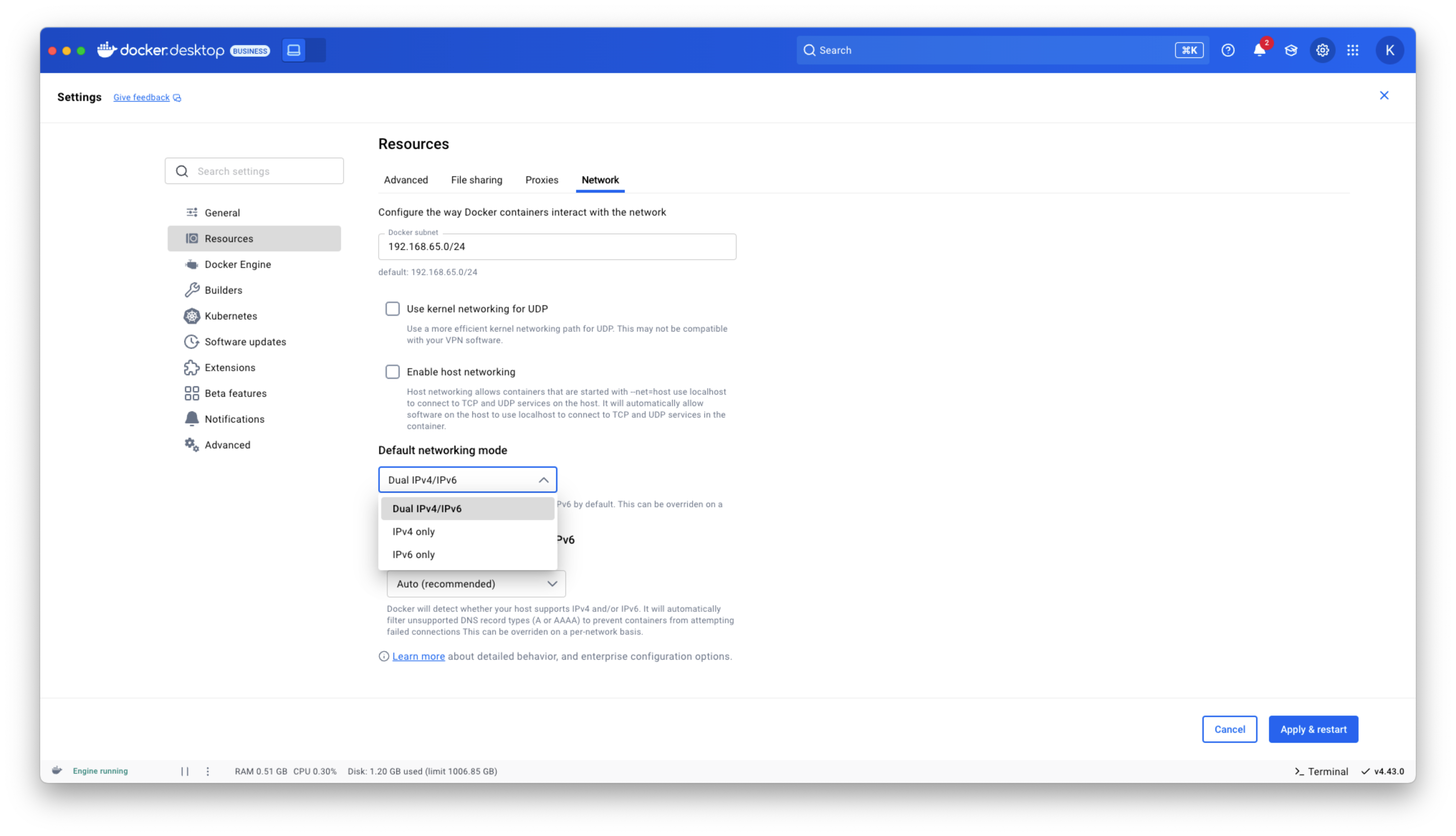

Docker Desktop now provides IPv6 networking capabilities with customization options to better support diverse network environments. You can now choose between dual IPv4/IPv6 (default), IPv4-only, or IPv6-only networking modes to align with your organization’s network requirements. The new intelligent DNS resolution behavior automatically detects your host’s network stack and filters unsupported record types, preventing connectivity timeouts in IPv4-only or IPv6-only environments.

These ipv6 settings are available in Docker Desktop Settings > Resources > Network section and can be enforced across teams using Settings Management, making Docker Desktop more reliable in complex enterprise network configurations including IPv6-only deployments.

Figure 1: Docker Desktop IPv6 settings

Docker MCP Toolkit integrated into Docker Desktop

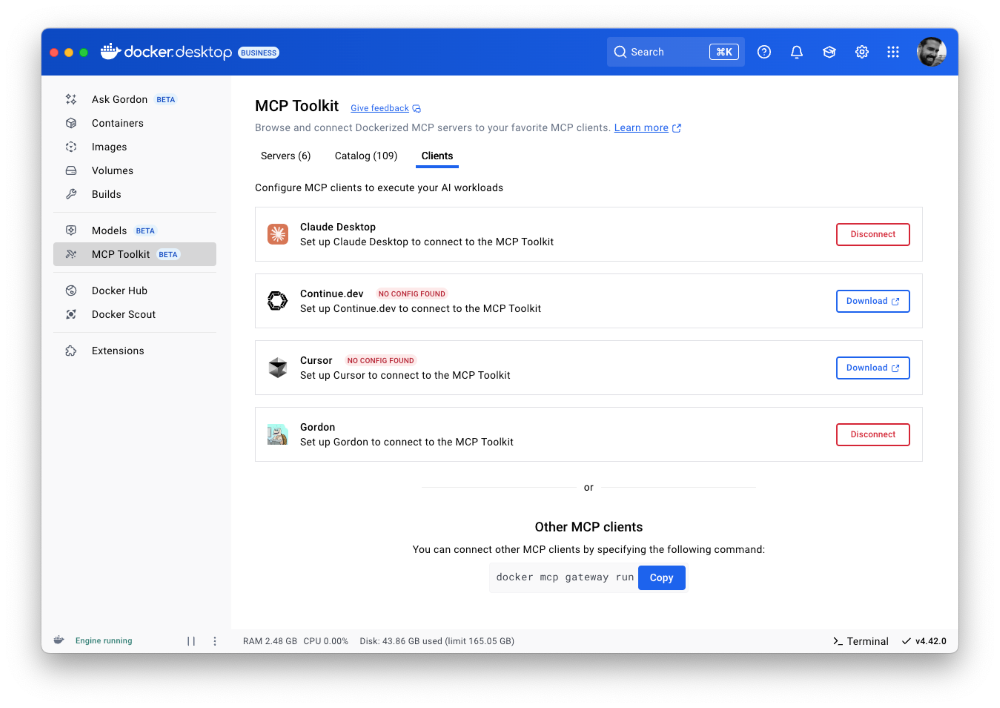

Last month, we launched the Docker MCP Catalog and Toolkit to help developers easily discover MCP servers and securely connect them to their favorite clients and agentic apps. We’re humbled by the incredible support from the community. User growth is up by over 50%, and we’ve crossed 1 million pulls! Now, we’re excited to share that the MCP Toolkit is built right into Docker Desktop, no separate extension required.

You can now access more than 100 MCP servers, including GitHub, MongoDB, Hashicorp, and more, directly from Docker Desktop – just enable the servers you need, configure them, and connect to clients like Claude Desktop, Cursor, Continue.dev, or Docker’s AI agent Gordon.

Unlike typical setups that run MCP servers via npx or uvx processes with broad access to the host system, Docker Desktop runs these servers inside isolated containers with well-defined security boundaries. All container images are cryptographically signed, with proper isolation of secrets and configuration data.

Figure 2: Docker MCP Toolkit is now integrated natively into Docker Desktop

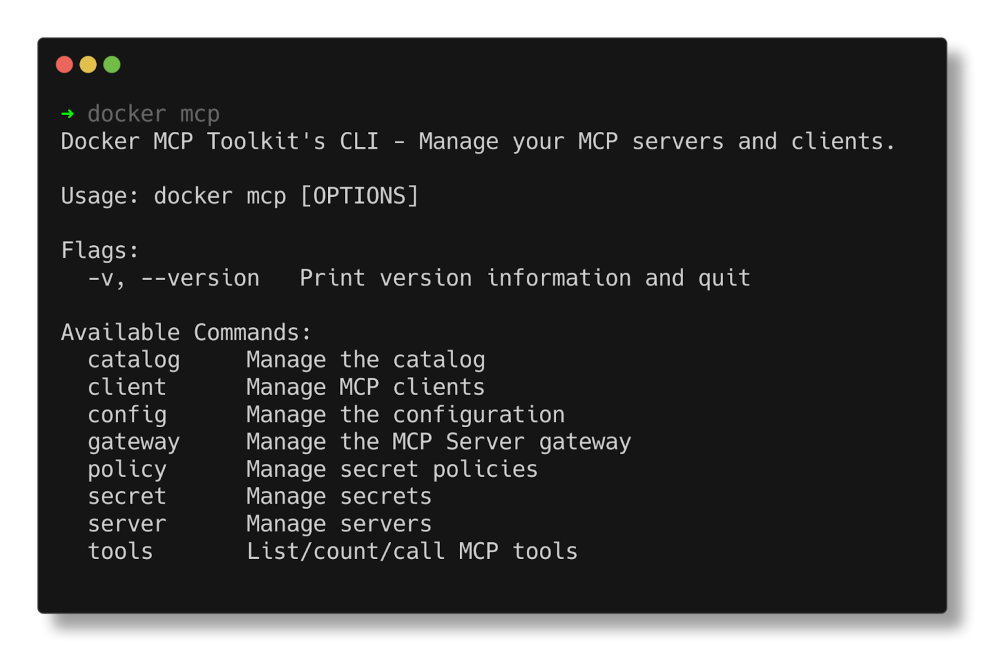

To meet developers where they are, we’re bringing Docker MCP support to the CLI, using the same command structure you’re already familiar with. With the new docker mcp commands, you can launch, configure, and manage MCP servers directly from the terminal. The CLI plugin offers comprehensive functionality, including catalog management, client connection setup, and secret management.

Figure 3: Docker MCP CLI commands.

Docker AI Agent Gordon Now Supports MCP Toolkit Integration

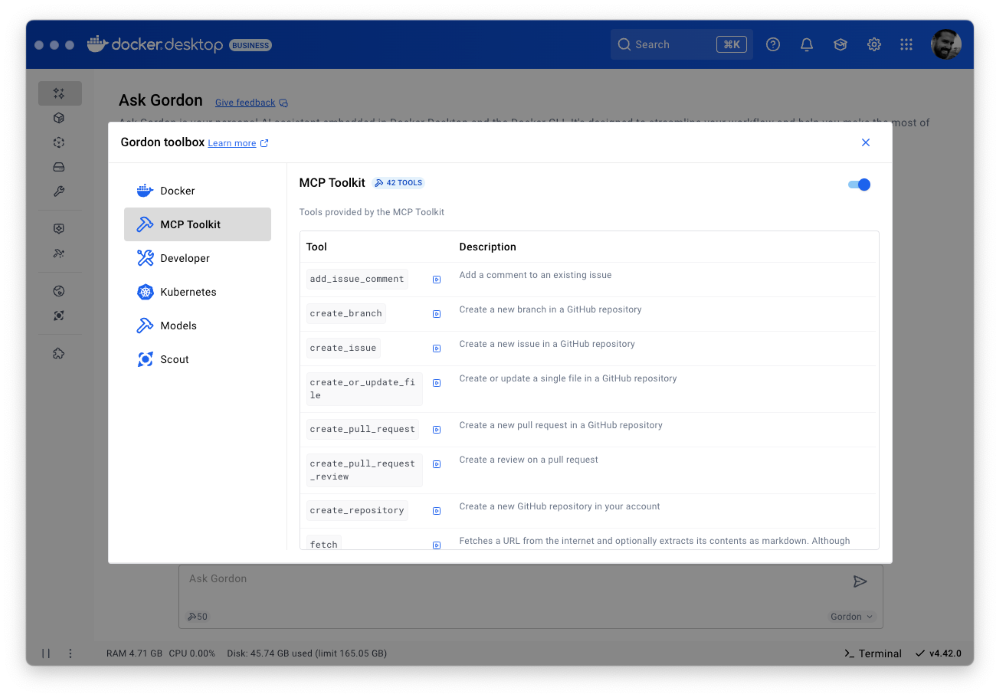

In this release, we’ve upgraded Gordon, Docker’s AI agent, with direct integration to the MCP Toolkit in Docker Desktop. To enable it, open Gordon, click the “Tools” button, and toggle on the “MCP” Toolkit option. Once activated, the MCP Toolkit tab will display tools available from any MCP servers you’ve configured.

Figure 4: Docker’s AI Agent Gordon now integrates with Docker’s MCP Toolkit, bringing 100+ MCP servers

This integration gives you immediate access to 100+ MCP servers with no extra setup, letting you experiment with AI capabilities directly in your Docker workflow. Gordon now acts as a bridge between Docker’s native tooling and the broader AI ecosystem, letting you leverage specialized tools for everything from screenshot capture to data analysis and API interactions – all from a consistent, unified interface.

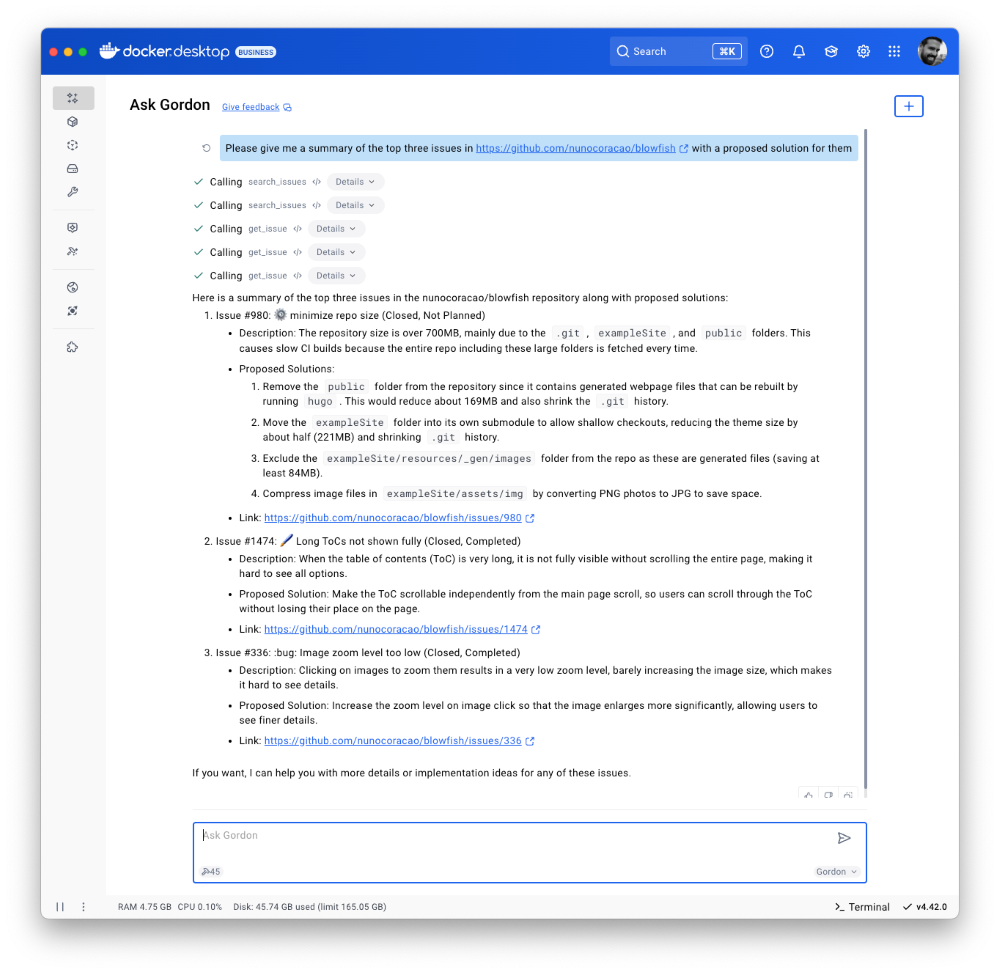

Figure 5: Docker’s AI Agent Gordon uses the GitHub MCP server to pull issues and suggest solutions.

Finally, we’ve also improved the Dockerize feature with expanded support for Java, Kotlin, Gradle, and Maven projects. These improvements make it easier to containerize a wider range of applications with minimal configuration. With expanded containerization capabilities and integrated access to the MCP Toolkit, Gordon is more powerful than ever. It streamlines container workflows, reduces repetitive tasks, and gives you access to specialized tools, so you can stay focused on building, shipping, and running your applications efficiently.

Docker Model Runner adds Qualcomm support, Docker Engine Integration, and UX Upgrades

Staying true to our philosophy of giving developers more flexibility and meeting them where they are, the latest version of Docker Model Runner adds broader OS support, deeper integration with popular Docker tools, and improvements in both performance and usability.

In addition to supporting Apple Silicon and Windows systems with NVIDIA GPUs, Docker Model Runner now works on Windows devices with Qualcomm chipsets. Under the hood, we’ve upgraded our inference engine to use the latest version of llama.cpp, bringing significantly enhanced tool calling capabilities to your AI applications.Docker Model Runner can now be installed directly in Docker Engine Community Edition across multiple Linux distributions supported by Docker Engine. This integration is particularly valuable for developers looking to incorporate AI capabilities into their CI/CD pipelines and automated testing workflows. To get started, check out our documentation for the setup guide.

Get Up and Running with Models Faster

The Docker Model Runner user experience has been upgraded with expanded GUI functionality in Docker Desktop. All of these UI enhancements are designed to help you get started with Model Runner quickly and build applications faster. A dedicated interface now includes three new tabs that simplify model discovery, management, and streamline troubleshooting workflows. Additionally, Docker Desktop’s updated GUI introduces a more intuitive onboarding experience with streamlined “two-click” actions.

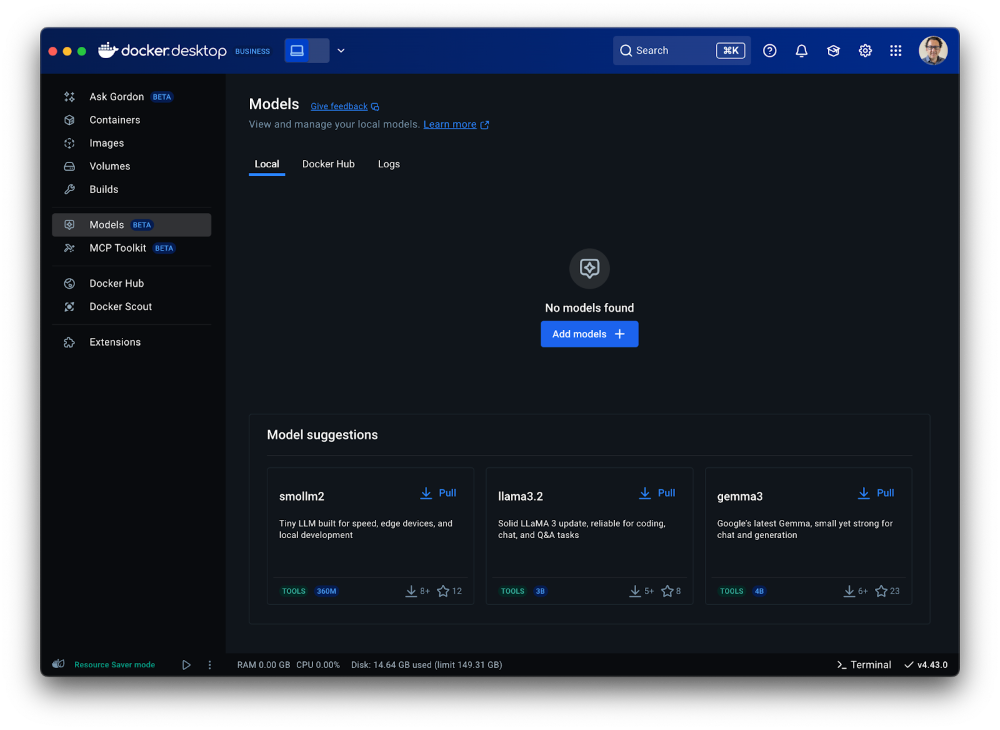

After clicking on the Model tab, you’ll see three new sub-tabs. The first, labeled “Local,” displays a set of models in various sizes that you can quickly pull. Once a model is pulled, you can launch a chat interface to test and experiment with it immediately.

Figure 6: Access a set of models of various sizes to get quickly started in Models menu of Docker Desktop

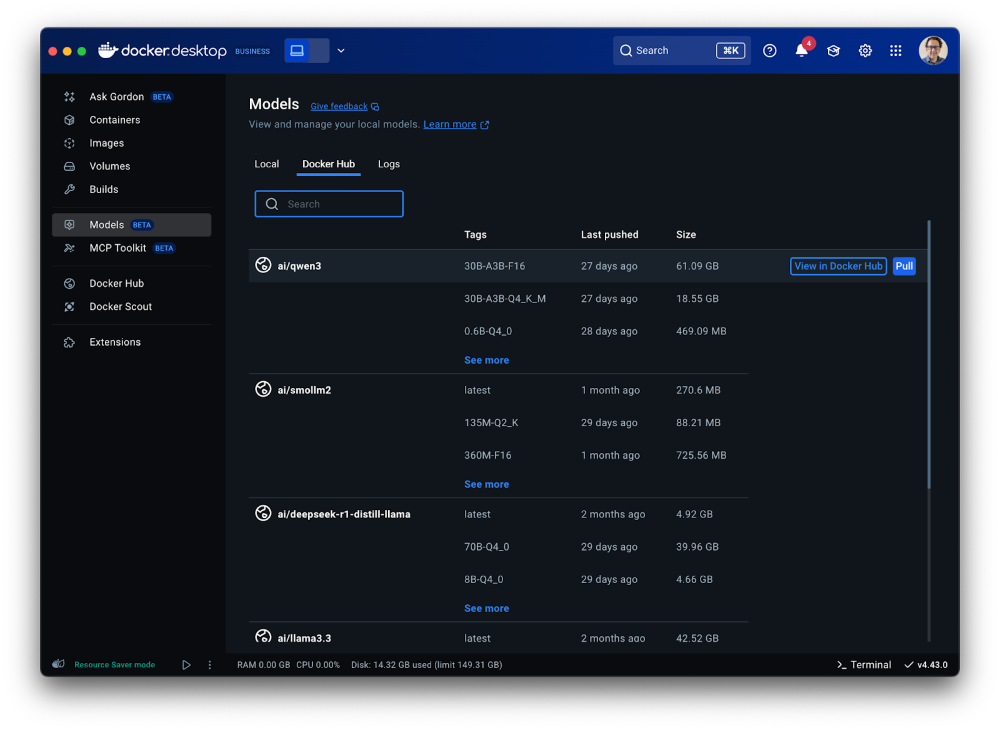

The second tab ”Docker Hub” offers a comprehensive view for browsing and pulling models from Docker Hub’s AI Catalog, making it easy to get started directly within Docker Desktop, without switching contexts.

Figure 7: A shortcut to the Model catalog from Docker Hub in Models menu of Docker Desktop

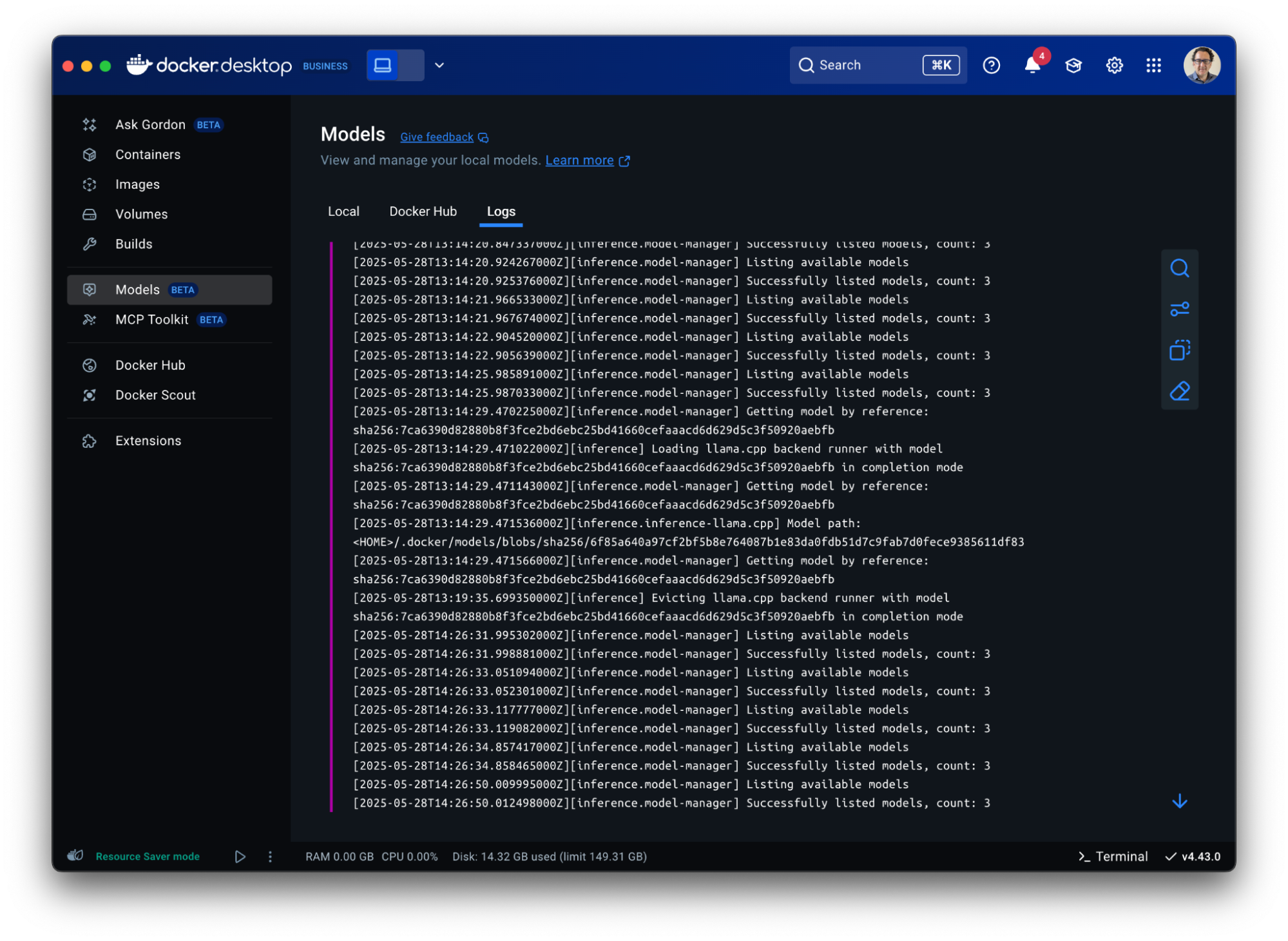

The third tab “Logs” offers real-time access to the inference engine’s log tail, giving developers immediate visibility into model execution status and debugging information directly within the Docker Desktop interface.

Figure 8: Gain visibility into model execution status and debugging information in Docker Desktop

Model Packaging Made Simple via CLI

As part of the Docker Model CLI, the most significant enhancement is the introduction of the docker model package command. This new command enables developers to package their models from GGUF format into OCI-compliant artifacts, fundamentally transforming how AI models are distributed and shared. It enables seamless publishing to both public and private and OCI-compatible repositories such as Docker Hub and establishes a standardized, secure workflow for model distribution, using the same trusted Docker tools developers already rely on. See our docs for more details.

Conclusion

From intelligent networking enhancements to seamless AI integrations, Docker Desktop 4.42 makes it easier than ever to build with confidence. With native support for IPv6, in-app access to 100+ MCP servers, and expanded platform compatibility for Docker Model Runner, this release is all about meeting developers where they are and equipping them with the tools to take their work further. Update to the latest version today and unlock everything Docker Desktop 4.42 has to offer.

Learn more

- Authenticate and update today to receive your subscription level’s newest Docker Desktop features.

- Subscribe to the Docker Navigator Newsletter.

- Learn about our sign-in enforcement options.

- New to Docker? Create an account.

- Have questions? The Docker community is here to help.

Docker Desktop 4.41: Docker Model Runner supports Windows, Compose, and Testcontainers integrations, Docker Desktop on the Microsoft Store

Big things are happening in Docker Desktop 4.41! Whether you’re building the next AI breakthrough or managing development environments at scale, this release is packed with tools to help you move faster and collaborate smarter. From bringing Docker Model Runner to Windows (with NVIDIA GPU acceleration!), Compose and Testcontainers, to new ways to manage models in Docker Desktop, we’re making AI development more accessible than ever. Plus, we’ve got fresh updates for your favorite workflows — like a new Docker DX Extension for Visual Studio Code, a speed boost for Mac users, and even a new location for Docker Desktop on the Microsoft Store. Also, we’re enabling ACH transfer as a payment option for self-serve customers. Let’s dive into what’s new!

Docker Model Runner now supports Windows, Compose & Testcontainers

This release brings Docker Model Runner to Windows users with NVIDIA GPU support. We’ve also introduced improvements that make it easier to manage, push, and share models on Docker Hub and integrate with familiar tools like Docker Compose and Testcontainers. Docker Model Runner works with Docker Compose projects for orchestrating model pulls and injecting model runner services, and Testcontainers via its libraries. These updates continue our focus on helping developers build AI applications faster using existing tools and workflows.

In addition to CLI support for managing models, Docker Desktop now includes a dedicated “Models” section in the GUI. This gives developers more flexibility to browse, run, and manage models visually, right alongside their containers, volumes, and images.

Figure 1: Easily browse, run, and manage models from Docker Desktop

Further extending the developer experience, you can now push models directly to Docker Hub, just like you would with container images. This creates a consistent, unified workflow for storing, sharing, and collaborating on models across teams. With models treated as first-class artifacts, developers can version, distribute, and deploy them using the same trusted Docker tooling they already use for containers — no extra infrastructure or custom registries required.

docker model push <model>

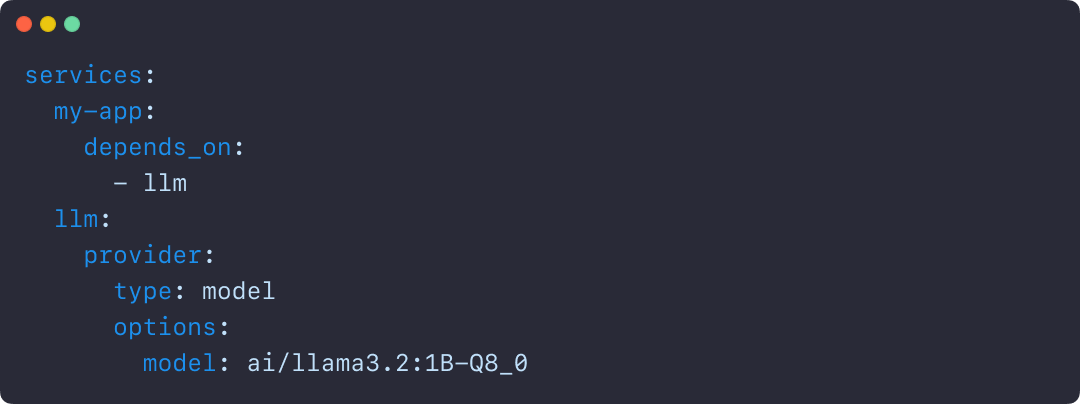

The Docker Compose integration makes it easy to define, configure, and run AI applications alongside traditional microservices within a single Compose file. This removes the need for separate tools or custom configurations, so teams can treat models like any other service in their dev environment.

Figure 2: Using Docker Compose to declare services, including running AI models

Similarly, the Testcontainers integration extends testing to AI models, with initial support for Java and Go and more languages on the way. This allows developers to run applications and create automated tests using AI services powered by Docker Model Runner. By enabling full end-to-end testing with Large Language Models, teams can confidently validate application logic, their integration code, and drive high-quality releases.

String modelName = "ai/gemma3";

DockerModelRunnerContainer modelRunnerContainer = new DockerModelRunnerContainer()

.withModel(modelName);

modelRunnerContainer.start();

OpenAiChatModel model = OpenAiChatModel.builder()

.baseUrl(modelRunnerContainer.getOpenAIEndpoint())

.modelName(modelName)

.logRequests(true)

.logResponses(true)

.build();

String answer = model.chat("Give me a fact about Whales.");

System.out.println(answer);

Docker DX Extension in Visual Studio: Catch issues early, code with confidence

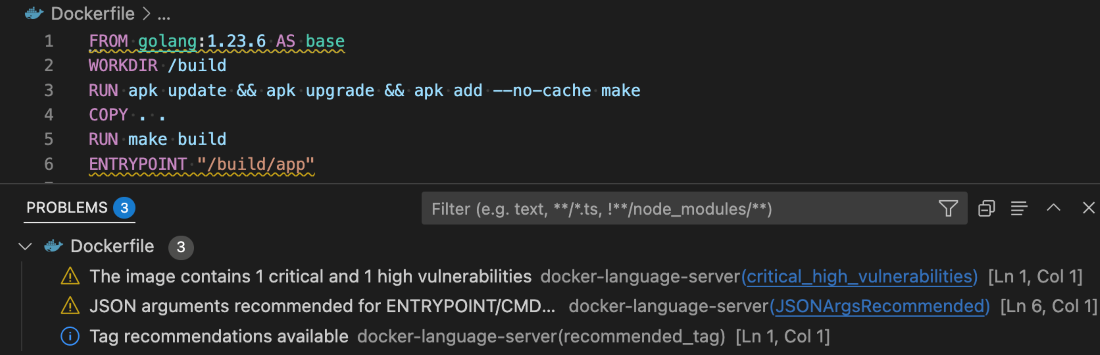

The Docker DX Extension is now live on the Visual Studio Marketplace. This extension streamlines your container development workflow with rich editing, linting features, and built-in vulnerability scanning. You’ll get inline warnings and best-practice recommendations for your Dockerfiles, powered by Build Check — a feature we introduced last year.

It also flags known vulnerabilities in container image references, helping you catch issues early in the dev cycle. For Bake files, it offers completion, variable navigation, and inline suggestions based on your Dockerfile stages. And for those managing complex Docker Compose setups, an outline view makes it easier to navigate and understand services at a glance.

Figure 3: Docker DX Extension in Visual Studio provides actionable recommendations for fixing vulnerabilities and optimizing Dockerfiles

Read more about this in our announcement blog and GitHub repo. Get started today by installing Docker DX – Visual Studio Marketplace

MacOS QEMU virtualization option deprecation

The QEMU virtualization option in Docker Desktop for Mac will be deprecated on July 14, 2025.

With the new Apple Virtualization Framework, you’ll experience improved performance, stability, and compatibility with macOS updates as well as tighter integration with Apple Silicon architecture.

What this means for you:

- If you’re using QEMU as your virtualization backend on macOS, you’ll need to switch to either Apple Virtualization Framework (default) or Docker VMM (beta) options.

- This does NOT affect QEMU’s role in emulating non-native architectures for multi-platform builds.

- Your multi-architecture builds will continue to work as before.

For complete details, please see our official announcement.

Introducing Docker Desktop in the Microsoft Store

Docker Desktop is now available for download from the Microsoft Store! We’re rolling out an EXE-based installer for Docker Desktop on Windows. This new distribution channel provides an enhanced installation and update experience for Windows users while simplifying deployment management for IT administrators across enterprise environments.

Key benefits

For developers:

- Automatic Updates: The Microsoft Store handles all update processes automatically, ensuring you’re always running the latest version without manual intervention.

- Streamlined Installation: Experience a more reliable setup process with fewer startup errors.

- Simplified Management: Manage Docker Desktop alongside your other applications in one familiar interface.

For IT admins:

- Native Intune MDM Integration: Deploy Docker Desktop across your organization with Microsoft’s native management tools.

- Centralized Deployment Control: Roll out Docker Desktop more easily through the Microsoft Store’s enterprise distribution channels.

- Automatic Updates Regardless of Security Settings: Updates are handled automatically by the Microsoft Store infrastructure, even in organizations where users don’t have direct store access.

- Familiar Process: The update mechanism maps to the widget command, providing consistency with other enterprise software management tools.

This new distribution option represents our commitment to improving the Docker experience for Windows users while providing enterprise IT teams with the management capabilities they need.

Unlock greater flexibility: Enable ACH transfer as a payment option for self-serve customers

We’re focused on making it easier for teams to scale, grow, and innovate. All on their own terms. That’s why we’re excited to announce an upgrade to the self-serve purchasing experience: customers can pay via ACH transfer starting on 4/30/25.

Historically, self-serve purchases were limited to credit card payments, forcing many customers who could not use credit cards into manual sales processes, even for small seat expansions. With the introduction of an ACH transfer payment option, customers can choose the payment method that works best for their business. Fewer delays and less unnecessary friction.

This payment option upgrade empowers customers to:

- Purchase more independently without engaging sales

- Choose between credit card or ACH transfer with a verified bank account

By empowering enterprises and developers, we’re freeing up your time, and ours, to focus on what matters most: building, scaling, and succeeding with Docker.

Visit our documentation to explore the new payment options, or log in to your Docker account to get started today!

Wrapping up

With Docker Desktop 4.41, we’re continuing to meet developers where they are — making it easier to build, test, and ship innovative apps, no matter your stack or setup. Whether you’re pushing AI models to Docker Hub, catching issues early with the Docker DX Extension, or enjoying faster virtualization on macOS, these updates are all about helping you do your best work with the tools you already know and love. We can’t wait to see what you build next!

Learn more

- Authenticate and update today to receive your subscription level’s newest Docker Desktop features.

- Subscribe to the Docker Navigator Newsletter.

- Learn about our sign-in enforcement options.

- New to Docker? Create an account.

- Have questions? The Docker community is here to help.

8 Ways to Empower Engineering Teams to Balance Productivity, Security, and Innovation

This post was contributed by Lance Haig, a solutions engineer at Docker.

In today’s fast-paced development environments, balancing productivity with security while rapidly innovating is a constant juggle for senior leaders. Slow feedback loops, inconsistent environments, and cumbersome tooling can derail progress. As a solutions engineer at Docker, I’ve learned from my conversations with industry leaders that a key focus for senior leaders is on creating processes and providing tools that let developers move faster without compromising quality or security.

Let’s explore how Docker’s suite of products and Docker Business empowers industry leaders and their development teams to innovate faster, stay secure, and deliver impactful results.

1. Create a foundation for reliable workflows

A recurring pain point I’ve heard from senior leaders is the delay between code commits and feedback. One leader described how their team’s feedback loops stretched to eight hours, causing delays, frustration, and escalating costs.

Optimizing feedback cycles often involves localizing testing environments and offloading heavy build tasks. Teams leveraging containerized test environments — like Testcontainers Cloud — reduce this feedback loop to minutes, accelerating developer output. Similarly, offloading complex builds to managed cloud services ensures infrastructure constraints don’t block developers. The time saved here is directly reinvested in faster iteration cycles.

Incorporating Docker’s suite of products can significantly enhance development efficiency by reducing feedback loops. For instance, The Warehouse Group, New Zealand’s largest retail chain, transformed its development process by adopting Docker. This shift enabled developers to test applications locally, decreasing feedback loops from days to minutes. Consequently, deployments that previously took weeks were streamlined to occur within an hour of code submission.

2. Shorten feedback cycles to drive results

Inconsistent development environments continue to plague engineering organizations. These mismatches lead to wasted time troubleshooting “works-on-my-machine” errors or inefficiencies across CI/CD pipelines. Organizations achieve consistent environments across local, staging, and production setups by implementing uniform tooling, such as Docker Desktop.

For senior leaders, the impact isn’t just technical: predictable workflows simplify onboarding, reduce new hires’ time to productivity, and establish an engineering culture focused on output rather than firefighting.

For example, Ataccama, a data management company, leveraged Docker to expedite its deployment process. With containerized applications, Ataccama reduced application deployment lead times by 75%, achieving a 50% faster transition from development to production. By reducing setup time and simplifying environment configuration, Docker allows the team to spin up new containers instantly and shift focus to delivering value. This efficiency gain allowed the team to focus more on delivering value and less on managing infrastructure.

3. Empower teams to collaborate in distributed workflows

Today’s hybrid and remote workforces make developer collaboration more complex. Secure, pre-configured environments help eliminate blockers when working across teams. Leaders who adopt centralized, standardized configurations — even in zero-trust environments — reduce setup time and help teams remain focused.

Docker Build Cloud further simplifies collaboration in distributed workflows by enabling developers to offload resource-intensive builds to a secure, managed cloud environment. Teams can leverage parallel builds, shared caching, and multi-architecture support to streamline workflows, ensuring that builds are consistent and fast across team members regardless of their location or platform. By eliminating the need for complex local build setups, Docker Build Cloud allows developers to focus on delivering high-quality code, not managing infrastructure.

Beyond tools, fostering collaboration requires a mix of practices: sharing containerized services, automating repetitive tasks, and enabling quick rollbacks. The right combination allows engineering teams to align better, focus on goals, and deliver outcomes quickly.

Empowering engineering teams with streamlined workflows and collaborative tools is only part of the equation. Leaders must also evaluate how these efficiencies translate into tangible cost savings, ensuring their investments drive measurable business value.

To learn more about how Docker simplifies the complex, read From Legacy to Cloud-Native: How Docker Simplifies Complexity and Boosts Developer Productivity.

4. Reduce costs

Every organization feels pressured to manage budgets effectively while delivering on demanding expectations. However, leaders can realize cost savings in unexpected areas, including hiring, attrition, and infrastructure optimization, by adopting consumption-based pricing models, streamlining operations, and leveraging modern tooling.

Easy access to all Docker products provides flexibility and scalability

Updated Docker plans make it easier for development teams to access everything they need under one subscription. Consumption is included for each new product, and more can be added as needed. This allows organizations to scale resources as their needs evolve and effectively manage their budgets.

Cost savings through streamlined operations

Organizations adopting Docker Business have reported significant reductions in infrastructure costs. For instance, a leading beauty company achieved a 25% reduction in infrastructure expenses by transitioning to a container-first development approach with Docker.

Bitso, a leading financial services company powered by cryptocurrency, switched to Docker Business from an alternative solution and reduced onboarding time from two weeks to a few hours per engineer, saving an estimated 7,700 hours in the eight months while scaling the team. Returning to Docker after spending almost two years with the alternative open-source solution proved more cost-effective, decreasing the time spent onboarding, troubleshooting, and debugging. Further, after transitioning back to Docker, Bitso has experienced zero new support tickets related to Docker, significantly reducing the platform support burden.

Read the Bitso case study to learn why Bitso returned to Docker Business.

Reducing infrastructure costs with modern tooling

Organizations that adopt Docker’s modern tooling realize significant infrastructure cost savings by optimizing resource usage, reducing operational overhead, and eliminating inefficiencies tied to legacy processes.

By leveraging Docker Build Cloud, offloading resource-intensive builds to a managed cloud service, and leveraging shared cache, teams can achieve builds up to 39 times faster, saving approximately one hour per day per developer. For example, one customer told us they saw their overall build times improve considerably through the shared cache feature. Previously on their local machine, builds took 15-20 minutes. Now, with Docker Build Cloud, it’s down to 110 seconds — a massive improvement.

Check out our calculator to estimate your savings with Build Cloud.

5. Retain talent through frictionless environments

High developer turnover is expensive and often linked to frustration with outdated or inefficient tools. I’ve heard countless examples of developers leaving not because of the work but due to the processes and tooling surrounding it. Providing modern, efficient environments that allow experimentation while safeguarding guardrails improves satisfaction and retention.

Year after year, developers rank Docker as their favorite developer tool. For example, more than 65,000 developers participated in Stack Overflow’s 2024 Developer Survey, which recognized Docker as the most-used and most-desired developer tool for the second consecutive year, and as the most-admired developer tool.

Providing modern, efficient environments with Docker tools can enhance developer satisfaction and retention. While specific metrics vary, streamlined workflows and reduced friction are commonly cited as factors that improve team morale and reduce turnover. Retaining experienced developers not only preserves institutional knowledge but also reduces the financial burden of hiring and onboarding replacements.

6. Efficiently manage infrastructure

Consolidating development and operational tooling reduces redundancy and lowers overall IT spend. Organizations that migrate to standardized platforms see a decrease in toolchain maintenance costs and fewer internal support tickets. Simplified workflows mean IT and DevOps teams spend less time managing environments and more time delivering strategic value.

Some leaders, however, attempt to build rather than buy solutions for developer workflows, seeing it as cost-saving. This strategy carries risks: reliance on a single person or small team to maintain open-source tooling can result in technical debt, escalating costs, and subpar security. By contrast, platforms like Docker Business offer comprehensive protection and support, reducing long-term risks.

Cost management and operational efficiency go hand-in-hand with another top priority: security. As development environments grow more sophisticated, ensuring airtight security becomes critical — not just for protecting assets but also for maintaining business continuity and customer trust.

7. Secure developer environments

Security remains a top priority for all senior leaders. As organizations transition to zero-trust architectures, the role of developer workstations within this model grows. Developer systems, while powerful, are not exempt from being targets for potential vulnerabilities. Securing developer environments without stifling productivity is an ongoing leadership challenge.

Tightening endpoint security without reducing autonomy

Endpoint security starts with visibility, and Docker makes it seamless. With Image Access Management, Docker ensures that only trusted and compliant images are used throughout your development lifecycle, reducing exposure to vulnerabilities. However, these solutions are only effective if they don’t create bottlenecks for developers.

Recently, a business leader told me that taking over a team without visibility into developer environments and security revealed significant risks. Developers were operating without clear controls, exposing the organization to potential vulnerabilities and inefficiencies. By implementing better security practices and centralized oversight, the leaders improved visibility and reduced operational risks, enabling a more secure and productive environment for developer teams. This shift also addressed compliance concerns by ensuring the organization could effectively meet regulatory requirements and demonstrate policy adherence.

Securing the software supply chain

From trusted content repositories to real-time SBOM insights, securing dependencies is critical for reducing attack surfaces. In conversations with security-focused leaders, the message is clear: Supply chain vulnerabilities are both a priority and a pain point. Leaders are finding success when embedding security directly into developer workflows rather than adding it as a reactive step. Tools like Docker Scout provide real-time visibility into vulnerabilities within your software supply chain, enabling teams to address risks before they escalate.

Securing developer environments strengthens the foundation of your engineering workflows. But for many industries, these efforts must also align with compliance requirements, where visibility and control over processes can mean the difference between growth and risk.

Improving compliance

Compliance may feel like an operational requirement, but for senior leadership, it’s a strategic asset. In regulated industries, compliance enables growth. In less regulated sectors, it builds customer trust. Regardless of the driver, visibility, and control are the cornerstones of effective compliance.

Proactive compliance, not reactive audits

Audits shouldn’t feel like fire drills. Proactive compliance ensures teams stay ahead of risks and disruptions. With the right processes in place — automated logging, integrated open-source software license checks, and clear policy enforcement — audit readiness becomes a part of daily operations. This proactive approach ensures teams stay ahead of compliance risks while reducing unnecessary disruptions.

While compliance ensures a stable and trusted operational baseline, innovation drives competitive advantage. Forward-thinking leaders understand that fostering creativity within a secure and compliant framework is the key to sustained growth.

8. Accelerating innovation

Every senior leader seeks to balance operational excellence and fostering innovation. Enabling engineers to move fast requires addressing two critical tensions: reducing barriers to experimentation and providing guardrails that maintain focus.

Building a culture of safe experimentation

Experimentation thrives in environments where developers feel supported and unencumbered. By establishing trusted guardrails — such as pre-approved images and automated rollbacks — teams gain the confidence to test bold ideas without introducing unnecessary risks.

From MVP to market quickly

Reducing friction in prototyping accelerates the time-to-market for Minimum Viable Products (MVPs). Leaders prioritizing local testing environments and streamlined approval processes create conditions where engineering creativity translates directly into a competitive advantage.

Innovation is no longer just about moving fast; it’s about moving deliberately. Senior leaders must champion the tools, practices, and environments that unlock their teams’ full potential.

Unlock the full potential of your teams

As a senior leader, you have a unique position to balance productivity, security, and innovation within your teams. Reflect on your current workflows and ask: Are your developers empowered with the right tools to innovate securely and efficiently? How does your organization approach compliance and risk management without stifling creativity?

Tools like Docker Business can be a strategic enabler, helping you address these challenges while maintaining focus on your goals.

Learn more

- Docker Scout: Integrates seamlessly into your development lifecycle, delivering vulnerability scans, image analysis, and actionable recommendations to address issues before they reach production.

- Docker Health Scores: A security grading system for container images that offers teams clear insights into their image security posture.

- Docker Hub: Access trusted, verified content, including Docker Official Images (DOI), to build secure and compliant software applications.

- Docker Official Images (DOI): A curated set of high-quality images that provide a secure foundation for containerized applications.

- Image Access Management (IAM): Enforce image-sharing policies and restrict access to sensitive components, ensuring only trusted team members access critical assets.

- Hardened Docker Desktop: A tamper-proof, enterprise-grade development environment that aligns with security standards to minimize risks from local development.

Shift-Left Testing with Testcontainers: Catching Bugs Early with Local Integration Tests

Modern software development emphasizes speed and agility, making efficient testing crucial. DORA research reveals that elite teams thrive with both high performance and reliability. They can achieve 127x faster lead times, 182x more deployments per year, 8x lower change failure rates and most impressively, 2,293x faster recovery times after incidents. The secret sauce is they “shift left.”

Shift-Left is a practice that moves integration activities like testing and security earlier in the development cycle, allowing teams to detect and fix issues before they reach production. By incorporating local and integration tests early, developers can prevent costly late-stage defects, accelerate development, and improve software quality.

In this article, you’ll learn how integration tests can help you catch defects earlier in the development inner loop and how Testcontainers can make them feel as lightweight and easy as unit tests. Finally, we’ll break down the impact that shifting left integration tests has on the development process velocity and lead time for changes according to DORA metrics.

Real-world example: Case sensitivity bug in user registration

In a traditional workflow, integration and E2E tests are often executed in the outer loop of the development cycle, leading to delayed bug detection and expensive fixes. For example, if you are building a user registration service where users enter their email addresses, you must ensure that the emails are case-insensitive and not duplicated when stored.

If case sensitivity is not handled properly and is assumed to be managed by the database, testing a scenario where users can register with duplicate emails differing only in letter case would only occur during E2E tests or manual checks. At that stage, it’s too late in the SDLC and can result in costly fixes.

By shifting testing earlier and enabling developers to spin up real services locally — such as databases, message brokers, cloud emulators, or other microservices — the testing process becomes significantly faster. This allows developers to detect and resolve defects sooner, preventing expensive late-stage fixes.

Let’s dive deep into this example scenario and how different types of tests would handle it.

Scenario

A new developer is implementing a user registration service and preparing for production deployment.

Code Example of the registerUser method

async registerUser(email: string, username: string): Promise<User> {

const existingUser = await this.userRepository.findOne({

where: {

email: email

}

});

if (existingUser) {

throw new Error("Email already exists");

}

...

}

The Bug

The registerUser method doesn’t handle case sensitivity properly and relies on the database or the UI framework to handle case insensitivity by default. So, in practice, users can register duplicate emails with both lower and upper letters (e.g., user@example.com and USER@example.com).

Impact

- Authentication issues arise because email case mismatches cause login failures.

- Security vulnerabilities appear due to duplicate user identities.

- Data inconsistencies complicate user identity management.

Testing method 1: Unit tests.

These tests only validate the code itself, so email case sensitivity verification relies on the database where SQL queries are executed. Since unit tests don’t run against a real database, they can’t catch issues like case sensitivity.

Testing method 2: End-to-end test or manual checks.

These verifications will only catch the issue after the code is deployed to a staging environment. While automation can help, detecting issues this late in the development cycle delays feedback to developers and makes fixes more time-consuming and costly.

Testing method 3: Using mocks to simulate database interactions with Unit Tests.

One approach that could work and allow us to iterate quickly would be to mock the database layer and define a mock repository that responds with the error. Then, we could write a unit test that executes really fast:

test('should prevent registration with same email in different case', async () => {

const userService = new UserRegistrationService(new MockRepository());

await userService.registerUser({ email: 'user@example.com', password: 'password123' });

await expect(userService.registerUser({ email: 'USER@example.com', password: 'password123' }))

.rejects.toThrow('Email already exists');

});

In the above example, the User service is created with a mock repository that’ll hold an in-memory representation of the database, i.e. as a map of users. This mock repository will detect if a user has passed twice, probably using the username as a non-case-sensitive key, returning the expected error.

Here, we have to code the validation logic in the mock, replicating what the User service or the database should do. Whenever the user’s validation needs a change, e.g. not including special characters, we have to change the mock too. Otherwise, our tests will assert against an outdated state of the validations. If the usage of mocks is spread across the entire codebase, this maintenance could be very hard to do.

To avoid that, we consider that integration tests with real representations of the services we depend on. In the above example, using the database repository is much better than mocks, because it provides us with more confidence on what we are testing.

Testing method 4: Shift-left local integration tests with Testcontainers

Instead of using mocks, or waiting for staging to run the integration or E2E tests, we can detect the issue earlier. This is achieved by enabling developers to run the integration tests for the project locally in the developer’s inner loop, using Testcontainers with a real PostgreSQL database.

Benefits

- Time Savings: Tests run in seconds, catching the bug early.

- More Realistic Testing: Uses an actual database instead of mocks.

- Confidence in Production Readiness: Ensures business-critical logic behaves as expected.

Example integration test

First, let’s set up a PostgreSQL container using the Testcontainers library and create a userRepository to connect to this PostgreSQL instance:

let userService: UserRegistrationService;

beforeAll(async () => {

container = await new PostgreSqlContainer("postgres:16")

.start();

dataSource = new DataSource({

type: "postgres",

host: container.getHost(),

port: container.getMappedPort(5432),

username: container.getUsername(),

password: container.getPassword(),

database: container.getDatabase(),

entities: [User],

synchronize: true,

logging: true,

connectTimeoutMS: 5000

});

await dataSource.initialize();

const userRepository = dataSource.getRepository(User);

userService = new UserRegistrationService(userRepository);

}, 30000);

Now, with initialized userService, we can use the registerUser method to test user registration with the real PostgreSQL instance:

test('should prevent registration with same email in different case', async () => {

await userService.registerUser({ email: 'user@example.com', password: 'password123' });

await expect(userService.registerUser({ email: 'USER@example.com', password: 'password123' }))

.rejects.toThrow('Email already exists');

});

Why This Works

- Uses a real PostgreSQL database via Testcontainers

- Validates case-insensitive email uniqueness

- Verifies email storage format

How Testcontainers helps

Testcontainers modules provide preconfigured implementations for the most popular technologies, making it easier than ever to write robust tests. Whether your application relies on databases, message brokers, cloud services like AWS (via LocalStack), or other microservices, Testcontainers has a module to streamline your testing workflow.

With Testcontainers, you can also mock and simulate service-level interactions or use contract tests to verify how your services interact with others. Combining this approach with local testing against real dependencies, Testcontainers provides a comprehensive solution for local integration testing and eliminates the need for shared integration testing environments, which are often difficult and costly to set up and manage. To run Testcontainers tests, you need a Docker context to spin up containers. Docker Desktop ensures seamless compatibility with Testcontainers for local testing.

Testcontainers Cloud: Scalable Testing for High-Performing Teams

Testcontainers is a great solution to enable integration testing with real dependencies locally. If you want to take testing a step further — scaling Testcontainers usage across teams, monitoring images used for testing, or seamlessly running Testcontainers tests in CI — you should consider using Testcontainers Cloud. It provides ephemeral environments without the overhead of managing dedicated test infrastructure. Using Testcontainers Cloud locally and in CI ensures consistent testing outcomes, giving you greater confidence in your code changes. Additionally, Testcontainers Cloud allows you to seamlessly run integration tests in CI across multiple pipelines, helping to maintain high-quality standards at scale. Finally, Testcontainers Cloud is more secure and ideal for teams and enterprises who have more stringent requirements for containers’ security mechanisms.

Measuring the business impact of shift-left testing

As we have seen, shift-left testing with Testcontainers significantly improves defect detection rate and time and reduces context switching for developers. Let’s take the example above and compare different production deployment workflows and how early-stage testing would impact developer productivity.

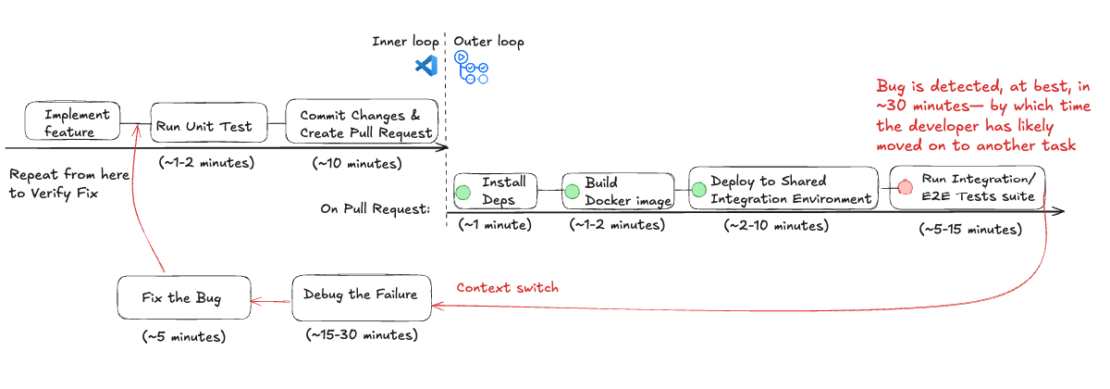

Traditional workflow (shared integration environment)

Process breakdown:

The traditional workflow comprises writing feature code, running unit tests locally, committing changes, and creating pull requests for the verification flow in the outer loop. If a bug is detected in the outer loop, developers have to go back to their IDE and repeat the process of running the unit test locally and other steps to verify the fix.

Figure 1: Workflow of a traditional shared integration environment broken down by time taken for each step.

Lead Time for Changes (LTC): It takes at least 1 to 2 hours to discover and fix the bug (more depending on CI/CD load and established practices). In the best-case scenario, it would take approximately 2 hours from code commit to production deployment. In the worst-case scenario, it may take several hours or even days if multiple iterations are required.

Deployment Frequency (DF) Impact: Since fixing a pipeline failure can take around 2 hours and there’s a daily time constraint (8-hour workday), you can realistically deploy only 3 to 4 times per day. If multiple failures occur, deployment frequency can drop further.

Additional associated costs: Pipeline workers’ runtime minutes and Shared Integration Environment maintenance costs.

Developer Context Switching: Since bug detection occurs about 30 minutes after the code commit, developers lose focus. This leads to an increased cognitive load after they have to constantly context switch, debug, and then context switch again.

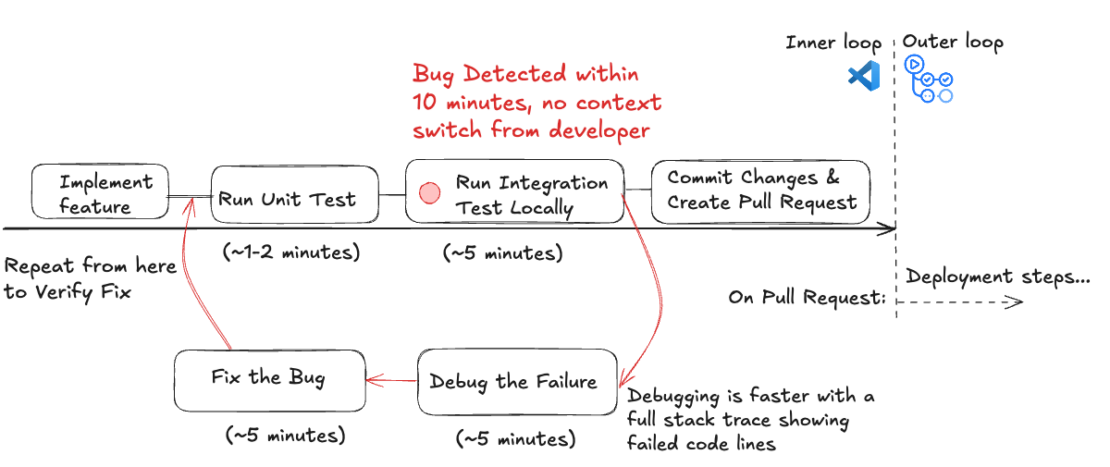

Shift-left workflow (local integration testing with Testcontainers)

Process breakdown:

The shift-left workflow is much simpler and starts with writing code and running unit tests. Instead of running integration tests in the outer loop, developers can run them locally in the inner loop to troubleshoot and fix issues. The changes are verified again before proceeding to the next steps and the outer loop.

Figure 2: Shift-Left Local Integration Testing with Testcontainers workflow broken down by time taken for each step. The feedback loop is much faster and saves developers time and headaches downstream.

Lead Time for Changes (LTC): It takes less than 20 minutes to discover and fix the bug in the developers’ inner loop. Therefore, local integration testing enables at least 65% faster defect identification than testing on a Shared Integration Environment.

Deployment Frequency (DF) Impact: Since the defect was identified and fixed locally within 20 minutes, the pipeline would run to production, allowing for 10 or more deployments daily.

Additional associated costs: 5 Testcontainers Cloud minutes are consumed.

Developer Context Switching: No context switching for the developer, as tests running locally provide immediate feedback on code changes and let the developer stay focused within the IDE and in the inner loop.

Key Takeaways

| Traditional Workflow (Shared Integration Environment) | Shift-Left Workflow (Local Integration Testing with Testcontainers) | Improvements and further references | |

| Faster Lead Time for Changes (LTC | Code changes validated in hours or days. Developers wait for shared CI/CD environments. | Code changes validated in minutes. Testing is immediate and local. | >65% Faster Lead Time for Changes (LTC) – Microsoft reduced lead time from days to hours by adopting shift-left practices. |

| Higher Deployment Frequency (DF) | Deployment happens daily, weekly, or even monthly due to slow validation cycles. | Continuous testing allows multiple deployments per day. | 2x Higher Deployment Frequency – 2024 DORA Report shows shift-left practices more than double deployment frequency. Elite teams deploy 182x more often. |

| Lower Change Failure Rate (CFR) | Bugs that escape into production can lead to costly rollbacks and emergency fixes. | More bugs are caught earlier in CI/CD, reducing production failures. | Lower Change Failure Rate – IBM’s Systems Sciences Institute estimates defects found in production cost 15x more to fix than those caught early. |

| Faster Mean Time to Recovery (MTTR) | Fixes take hours, days, or weeks due to complex debugging in shared environments. | Rapid bug resolution with local testing. Fixes verified in minutes. | Faster MTTR—DORA’s elite performers restore service in less than one hour, compared to weeks to a month for low performers. |

| Cost Savings | Expensive shared environments, slow pipeline runs, high maintenance costs. | Eliminates costly test environments, reducing infrastructure expenses. | Significant Cost Savings – ThoughtWorks Technology Radar highlights shared integration environments as fragile and expensive. |

Table 1: Summary of key metrics improvement by using shifting left workflow with local testing using Testcontainers

Conclusion

Shift-left testing improves software quality by catching issues earlier, reducing debugging effort, enhancing system stability, and overall increasing developer productivity. As we’ve seen, traditional workflows relying on shared integration environments introduce inefficiencies, increasing lead time for changes, deployment delays, and cognitive load due to frequent context switching. In contrast, by introducing Testcontainers for local integration testing, developers can achieve:

- Faster feedback loops – Bugs are identified and resolved within minutes, preventing delays.

- More reliable application behavior – Testing in realistic environments ensures confidence in releases.

- Reduced reliance on expensive staging environments – Minimizing shared infrastructure cuts costs and streamlines the CI/CD process.

- Better developer flow state – Easily setting up local test scenarios and re-running them fast for debugging helps developers stay focused on innovation.

Testcontainers provides an easy and efficient way to test locally and catch expensive issues earlier. To scale across teams, developers can consider using Docker Desktop and Testcontainers Cloud to run unit and integration tests locally, in the CI, or ephemeral environments without the complexity of maintaining dedicated test infrastructure. Learn more about Testcontainers and Testcontainers Cloud in our docs.

Further Reading

- Sign up for a Testcontainers Cloud account.

- Follow the guide: Mastering Testcontainers Cloud by Docker: streamlining integration testing with containers

- Connect on the Testcontainers Slack.

- Get started with the Testcontainers guide.

- Learn about Testcontainers best practices.

- Learn about Spring Boot Application Testing and Development with Testcontainers

- Subscribe to the Docker Newsletter.

- Have questions? The Docker community is here to help.

- New to Docker? Get started.

How to Dockerize a Django App: Step-by-Step Guide for Beginners

One of the best ways to make sure your web apps work well in different environments is to containerize them. Containers let you work in a more controlled way, which makes development and deployment easier. This guide will show you how to containerize a Django web app with Docker and explain why it’s a good idea.

We will walk through creating a Docker container for your Django application. Docker gives you a standardized environment, which makes it easier to get up and running and more productive. This tutorial is aimed at those new to Docker who already have some experience with Django. Let’s get started!

Why containerize your Django application?

Django apps can be put into containers to help you work more productively and consistently. Here are the main reasons why you should use Docker for your Django project:

- Creates a stable environment: Containers provide a stable environment with all dependencies installed, so you don’t have to worry about “it works on my machine” problems. This ensures that you can reproduce the app and use it on any system or server. Docker makes it simple to set up local environments for development, testing, and production.

- Ensures reproducibility and portability: A Dockerized app bundles all the environment variables, dependencies, and configurations, so it always runs the same way. This makes it easier to deploy, especially when you’re moving apps between environments.

- Facilitates collaboration between developers: Docker lets your team work in the same environment, so there’s less chance of conflicts from different setups. Shared Docker images make it simple for your team to get started with fewer setup requirements.

- Speeds up deployment processes: Docker makes it easier for developers to get started with a new project quickly. It removes the hassle of setting up development environments and ensures everyone is working in the same place, which makes it easier to merge changes from different developers.

Getting started with Django and Docker

Setting up a Django app in Docker is straightforward. You don’t need to do much more than add in the basic Django project files.

Tools you’ll need

To follow this guide, make sure you first:

- Install Docker Desktop and Docker Compose on your machine.

- Use a Docker Hub account to store and access Docker images.

- Make sure Django is installed on your system.

If you need help with the installation, you can find detailed instructions on the Docker and Django websites.

How to Dockerize your Django project

The following six steps include code snippets to guide you through the process.

Step 1: Set up your Django project

1. Initialize a Django project.

If you don’t have a Django project set up yet, you can create one with the following commands:

django-admin startproject my_docker_django_app cd my_docker_django_app

2. Create a requirements.txt file.

In your project, create a requirements.txt file to store dependencies:

pip freeze > requirements.txt

3. Update key environment settings.

You need to change some sections in the settings.py file to enable them to be set using environment variables when the container is started. This allows you to change these settings depending on the environment you are working in.

# The secret key

SECRET_KEY = os.environ.get("SECRET_KEY")

DEBUG = bool(os.environ.get("DEBUG", default=0))

ALLOWED_HOSTS = os.environ.get("DJANGO_ALLOWED_HOSTS","127.0.0.1").split(",")

Step 2: Create a Dockerfile

A Dockerfile is a script that tells Docker how to build your Docker image. Put it in the root directory of your Django project. Here’s a basic Dockerfile setup for Django:

# Use the official Python runtime image FROM python:3.13 # Create the app directory RUN mkdir /app # Set the working directory inside the container WORKDIR /app # Set environment variables # Prevents Python from writing pyc files to disk ENV PYTHONDONTWRITEBYTECODE=1 #Prevents Python from buffering stdout and stderr ENV PYTHONUNBUFFERED=1 # Upgrade pip RUN pip install --upgrade pip # Copy the Django project and install dependencies COPY requirements.txt /app/ # run this command to install all dependencies RUN pip install --no-cache-dir -r requirements.txt # Copy the Django project to the container COPY . /app/ # Expose the Django port EXPOSE 8000 # Run Django’s development server CMD ["python", "manage.py", "runserver", "0.0.0.0:8000"]

Each line in the Dockerfile serves a specific purpose:

FROM: Selects the image with the Python version you need.WORKDIR: Sets the working directory of the application within the container.ENV: Sets the environment variables needed to build the applicationRUNandCOPYcommands: Install dependencies and copy project files.EXPOSEandCMD: Expose the Django server port and define the startup command.

You can build the Django Docker container with the following command:

docker build -t django-docker .

To see your image, you can run:

docker image list

The result will look something like this:

REPOSITORY TAG IMAGE ID CREATED SIZE django-docker latest ace73d650ac6 20 seconds ago 1.55GB

Although this is a great start in containerizing the application, you’ll need to make a number of improvements to get it ready for production.

- The CMD

manage.pyis only meant for development purposes and should be changed for a WSGI server. - Reduce the size of the image by using a smaller image.

- Optimize the image by using a multistage build process.

Let’s get started with these improvements.

Update requirements.txt

Make sure to add gunicorn to your requirements.txt. It should look like this:

asgiref==3.8.1 Django==5.1.3 sqlparse==0.5.2 gunicorn==23.0.0 psycopg2-binary==2.9.10

Make improvements to the Dockerfile

The Dockerfile below has changes that solve the three items on the list. The changes to the file are as follows:

- Updated the

FROM python:3.13image toFROM python:3.13-slim. This change reduces the size of the image considerably, as the image now only contains what is needed to run the application. - Added a multi-stage build process to the Dockerfile. When you build applications, there are usually many files left on the file system that are only needed during build time and are not needed once the application is built and running. By adding a build stage, you use one image to build the application and then move the built files to the second image, leaving only the built code. Read more about multi-stage builds in the documentation.

- Add the Gunicorn WSGI server to the server to enable a production-ready deployment of the application.

# Stage 1: Base build stage FROM python:3.13-slim AS builder # Create the app directory RUN mkdir /app # Set the working directory WORKDIR /app # Set environment variables to optimize Python ENV PYTHONDONTWRITEBYTECODE=1 ENV PYTHONUNBUFFERED=1 # Upgrade pip and install dependencies RUN pip install --upgrade pip # Copy the requirements file first (better caching) COPY requirements.txt /app/ # Install Python dependencies RUN pip install --no-cache-dir -r requirements.txt # Stage 2: Production stage FROM python:3.13-slim RUN useradd -m -r appuser && \ mkdir /app && \ chown -R appuser /app # Copy the Python dependencies from the builder stage COPY --from=builder /usr/local/lib/python3.13/site-packages/ /usr/local/lib/python3.13/site-packages/ COPY --from=builder /usr/local/bin/ /usr/local/bin/ # Set the working directory WORKDIR /app # Copy application code COPY --chown=appuser:appuser . . # Set environment variables to optimize Python ENV PYTHONDONTWRITEBYTECODE=1 ENV PYTHONUNBUFFERED=1 # Switch to non-root user USER appuser # Expose the application port EXPOSE 8000 # Start the application using Gunicorn CMD ["gunicorn", "--bind", "0.0.0.0:8000", "--workers", "3", "my_docker_django_app.wsgi:application"]

Build the Docker container image again.

docker build -t django-docker .

After making these changes, we can run a docker image list again:

REPOSITORY TAG IMAGE ID CREATED SIZE django-docker latest 3c62f2376c2c 6 seconds ago 299MB

You can see a significant improvement in the size of the container.

The size was reduced from 1.6 GB to 299MB, which leads to faster a deployment process when images are downloaded and cheaper storage costs when storing images.

You could use docker init as a command to generate the Dockerfile and compose.yml file for your application to get you started.

Step 3: Configure the Docker Compose file

A compose.yml file allows you to manage multi-container applications. Here, we’ll define both a Django container and a PostgreSQL database container.

The compose file makes use of an environment file called .env, which will make it easy to keep the settings separate from the application code. The environment variables listed here are standard for most applications:

services:

db:

image: postgres:17

environment:

POSTGRES_DB: ${DATABASE_NAME}

POSTGRES_USER: ${DATABASE_USERNAME}

POSTGRES_PASSWORD: ${DATABASE_PASSWORD}

ports:

- "5432:5432"

volumes:

- postgres_data:/var/lib/postgresql/data

env_file:

- .env

django-web:

build: .

container_name: django-docker

ports:

- "8000:8000"

depends_on:

- db

environment:

DJANGO_SECRET_KEY: ${DJANGO_SECRET_KEY}

DEBUG: ${DEBUG}

DJANGO_LOGLEVEL: ${DJANGO_LOGLEVEL}

DJANGO_ALLOWED_HOSTS: ${DJANGO_ALLOWED_HOSTS}

DATABASE_ENGINE: ${DATABASE_ENGINE}

DATABASE_NAME: ${DATABASE_NAME}

DATABASE_USERNAME: ${DATABASE_USERNAME}

DATABASE_PASSWORD: ${DATABASE_PASSWORD}

DATABASE_HOST: ${DATABASE_HOST}

DATABASE_PORT: ${DATABASE_PORT}

env_file:

- .env

volumes:

postgres_data:

And the example .env file:

DJANGO_SECRET_KEY=your_secret_key DEBUG=True DJANGO_LOGLEVEL=info DJANGO_ALLOWED_HOSTS=localhost DATABASE_ENGINE=postgresql_psycopg2 DATABASE_NAME=dockerdjango DATABASE_USERNAME=dbuser DATABASE_PASSWORD=dbpassword DATABASE_HOST=db DATABASE_PORT=5432

Step 4: Update Django settings and configuration files

1. Configure database settings.

Update settings.py to use PostgreSQL:

DATABASES = {

'default': {

'ENGINE': 'django.db.backends.{}'.format(

os.getenv('DATABASE_ENGINE', 'sqlite3')

),

'NAME': os.getenv('DATABASE_NAME', 'polls'),

'USER': os.getenv('DATABASE_USERNAME', 'myprojectuser'),

'PASSWORD': os.getenv('DATABASE_PASSWORD', 'password'),

'HOST': os.getenv('DATABASE_HOST', '127.0.0.1'),

'PORT': os.getenv('DATABASE_PORT', 5432),

}

}

2. Set ALLOWED_HOSTS to read from environment files.

In settings.py, set ALLOWED_HOSTS to:

# 'DJANGO_ALLOWED_HOSTS' should be a single string of hosts with a , between each.

# For example: 'DJANGO_ALLOWED_HOSTS=localhost 127.0.0.1,[::1]'

ALLOWED_HOSTS = os.environ.get("DJANGO_ALLOWED_HOSTS","127.0.0.1").split(",")

3. Set the SECRET_KEY to read from environment files.

In settings.py, set SECRET_KEY to:

# SECURITY WARNING: keep the secret key used in production secret!

SECRET_KEY = os.environ.get("DJANGO_SECRET_KEY")

4. Set DEBUG to read from environment files.

In settings.py, set DEBUG to:

# SECURITY WARNING: don't run with debug turned on in production!

DEBUG = bool(os.environ.get("DEBUG", default=0))

Step 5: Build and run your new Django project

To build and start your containers, run:

docker compose up --build

This command will download any necessary Docker images, build the project, and start the containers. Once complete, your Django application should be accessible at http://localhost:8000.

Step 6: Test and access your application

Once the app is running, you can test it by navigating to http://localhost:8000. You should see Django’s welcome page, indicating that your app is up and running. To verify the database connection, try running a migration:

docker compose run django-web python manage.py migrate

Troubleshooting common issues with Docker and Django

Here are some common issues you might encounter and how to solve them:

- Database connection errors: If Django can’t connect to PostgreSQL, verify that your database service name matches in

compose.ymlandsettings.py. - File synchronization issues: Use the

volumesdirective incompose.ymlto sync changes from your local files to the container. - Container restart loops or crashes: Use

docker compose logsto inspect container errors and determine the cause of the crash.

Optimizing your Django web application

To improve your Django Docker setup, consider these optimization tips:

- Automate and secure builds: Use Docker’s multi-stage builds to create leaner images, removing unnecessary files and packages for a more secure and efficient build.

- Optimize database access: Configure database pooling and caching to reduce connection time and boost performance.

- Efficient dependency management: Regularly update and audit dependencies listed in

requirements.txtto ensure efficiency and security.

Take the next step with Docker and Django

Containerizing your Django application with Docker is an effective way to simplify development, ensure consistency across environments, and streamline deployments. By following the steps outlined in this guide, you’ve learned how to set up a Dockerized Django app, optimize your Dockerfile for production, and configure Docker Compose for multi-container setups.

Docker not only helps reduce “it works on my machine” issues but also fosters better collaboration within development teams by standardizing environments. Whether you’re deploying a small project or scaling up for enterprise use, Docker equips you with the tools to build, test, and deploy reliably.

Ready to take the next step? Explore Docker’s powerful tools, like Docker Hub and Docker Scout, to enhance your containerized applications with scalable storage, governance, and continuous security insights.

Learn more

- Subscribe to the Docker Newsletter.

- Learn more about Docker commands, Docker Compose, and security in the Docker Docs.

- Find Dockerized Django projects for inspiration and guidance in GitHub.

- Discover Docker plugins that improve performance, logging, and security.

- Get the latest release of Docker Desktop.

- Have questions? The Docker community is here to help.

- New to Docker? Get started.

Mastering Peak Software Development Efficiency with Docker

In modern software development, businesses are searching for smarter ways to streamline workflows and deliver value faster. For developers, this means tackling challenges like collaboration and security head-on, while driving efficiency that contributes directly to business performance. But how do you address potential roadblocks before they become costly issues in production? The answer lies in optimizing the development inner loop — a core focus for the future of app development.

By identifying and resolving inefficiencies early in the development lifecycle, software development teams can overcome common engineering challenges such as slow dev cycles, spiraling infrastructure costs, and scaling challenges. With Docker’s integrated suite of development tools, developers can achieve new levels of engineering efficiency, creating high-quality software while delivering real business impact.

Let’s explore how Docker is transforming the development process, reducing operational overhead, and empowering teams to innovate faster.

Speed up software development lifecycles: Faster gains with less effort

A fast software development lifecycle is a crucial aspect for delivering value to users, maintaining a competitive edge, and staying ahead of industry trends. To enable this, software developers need workflows that minimize friction and allow them to iterate quickly without sacrificing quality. That’s where Docker makes a difference. By streamlining workflows, eliminating bottlenecks, and automating repetitive tasks, Docker empowers developers to focus on high-impact work that drives results.

Consistency across development environments is critical for improving speed. That’s why Docker helps developers create consistent environments across local, test, and production systems. In fact, a recent study reported developers experiencing a 6% increase in productivity when leveraging Docker Business. This consistency eliminates guesswork, ensuring developers can concentrate on writing code and improving features rather than troubleshooting issues. With Docker, applications behave predictably across every stage of the development lifecycle.

Docker also accelerates development by significantly reducing time spent on iteration and setup. More specifically, organizations leveraging Docker Business achieved a three-month faster time-to-market for revenue-generating applications. Engineering teams can move swiftly through development stages, delivering new features and bug fixes faster. By improving efficiency and adapting to evolving needs, Docker enables development teams to stay agile and respond effectively to business priorities.

Improve scaling agility: Flexibility for every scenario

Scalability is another essential for businesses to meet fluctuating demands and seize opportunities. Whether handling a surge in user traffic or optimizing resources during quieter periods, the ability to scale applications and infrastructure efficiently is a critical advantage. Docker makes this possible by enabling teams to adapt with speed and flexibility.

Docker’s cloud-native approach allows software engineering teams to scale up or down with ease to meet changing requirements. This flexibility supports experimentation with cutting-edge technologies like AI, machine learning, and microservices without disrupting existing workflows. With this added agility, developers can explore new possibilities while maintaining focus on delivering value.

Whether responding to market changes or exploring the potential of emerging tools, Docker equips companies to stay agile and keep evolving, ensuring their development processes are always ready to meet the moment.

Optimize resource efficiency: Get the most out of what you’ve got

Maximizing resource efficiency is crucial for reducing costs and maintaining agility. By making the most of existing infrastructure, businesses can avoid unnecessary expenses and minimize cloud scaling costs, meaning more resources for innovation and growth. Docker empowers teams to achieve this level of efficiency through its lightweight, containerized approach.

Docker containers are designed to be resource-efficient, enabling multiple applications to run in isolated environments on the same system. Unlike traditional virtual machines, containers minimize overhead while maintaining performance, consolidating workloads, and lowering the operational costs of maintaining separate environments. For example, a leading beauty company reduced infrastructure costs by 25% using Docker’s enhanced CPU and memory efficiency. This streamlined approach ensures businesses can scale intelligently while keeping infrastructure lean and effective.

By containerizing applications, businesses can optimize their infrastructure, avoiding costly upgrades while getting more value from their current systems. It’s a smarter, more efficient way to ensure your resources are working at their peak, leaving no capacity underutilized.

Establish cost-effective scaling: Growth without growing pains

Similarly, scaling efficiently is essential for businesses to keep up with growing demands, introduce new features, or adopt emerging technologies. However, traditional scaling methods often come with high upfront costs and complex infrastructure changes. Docker offers a smarter alternative, enabling development teams to scale environments quickly and cost-effectively.

With a containerized model, infrastructure can be dynamically adjusted to match changing needs. Containers are lightweight and portable, making it easy to scale up for spikes in demand or add new capabilities without overhauling existing systems. This flexibility reduces financial strain, allowing businesses to grow sustainably while maximizing the use of cloud resources.

Docker ensures that scaling is responsive and budget-friendly, empowering teams to focus on innovation and delivery rather than infrastructure costs. It’s a practical solution to achieve growth without unnecessary complexity or expense.

Software engineering efficiency at your fingertips

The developer community consistently ranks Docker highly, including choosing it as the most-used and most-admired developer tool in Stack Overflow’s Developer Survey. With Docker’s suite of products, teams can reach a new level of efficient software development by streamlining the dev lifecycle, optimizing resources, and providing agile, cost-effective scaling solutions. By simplifying complex processes in the development inner loop, Docker enables businesses to deliver high-quality software faster while keeping operational costs in check. This allows developers to focus on what they do best: building innovative, impactful applications.

By removing complexity, accelerating development cycles, and maximizing resource usage, Docker helps businesses stay competitive and efficient. And ultimately, their teams can achieve more in less time — meeting market demands with efficiency and quality.

Ready to supercharge your development team’s performance? Download our white paper to see how Docker can help streamline your workflow, improve productivity, and deliver software that stands out in the market.

Learn more

- Find a Docker plan that’s right for you.

- Subscribe to the Docker Newsletter.

- Get the latest release of Docker Desktop.

- New to Docker? Get started.

The Model Context Protocol: Simplifying Building AI apps with Anthropic Claude Desktop and Docker

Anthropic recently unveiled the Model Context Protocol (MCP), a new standard for connecting AI assistants and models to reliable data and tools. However, packaging and distributing MCP servers is very challenging due to complex environment setups across multiple architectures and operating systems. Docker is the perfect solution for this — it allows developers to encapsulate their development environment into containers, ensuring consistency across all team members’ machines and deployments consistent and predictable. In this blog post, we provide a few examples of using Docker to containerize Model Context Protocol (MCP) to simplify building AI applications.

What is Model Context Protocol (MCP)?

MCP (Model Context Protocol), a new protocol open-sourced by Anthropic, provides standardized interfaces for LLM applications to integrate with external data sources and tools. With MCP, your AI-powered applications can retrieve data from external sources, perform operations with third-party services, or even interact with local filesystems.

Among the use cases enabled by this protocol is the ability to expose custom tools to AI models. This provides key capabilities such as:

- Tool discovery: Helping LLMs identify tools available for execution

- Tool invocation: Enabling precise execution with the right context and arguments

Since its release, the developer community has been particularly energized. We asked David Soria Parra, Member of Technical Staff from Anthropic, why he felt MCP was having such an impact: “Our initial developer focus means that we’re no longer bound to one specific tool set. We are giving developers the power to build for their particular workflow.”

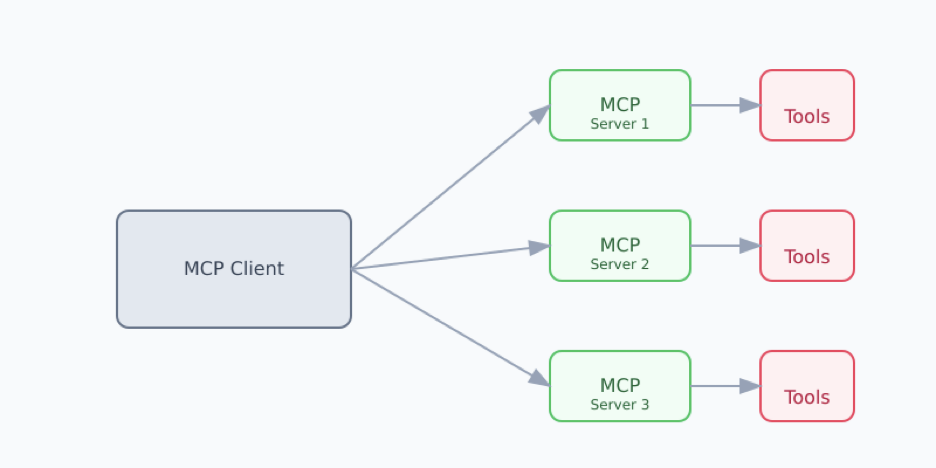

How does MCP work? What challenges exist?

MCP works by introducing the concept of MCP clients and MCP Servers — clients request resources and the servers handle the request and perform the requested action. MCP Clients are often embedded into LLM-based applications, such as the Claude Desktop App. The MCP Servers are launched by the client to then perform the desired work using any additional tools, languages, or processes needed to perform the work.

Examples of tools include filesystem access, GitHub and GitLab repo management, integrations with Slack, or retrieving or modifying state in Kubernetes clusters.

The goal of MCP servers is to provide reusable toolsets and reuse them across clients, like Claude Desktop – write one set of tools and reuse them across many LLM-based applications. But, packaging and distributing these servers is currently a challenge. Specifically:

- Environment conflicts: Installing MCP servers often requires specific versions of Node.js, Python, and other dependencies, which may conflict with existing installations on a user’s machine

- Lack of host isolation: MCP servers currently run on the host, granting access to all host files and resources

- Complex setup: MCP servers currently require users to download and configure all of the code and configure the environment, making adoption difficult

- Cross-platform challenges: Running the servers consistently across different architectures (e.g., x86 vs. ARM, Windows vs Mac) or operating systems introduces additional complexity

- Dependencies: Ensuring that server-specific runtime dependencies are encapsulated and distributed safely.

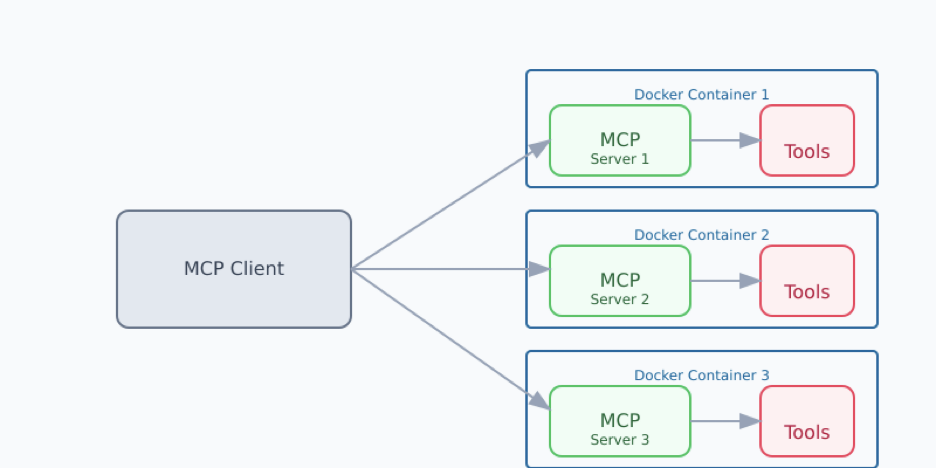

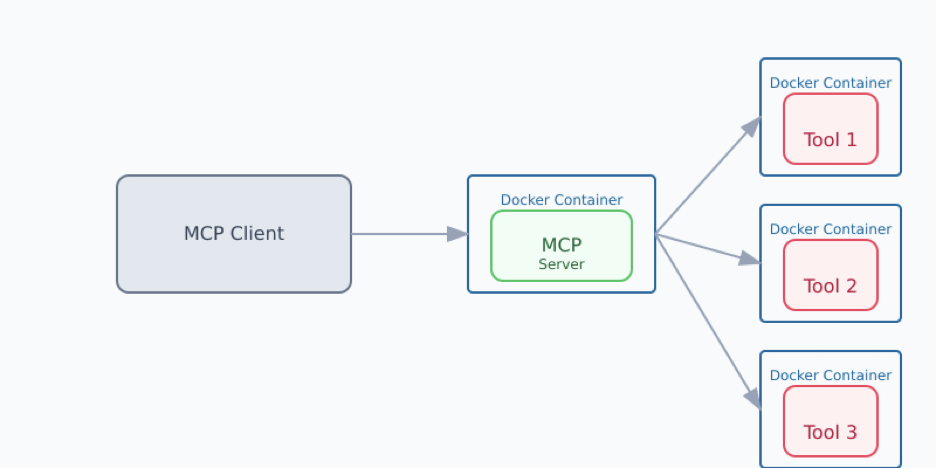

How does Docker help?

Docker solves these challenges by providing a standardized method and tooling to develop, package, and distribute applications, including MCP servers. By packaging these MCP servers as containers, the challenges of isolation or environment differences disappear. Users can simply run a container, rather than spend time installing dependencies and configuring the runtime.

Docker Desktop provides a development platform to build, test, and run these MCP servers. Docker Hub is the world’s largest repository of container images, making it the ideal choice to distribute containerized MCP servers. Docker Scout helps ensure images are kept secure and free of vulnerabilities. Docker Build Cloud helps you build images more quickly and reliably, especially when cross-platform builds are required.

The Docker suite of products brings benefits to both publishers and consumers — publishers can easily package and distribute their servers and consumers can easily download and run them with little to no configuration.

Again quoting David Soria Parra,

“Building an MCP server for ffmpeg would be a tremendously difficult undertaking without Docker. Docker is one of the most widely used packaging solutions for developers. The same way it solved the packaging problem for the cloud, it now has the potential to solve the packaging problem for rich AI agents”.

As we continue to explore how MCP allows us to connect to existing ecosystems of tools, we also envision MCP bridges to existing containerized tools.

Try it yourself with containerized Reference Servers

As part of publishing the specification, Anthropic published an initial set of reference servers. We have worked with the Anthropic team to create Docker images for these servers and make them available from the new Docker Hub mcp namespace.

Developers can try this out today using Claude Desktop as the MCP client and Docker Desktop to run any of the reference servers by updating your claude_desktop_config.json file.

The list of current servers documents how to update the claude_desktop_config.json to activate these MCP server docker containers on your local host.

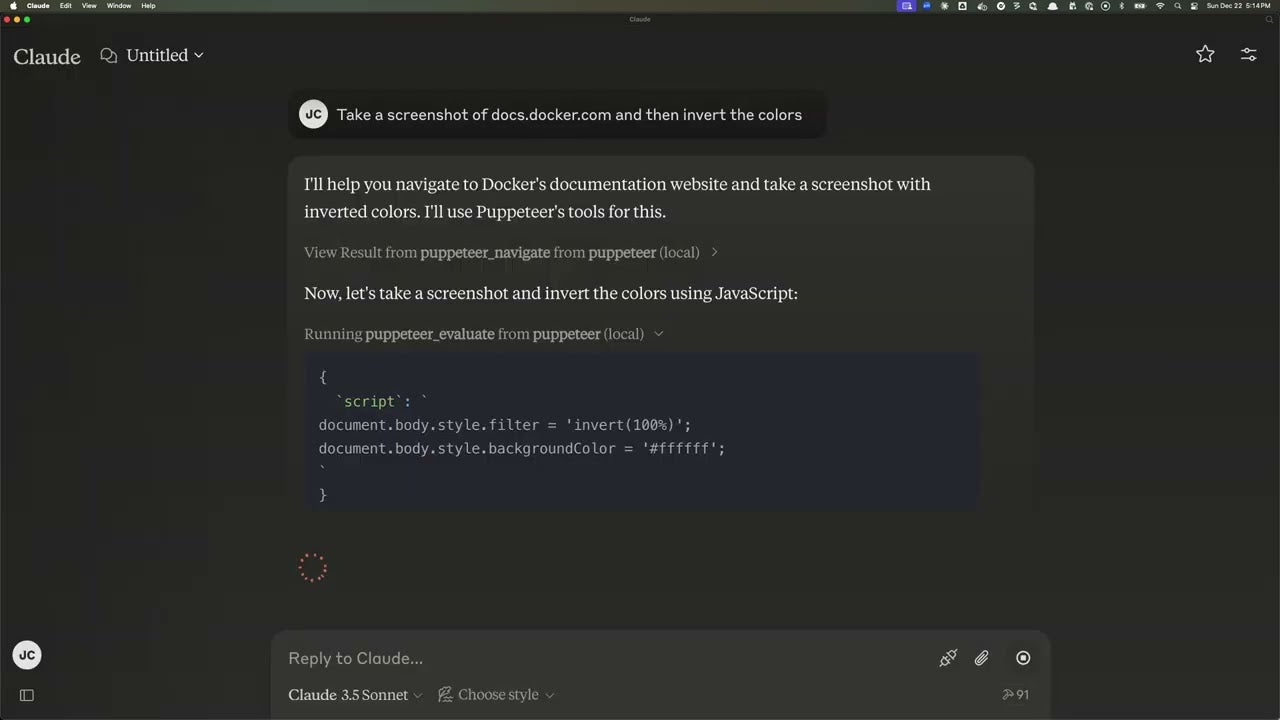

Using Puppeteer to take and modify screenshots using Docker

This demo will use the Puppeteer MCP server to take a screenshot of a website and invert the colors using Claude Desktop and Docker Desktop. Doing this without a containerized environment requires quite a bit of setup, but is fairly trivial using containers.

- Update your claude_desktop_config.json file to include the following configuration:

For example, extending Claude Desktop to use puppeteer for browser automation and web scraping requires the following entry (which is fully documented here):

{

"mcpServers": {

"puppeteer": {

"command": "docker",

"args": ["run", "-i", "--rm", "--init", "-e", "DOCKER_CONTAINER=true", "mcp/puppeteer"]