Behind the scenes: How we designed Docker Model Runner and what’s next

The last few years have made it clear that AI models will continue to be a fundamental component of many applications. The catch is that they’re also a fundamentally different type of component, with complex software and hardware requirements that don’t (yet) fit neatly into the constraints of container-oriented development lifecycles and architectures. To help address this problem, Docker launched the Docker Model Runner with Docker Desktop 4.40. Since then, we’ve been working aggressively to expand Docker Model Runner with additional OS and hardware support, deeper integration with popular Docker tools, and improvements to both performance and usability.

For those interested in Docker Model Runner and its future, we offer a behind-the-scenes look at its design, development, and roadmap.

Note: Docker Model Runner is really two components: the model runner and the model distribution specification. In this article, we’ll be covering the former, but be sure to check out the companion blog post by Emily Casey for the equally important distribution side of the story.

Design goals

Docker Model Runner’s primary design goal was to allow users to run AI models locally and to access them from both containers and host processes. While that’s simple enough to articulate, it still leaves an enormous design space in which to find a solution. Fortunately, we had some additional constraints: we were a small engineering team, and we had some ambitious timelines. Most importantly, we didn’t want to compromise on UX, even if we couldn’t deliver it all at once. In the end, this motivated design decisions that have so far allowed us to deliver a viable solution while leaving plenty of room for future improvement.

Multiple backends

One thing we knew early on was that we weren’t going to write our own inference engine (Docker’s wheelhouse is containerized development, not low-level inference engines). We’re also big proponents of open-source, and there were just so many great existing solutions! There’s llama.cpp, vLLM, MLX, ONNX, and PyTorch, just to name a few.

Of course, being spoiled for choice can also be a curse — which to choose? The obvious answer was: as many as possible, but not all at once.

We decided to go with llama.cpp for our initial implementation, but we intentionally designed our APIs with an additional, optional path component (the {name} in /engines/{name}) to allow users to take advantage of multiple future backends. We also designed interfaces and stubbed out implementations for other backends to enforce good development hygiene and to avoid becoming tethered to one “initial” implementation.

OpenAI API compatibility

The second design choice we had to make was how to expose inference to consumers in containers. While there was also a fair amount of choice in the inference API space, we found that the OpenAI API standard seemed to offer the best initial tooling compatibility. We were also motivated by the fact that several teams inside Docker were already using this API for various real-world products. While we may support additional APIs in the future, we’ve so far found that this API surface is sufficient for most applications. One gap that we know exists is full compatibility with this API surface, which is something we’re working on iteratively.

This decision also drove our choice of llama.cpp as our initial backend. The llama.cpp project already offered a turnkey option for OpenAI API compatibility through its server implementation. While we had to make some small modifications (e.g. Unix domain socket support), this offered us the fastest path to a solution. We’ve also started contributing these small patches upstream, and we hope to expand our contributions to these projects in the future.

First-class citizenship for models in the Docker API

While the OpenAI API standard was the most ubiquitous option amongst existing tooling, we also knew that we wanted models to be first-class citizens in the Docker Engine API. Models have a fundamentally different execution lifecycle than the processes that typically make up the ENTRYPOINTs of containers, and thus, they don’t fit well under the standard /containers endpoints of the Docker Engine API. However, much like containers, images, networks, and volumes, models are such a fundamental component that they really deserve their own API resource type. This motivated the addition of a set of /models endpoints, closely modeled after the /images endpoints, but separate for reasons that are best discussed in the distribution blog post.

GPU acceleration

Another critical design goal was support for GPU acceleration of inference operations. Even the smallest useful models are extremely computationally demanding, while more sophisticated models (such as those with tool-calling capabilities) would be a stretch to fit onto local hardware at all. GPU support was going to be non-negotiable for a useful experience.

Unfortunately, passing GPUs across the VM boundary in Docker Desktop, especially in a way that would be reliable across platforms and offer a usable computation API inside containers, was going to be either impossible or very flaky.

As a compromise, we decided to run inference operations outside of the Docker Desktop VM and simply proxy API calls from the VM to the host. While there are some risks with this approach, we are working on initiatives to mitigate these with containerd-hosted sandboxing on macOS and Windows. Moreover, with Docker-provided models and application-provided prompts, the risk is somewhat lower, especially given that inference consists primarily of numerical operations. We assess the risk in Docker Desktop to be about on par with accessing host-side services via host.docker.internal (something already enabled by default).

However, agents that drive tool usage with model output can cause more significant side effects, and that’s something we needed to address. Fortunately, using the Docker MCP Toolkit, we’re able to perform tool invocation inside ephemeral containers, offering reliable encapsulation of the side effects that models might drive. This hybrid approach allows us to offer the best possible local performance with relative peace of mind when using tools.

Outside the context of Docker Desktop, for example, in Docker CE, we’re in a significantly better position due to the lack of a VM boundary (or at least a very transparent VM boundary in the case of a hypervisor) between the host hardware and containers. When running in standalone mode in Docker CE, the Docker Model Runner will have direct access to host hardware (e.g. via the NVIDIA Container Toolkit) and will run inference operations within a container.

Modularity, iteration, and open-sourcing

As previously mentioned, the Docker Model Runner team is relatively small, which meant that we couldn’t rely on a monolithic architecture if we wanted to effectively parallelize the development work for Docker Model Runner. Moreover, we had an early and overarching directive: open-source as much as possible.

We decided on three high-level components around which we could organize development work: the model runner, the model distribution tooling, and the model CLI plugin.

Breaking up these components allowed us to divide work more effectively, iterate faster, and define clean API boundaries between different concerns. While there have been some tricky dependency hurdles (in particular when integrating with closed-source components), we’ve found that the modular approach has facilitated faster incremental changes and support for new platforms.

The High-Level Architecture

At a high level, the Docker Model Runner architecture is composed of the three components mentioned above (the runner, the distribution code, and the CLI), but there are also some interesting sub-components within each:

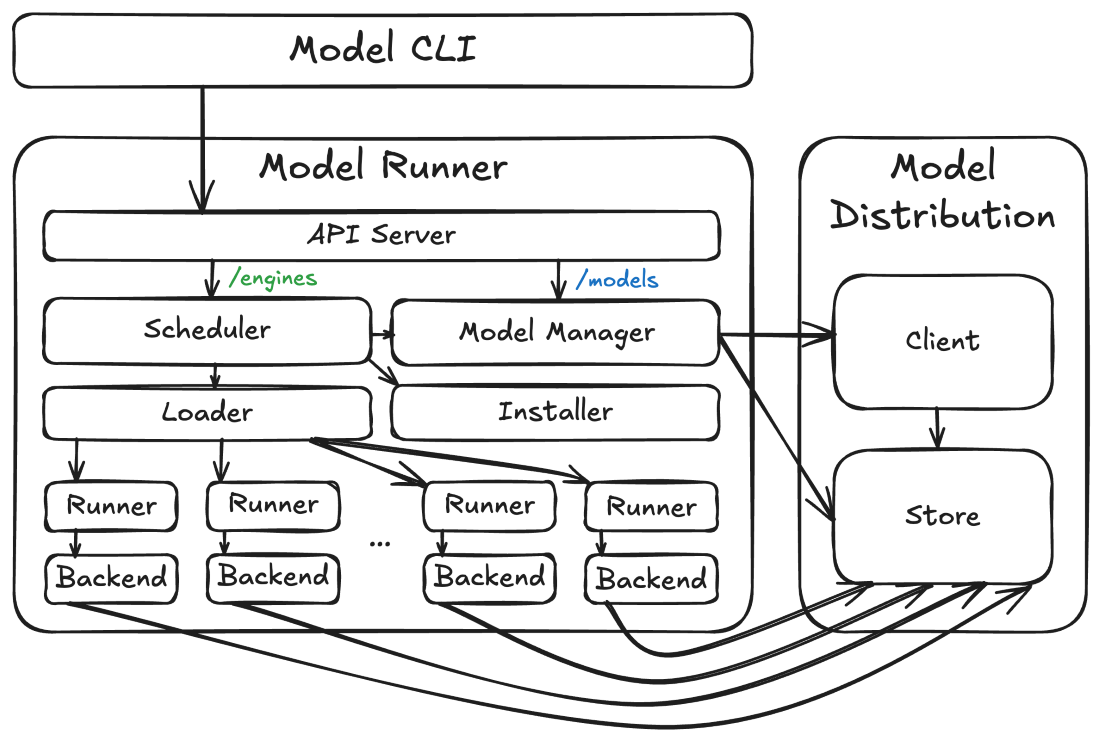

Figure 1: Docker Model Runner high-level architecture

How these components are packaged and hosted (and how they interact) also depends on the platform where they’re deployed. In each case it looks slightly different. Sometimes they run on the host, sometimes they run in a VM, sometimes they run in a container, but the overall architecture looks the same.

Model storage and client

The core architectural component is the model store. This component, provided by the model distribution code, is where the actual model tensor files are stored. These files are stored differently (and separately) from images because (1) they’re high-entropy and not particularly compressible and (2) the inference engine needs direct access to the files so that it can do things like mapping them into its virtual address space via mmap(). For more information, see the accompanying model distribution blog post.
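To make the mmap() point concrete, here is a minimal sketch using Python's standard mmap module. It is not how the inference engine is implemented; it only shows the underlying OS facility. The temporary file and its bytes are made-up stand-ins for a model tensor file.

```python
import mmap
import os
import tempfile


def read_header_via_mmap(path: str, length: int) -> bytes:
    """Map a file read-only and return its first `length` bytes.

    The OS faults pages in lazily, so mapping a large file does not copy
    the whole file into the process up front.
    """
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mapped:
            return mapped[:length]


# Hypothetical stand-in for a model file; the contents are invented.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"DEMO" + bytes(1024))
    demo_path = tmp.name

header = read_header_via_mmap(demo_path, 4)
os.remove(demo_path)
print(header)
```

Mapping only works on a plain file that the process can open directly, which is one reason the store keeps model files outside of compressed image layers.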

The model distribution code also provides the model distribution client. This component performs operations (such as pulling models) using the model distribution protocol against OCI registries.

Model runner

Built on top of the model store is the model runner. The model runner maps inbound inference API requests (e.g. /v1/chat/completions or /v1/embeddings requests) to processes hosting pairs of inference engines and models. It includes scheduler, loader, and runner components that coordinate the loading of models in and out of memory so that concurrent requests can be serviced, even if models can’t be loaded simultaneously (e.g. due to resource constraints). This makes the execution lifecycle of models different from that of containers, with engines and models operating as ephemeral processes (mostly hidden from users) that can be terminated and unloaded from memory as necessary (or when idle). A different backend process is run for each combination of engine (e.g. llama.cpp) and model (e.g. ai/qwen3:8B-Q4_K_M) as required by inference API requests (though multiple requests targeting the same pair will reuse the same runner and backend processes if possible).
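The reuse behavior described above can be sketched as a toy pool keyed by (engine, model). This is an illustration only: the class names are hypothetical, real backends are OS processes rather than Python objects, and the least-recently-used eviction policy is an assumption, since the actual scheduler's policy is not described here.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class Backend:
    """Stand-in for one backend process hosting an (engine, model) pair."""
    engine: str
    model: str
    requests_served: int = 0


@dataclass
class RunnerPool:
    """Toy pool: one backend per (engine, model), evicting when full."""
    max_loaded: int
    loaded: Dict[Tuple[str, str], Backend] = field(default_factory=dict)

    def acquire(self, engine: str, model: str) -> Backend:
        key = (engine, model)
        backend = self.loaded.pop(key, None)
        if backend is None:
            if len(self.loaded) >= self.max_loaded:
                # Assumed policy: evict the least recently used pair,
                # which is the first key in insertion order.
                oldest = next(iter(self.loaded))
                del self.loaded[oldest]
            backend = Backend(engine, model)
        # Re-insert so the most recently used pair sits at the end.
        self.loaded[key] = backend
        backend.requests_served += 1
        return backend


pool = RunnerPool(max_loaded=1)
first = pool.acquire("llama.cpp", "ai/qwen3:8B-Q4_K_M")
second = pool.acquire("llama.cpp", "ai/qwen3:8B-Q4_K_M")  # reuses the backend
third = pool.acquire("llama.cpp", "ai/other-model")  # hypothetical model name
print(first is second, len(pool.loaded))
```

With room for only one loaded pair, the third call unloads the first model before starting a new backend, mirroring the "can't be loaded simultaneously" case.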

The runner also includes an installer service that can dynamically download backend binaries and libraries, allowing users to selectively enable features (such as CUDA support) that might require downloading hundreds of MBs of dependencies.

Finally, the model runner serves as the central server for all Docker Model Runner APIs, including the /models APIs (which it routes to the model distribution code) and the /engines APIs (which it routes to its scheduler). This API server will always opt to hold in-flight requests until the resources (primarily RAM or VRAM) are available to service them, rather than returning something like a 503 response. This is critical for a number of usage patterns, such as multiple agents running with different models or concurrent requests for both embedding and completion.
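The "hold the request instead of returning a 503" behavior can be sketched with an asyncio condition variable. This is a simplified model, not the runner's actual code: the MemoryBudget class, the request names, and the memory figures are all hypothetical.

```python
import asyncio


class MemoryBudget:
    """Toy admission gate: callers wait until enough memory is free."""

    def __init__(self, total_mb: int) -> None:
        self.free_mb = total_mb
        self._cond = asyncio.Condition()

    async def reserve(self, mb: int) -> None:
        async with self._cond:
            # Block (instead of failing fast) until the request fits.
            await self._cond.wait_for(lambda: self.free_mb >= mb)
            self.free_mb -= mb

    async def release(self, mb: int) -> None:
        async with self._cond:
            self.free_mb += mb
            self._cond.notify_all()


async def handle(budget: MemoryBudget, name: str, mb: int, log: list) -> None:
    await budget.reserve(mb)
    log.append(f"start {name}")
    await asyncio.sleep(0.01)  # stand-in for inference work
    log.append(f"done {name}")
    await budget.release(mb)


async def main() -> list:
    budget = MemoryBudget(total_mb=8000)
    log: list = []
    # Two models that cannot fit at once; the second request simply waits.
    await asyncio.gather(
        handle(budget, "chat", 6000, log),
        handle(budget, "embed", 5000, log),
    )
    return log


events = asyncio.run(main())
print(events)
```

Both requests complete; the embedding request is delayed rather than rejected, which is the property that matters for agents issuing concurrent calls against different models.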

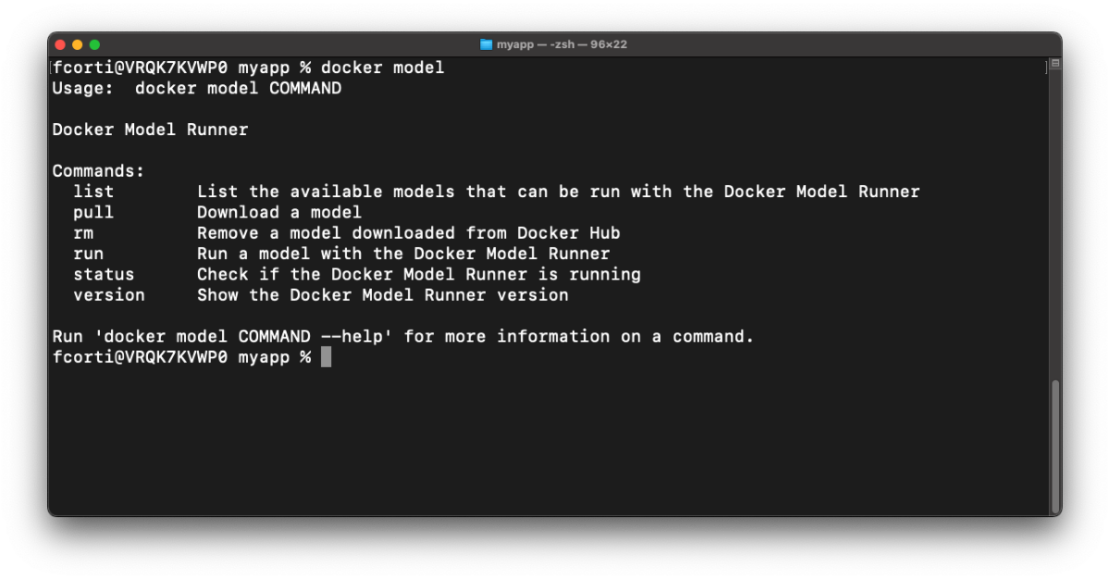

Model CLI

The primary user-facing component of the Docker Model Runner architecture is the model CLI. This component is a standard Docker CLI plugin that offers an interface very similar to the docker image command. While the lifecycle of model execution is different from that of containers, the concepts (such as pushing, pulling, and running) should be familiar enough to existing Docker users.

The model CLI communicates with the model runner’s APIs to perform almost all of its operations (though the transport for that communication varies by platform). The model CLI is context-aware, allowing it to determine if it’s talking to a Docker Desktop model runner, Docker CE model runner, or a model runner on some custom platform. Because we’re using the standard Docker CLI plugin framework, we get all of the standard Docker Context functionality for free, making this detection much easier.

API design and routing

As previously mentioned, the Docker Model Runner comprises two sets of APIs: the Docker-style APIs and the OpenAI-compatible APIs. The Docker-style APIs (modeled after the /images APIs) include the following endpoints:

- POST /models/create (Model pulling)

- GET /models (Model listing)

- GET /models/{namespace}/{name} (Model metadata)

- DELETE /models/{namespace}/{name} (Model deletion)

The bodies for these requests look very similar to their image analogs. There’s no documentation at the moment, but you can get a glimpse of the format by looking at their corresponding Go types.

In contrast, the OpenAI endpoints follow a different but still RESTful convention:

- GET /engines/{engine}/v1/models (OpenAI-format model listing)

- GET /engines/{engine}/v1/models/{namespace}/{name} (OpenAI-format model metadata)

- POST /engines/{engine}/v1/chat/completions (Chat completions)

- POST /engines/{engine}/v1/completions (Completions (legacy endpoint))

- POST /engines/{engine}/v1/embeddings (Create embeddings)

At this point in time, only one {engine} value is supported (llama.cpp), and it can also be omitted to use the default (llama.cpp) engine.
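As a small illustration of the optional {engine} path component, the following sketch builds URLs with and without an explicit engine. The helper name engine_url is hypothetical; the base address is the default host TCP endpoint (localhost:12434) that this article describes.

```python
from typing import Optional


def engine_url(base: str, path: str, engine: Optional[str] = None) -> str:
    """Build an OpenAI-style URL, with or without an explicit engine segment."""
    parts = [base.rstrip("/"), "engines"]
    if engine:
        parts.append(engine)
    parts.append("v1")
    parts.append(path.strip("/"))
    return "/".join(parts)


default_url = engine_url("http://localhost:12434", "chat/completions")
explicit_url = engine_url("http://localhost:12434", "chat/completions", "llama.cpp")
print(default_url)
print(explicit_url)
```

Both URLs currently reach the same llama.cpp backend; the explicit form is what leaves room for additional engines later.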

We make these APIs available on several different endpoints:

First, in Docker Desktop, they’re available on the Docker socket (/var/run/docker.sock), both inside and outside containers. This is in service of our design goal of having models as a first-class citizen in the Docker Engine API. At the moment, these endpoints are prefixed with a /exp/vDD4.40 path (to avoid dependencies on APIs that may evolve during development), but we’ll likely remove this prefix in the next few releases since these APIs have now mostly stabilized and will evolve in a backward-compatible way.

Second, also in Docker Desktop, we make the APIs available on a special model-runner.docker.internal endpoint that’s accessible just from containers (though not currently from ECI containers, because we want to have inference sandboxing implemented first). This TCP-based endpoint exposes just the /models and /engines API endpoints (not the whole Docker API) and is designed to serve existing tooling (which likely can’t access APIs via a Unix domain socket). No /exp/vDD4.40 prefix is used in this case.

Finally, in both Docker Desktop and Docker CE, we make the /models and /engines API endpoints available on a host TCP endpoint (localhost:12434, by default, again without any /exp/vDD4.40 prefix). In Docker Desktop this is optional and not enabled by default. In Docker CE, it’s a critical component of how the API endpoints are accessed, because we currently lack the integration to add endpoints to Docker CE’s /var/run/docker.sock or to inject a custom model-runner.docker.internal hostname, so we advise using the standard 172.17.0.1 host gateway address to access this localhost-exposed port (e.g. setting your OpenAI API base URL to http://172.17.0.1:12434/engines/v1). Hopefully we’ll be able to unify this across Docker platforms in the near future (see our roadmap below).
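For tooling that only needs a base URL, a request against these TCP endpoints might look like the sketch below. It builds the request without sending it, so it runs without a model runner present. The helper name chat_request and the prompt are hypothetical; the base URL and model tag are the examples given in this article, and the payload follows the general OpenAI chat completions shape.

```python
import json
import urllib.request


def chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Base URL suggested above for containers on Docker CE.
req = chat_request(
    "http://172.17.0.1:12434/engines/v1",
    "ai/qwen3:8B-Q4_K_M",
    "Say hello in one sentence.",
)
print(req.get_method(), req.full_url)

# To actually send it (requires a running model runner and a pulled model):
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Swapping the base URL is the only change needed to move between the endpoints listed above.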

First up: Docker Desktop

The natural first step for Docker Model Runner was integration into Docker Desktop. In Docker Desktop, we have more direct control over integration with the Docker Engine, as well as existing processes that we can use to host the model runner components. In this case, the model runner and model distribution components live in the Docker Desktop host backend process (the com.docker.backend process you may have seen running) and we use special middleware and networking magic to route requests on /var/run/docker.sock and model-runner.docker.internal to the model runner’s API server. Since the individual inference backend processes run as subprocesses of com.docker.backend, there’s no risk of a crash in Docker Desktop if, for example, an inference backend is killed by an Out Of Memory (OOM) error.
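For clients that can speak HTTP over a Unix domain socket, access through /var/run/docker.sock might look like the following sketch. It assumes the /exp/vDD4.40 prefix described earlier is still in place (it may be removed in later releases); the class and function names are hypothetical, and list_models only works where Docker Desktop with Model Runner is running.

```python
import http.client
import socket

EXPERIMENTAL_PREFIX = "/exp/vDD4.40"  # prefix named in this article


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that dials a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str) -> None:
        super().__init__("localhost")  # only used for the Host header
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.connect(self.socket_path)
        self.sock = sock


def socket_path_for(endpoint: str) -> str:
    """Prefix a model runner endpoint for use on the Docker socket."""
    return EXPERIMENTAL_PREFIX + "/" + endpoint.lstrip("/")


def list_models(socket_path: str = "/var/run/docker.sock") -> bytes:
    """GET the model list over the Docker socket (not called in this demo)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", socket_path_for("/models"))
        return conn.getresponse().read()
    finally:
        conn.close()


print(socket_path_for("/models"))
```

Only the path helper is exercised here; calling list_models() performs the actual request.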

We started with support for macOS on Apple Silicon, because it provided the most uniform platform for developing the model runner functionality, but along the way we implemented most of the functionality so that it would build and test on all Docker Desktop platforms. This made it significantly easier to port to Windows on AMD64 and ARM64 platforms, as well as the GPU variations that we found there.

The one complexity with Windows was the larger size of the supporting library dependencies for the GPU-based backends. It wouldn’t have been feasible (or tolerated) to add another 500 MB – 1 GB to the Docker Desktop for Windows installer, so we decided to default to a CPU-based backend in Docker Desktop for Windows with optional support for the GPU backend. This was the primary motivating factor for the dynamic installer component of the model runner (in addition to our desire for incremental updates to different backends).

This all sounds like a very well-planned exercise, and we did indeed start with a three-component design and strictly enforced API boundaries, but in truth we started with the model runner service code as a sub-package of the Docker Desktop source code. This made it much easier to iterate quickly, especially as we were exploring the architecture for the different services. Fortunately, by sticking to a relatively strict isolation policy for the code, and enforcing clean dependencies through APIs and interfaces, we were able to easily extract the code (kudos to the excellent git-filter-repo tool) into a separate repository for the purposes of open-sourcing.

Next stop: Docker CE

Aside from Docker’s penchant for open-sourcing, one of the main reasons that we wanted to make the Docker Model Runner source code publicly available was to support integration into Docker CE. Our goal was to package the docker model command in the same way as docker buildx and docker compose.

The trick with Docker CE is that we wanted to ship Docker Model Runner as a “vanilla” Docker CLI plugin (i.e. without any special privileges or API access), which meant that we didn’t have a backend process that could host the model runner service. However, in the Docker CE case, the boundary between host hardware and container processes is much less disruptive, meaning that we could actually run Docker Model Runner in a container and simply make any accelerator hardware available to it directly. So, much like a standalone BuildKit builder container, we run the Docker Model Runner as a standalone container in Docker CE, with a special named volume for model storage (meaning you can uninstall the runner without having to re-pull models). This “installation” is performed by the model CLI automatically (and when necessary) by pulling the docker/model-runner image and starting a container. Explicit configuration for the runner can also be specified using the docker model install-runner command. If you want, you can also remove the model runner (and optionally the model storage) using docker model uninstall-runner.

This unfortunately leads to one small compromise with the UX: we don’t currently support the model runner APIs on /var/run/docker.sock or on the special model-runner.docker.internal URL. Instead, the model runner API server listens on the host system’s loopback interface at localhost:12434 (by default), which is available inside most containers at 172.17.0.1:12434. If desired, users can also make this available on model-runner.docker.internal:12434 by utilizing something like --add-host=model-runner.docker.internal:host-gateway when running docker run or docker create commands. This can also be achieved by using the extra_hosts key in a Compose YAML file. We have plans to make this more ergonomic in future releases.
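An application running in a Docker CE container could pick its base URL along these lines. This is a sketch under assumptions: the helper names are hypothetical, and it presumes the default port 12434 and the default bridge gateway address mentioned above.

```python
import socket
from typing import Callable

RUNNER_HOST = "model-runner.docker.internal"
FALLBACK_HOST = "172.17.0.1"  # default bridge gateway named in the text
PORT = 12434


def resolves(host: str) -> bool:
    """Return True if the hostname resolves inside this container."""
    try:
        socket.gethostbyname(host)
        return True
    except OSError:
        return False


def pick_base_url(can_resolve: Callable[[str], bool] = resolves) -> str:
    """Prefer the friendly hostname when --add-host or extra_hosts set it up."""
    host = RUNNER_HOST if can_resolve(RUNNER_HOST) else FALLBACK_HOST
    return f"http://{host}:{PORT}/engines/v1"


# The resolver is injected so both branches can be shown without Docker.
without_alias = pick_base_url(lambda host: False)
with_alias = pick_base_url(lambda host: True)
print(without_alias)
print(with_alias)
```

Calling pick_base_url() with no argument performs a real DNS lookup, so the same code works whether or not the host alias was configured.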

The road ahead…

The status quo is Docker Model Runner support in Docker Desktop on macOS and Windows and support for Docker CE on Linux (including WSL2), but that’s definitely not the end of the story. Over the next few months, we have a number of initiatives planned that we think will reshape the user experience, performance, and security of Docker Model Runner.

Additional GUI and CLI functionality

The most visible functionality coming out over the next few months will be in the model CLI and the “Models” tab in the Docker Desktop dashboard. Expect to see new commands (such as df, ps, and unload) that will provide more direct support for monitoring and controlling model execution. Also, expect to see new and expanded layouts and functionality in the Models tab.

Expanded OpenAI API support

A less-visible but equally important aspect of the Docker Model Runner user experience is our compatibility with the OpenAI API. There are dozens of endpoints and parameters to support (and we already support many), so we will work to expand API surface compatibility with a focus on practical use cases and prioritization of compatibility with existing tools.

containerd and Moby integration

One of the longer-term initiatives that we’re looking at is integration with containerd. containerd already provides a modular runtime system that allows for task execution coordinated with storage. We believe this is the right way forward and that it will allow us to better codify the relationship between model storage, model execution, and model execution sandboxing.

In combination with the containerd work, we would also like tighter integration with the Moby project. While our existing Docker CE integration offers a viable and performant solution, we believe that better ergonomics could be achieved with more direct integration. In particular, niceties like support for model-runner.docker.internal DNS resolution in Docker CE are on our radar. Perhaps the biggest win from this tighter integration would be to expose Docker Model Runner APIs on the Docker socket and to include the API endpoints (e.g. /models) in the official Docker Engine API documentation.

Kubernetes

One of the product goals for Docker Model Runner was a consistent experience from development inner loop to production, and Kubernetes is inarguably a part of that path. The existing Docker Model Runner images that we’re using for Docker CE will also work within a Kubernetes cluster, and we’re currently developing instructions to set up a Docker Model Runner instance in a Kubernetes cluster. The big difference with Kubernetes is the variety of cluster and application architectures in use, so we’ll likely end up with different “recipes” for how to configure the Docker Model Runner in different scenarios.

vLLM

One of the things we’ve heard from a number of customers is that vLLM forms a core component of their production stack. This was also the first alternate backend that we stubbed out in the model runner repository, and the time has come to start poking at an implementation.

Even more to come…

Finally, there are some bits that we just can’t talk about yet, but they will fundamentally shift the way that developers interact with models. Be sure to tune in to Docker’s sessions at WeAreDevelopers from July 9–11 for some exciting announcements around AI-related initiatives at Docker.

Learn more

- Explore the story behind our model distribution specification

- Read our quickstart guide to Docker Model Runner.

- Find documentation for Model Runner.

- Subscribe to the Docker Navigator Newsletter.

- New to Docker? Create an account.

- Have questions? The Docker community is here to help.

Docker Desktop 4.42: Native IPv6, Built-In MCP, and Better Model Packaging

Docker Desktop 4.42 introduces powerful new capabilities that enhance network flexibility, improve security, and deepen AI toolchain integration, all while reducing setup friction. With native IPv6 support, a fully integrated MCP Toolkit, and major upgrades to Docker Model Runner and our AI agent Gordon, this release continues our commitment to helping developers move faster, ship smarter, and build securely across any environment. Whether you’re managing enterprise-grade networks or experimenting with agentic workflows, Docker Desktop 4.42 brings the tools you need right into your development workflows.

IPv6 support

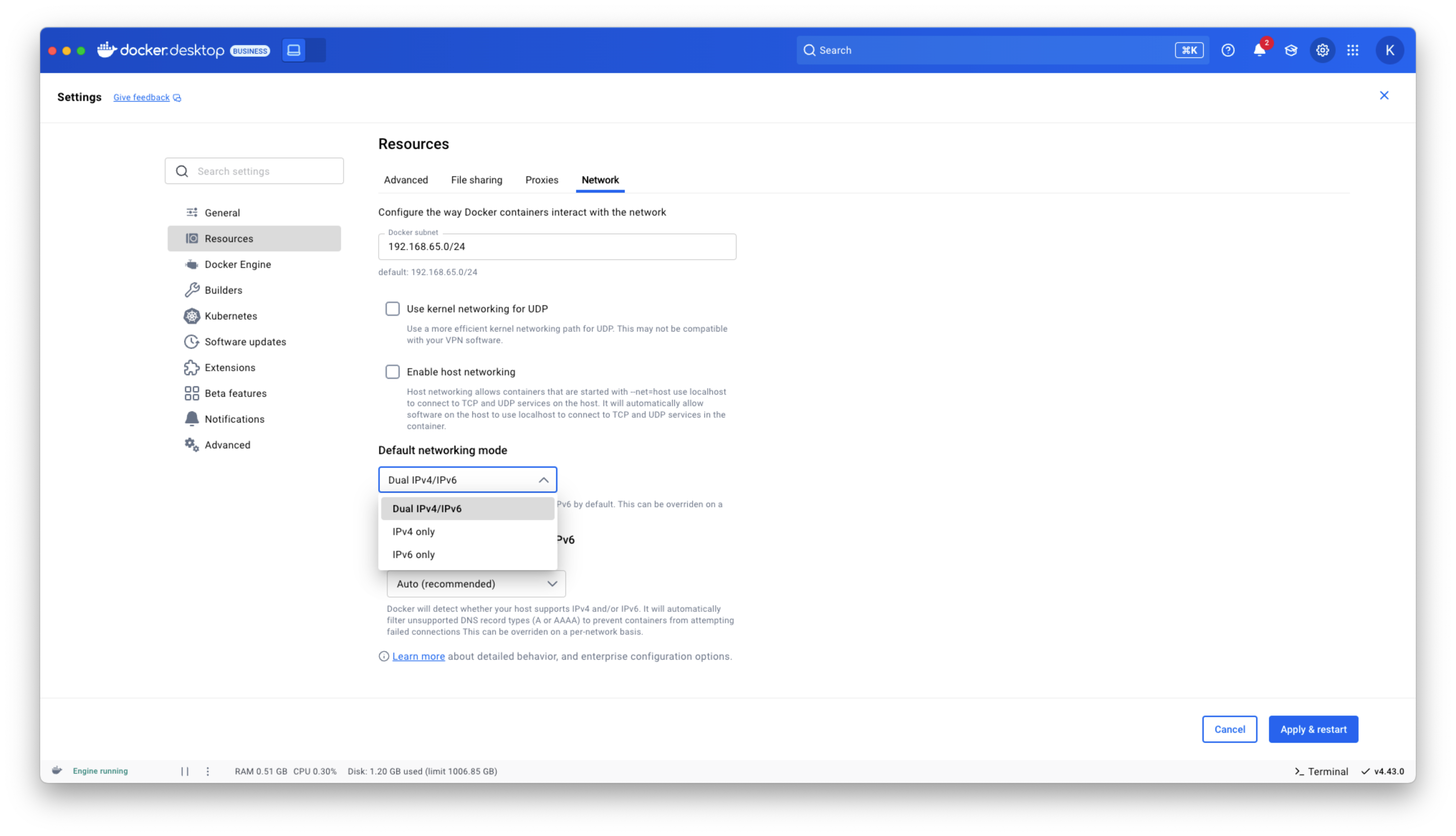

Docker Desktop now provides IPv6 networking capabilities with customization options to better support diverse network environments. You can now choose between dual IPv4/IPv6 (default), IPv4-only, or IPv6-only networking modes to align with your organization’s network requirements. The new intelligent DNS resolution behavior automatically detects your host’s network stack and filters unsupported record types, preventing connectivity timeouts in IPv4-only or IPv6-only environments.
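The record filtering idea can be illustrated with a short sketch. This is not Docker Desktop's implementation; the mode names and the filter_records helper are hypothetical, and the addresses come from the reserved documentation ranges.

```python
from typing import List, Tuple

Record = Tuple[str, str]  # (record type, address)

# Record types each networking mode can use; mode names are illustrative.
ALLOWED = {
    "dual": {"A", "AAAA"},
    "ipv4only": {"A"},
    "ipv6only": {"AAAA"},
}


def filter_records(records: List[Record], mode: str) -> List[Record]:
    """Drop DNS answers whose address family the stack cannot reach."""
    allowed = ALLOWED[mode]
    return [r for r in records if r[0] in allowed]


answers = [("A", "192.0.2.10"), ("AAAA", "2001:db8::10")]
v4_only = filter_records(answers, "ipv4only")
v6_only = filter_records(answers, "ipv6only")
print(v4_only)
print(v6_only)
```

Filtering at resolution time means a client never attempts a connection over an address family the host cannot route, which is where the timeouts would otherwise come from.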

These IPv6 settings are available in the Docker Desktop Settings > Resources > Network section and can be enforced across teams using Settings Management, making Docker Desktop more reliable in complex enterprise network configurations, including IPv6-only deployments.

Figure 1: Docker Desktop IPv6 settings

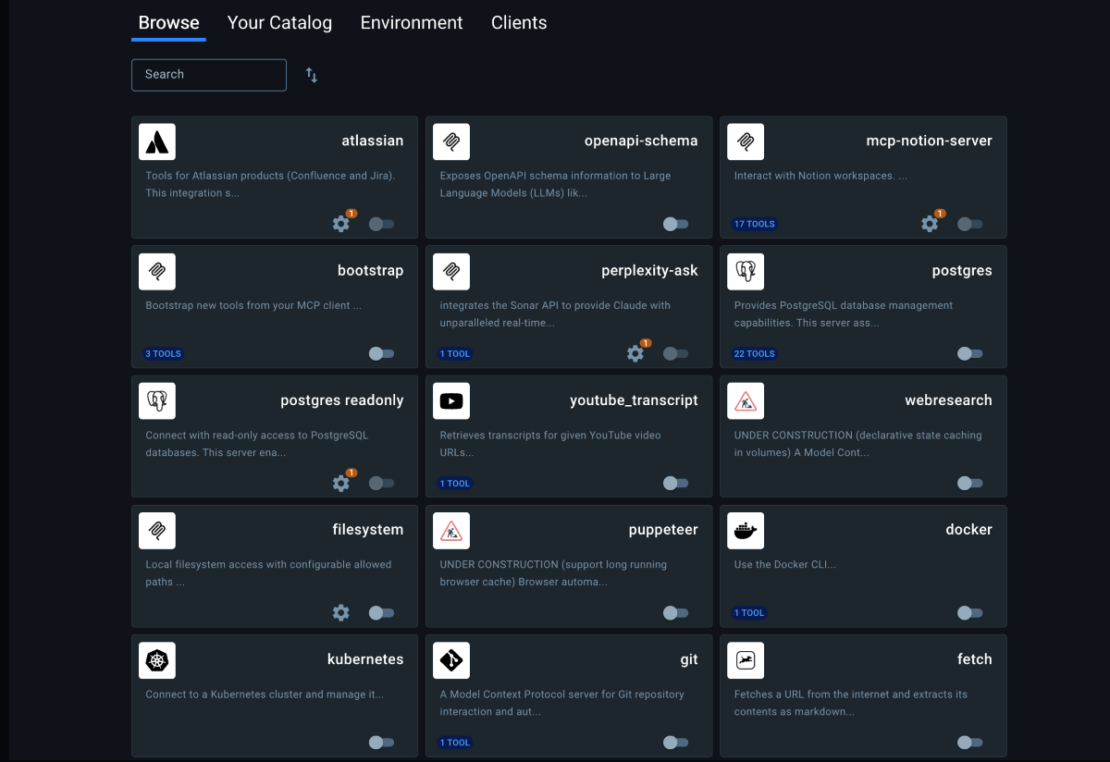

Docker MCP Toolkit integrated into Docker Desktop

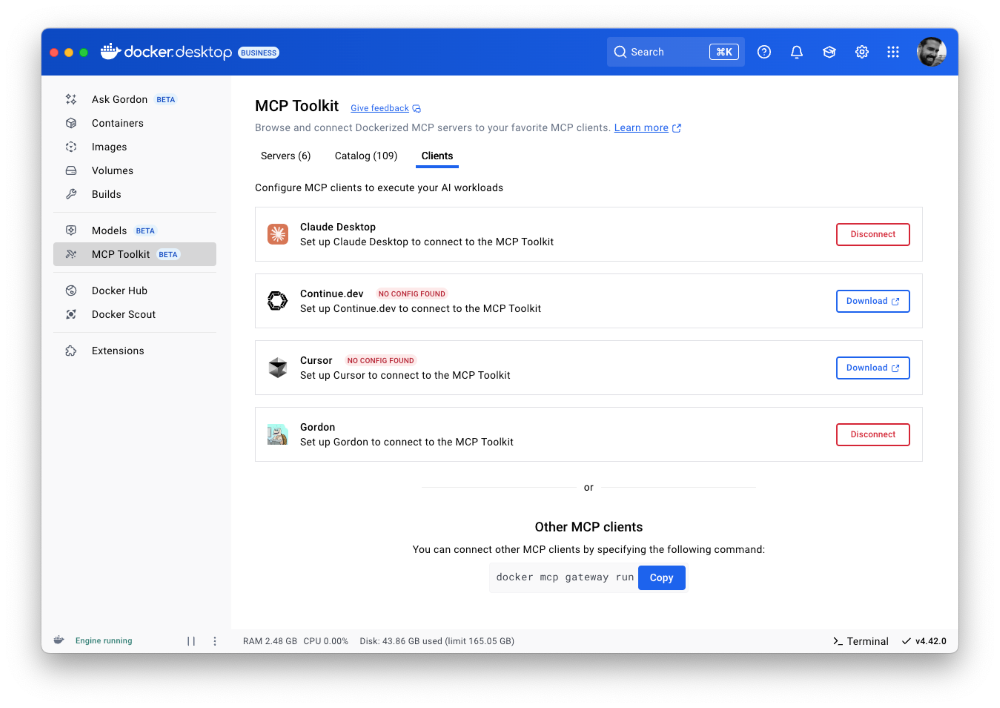

Last month, we launched the Docker MCP Catalog and Toolkit to help developers easily discover MCP servers and securely connect them to their favorite clients and agentic apps. We’re humbled by the incredible support from the community. User growth is up by over 50%, and we’ve crossed 1 million pulls! Now, we’re excited to share that the MCP Toolkit is built right into Docker Desktop, no separate extension required.

You can now access more than 100 MCP servers, including GitHub, MongoDB, Hashicorp, and more, directly from Docker Desktop – just enable the servers you need, configure them, and connect to clients like Claude Desktop, Cursor, Continue.dev, or Docker’s AI agent Gordon.

Unlike typical setups that run MCP servers via npx or uvx processes with broad access to the host system, Docker Desktop runs these servers inside isolated containers with well-defined security boundaries. All container images are cryptographically signed, with proper isolation of secrets and configuration data.

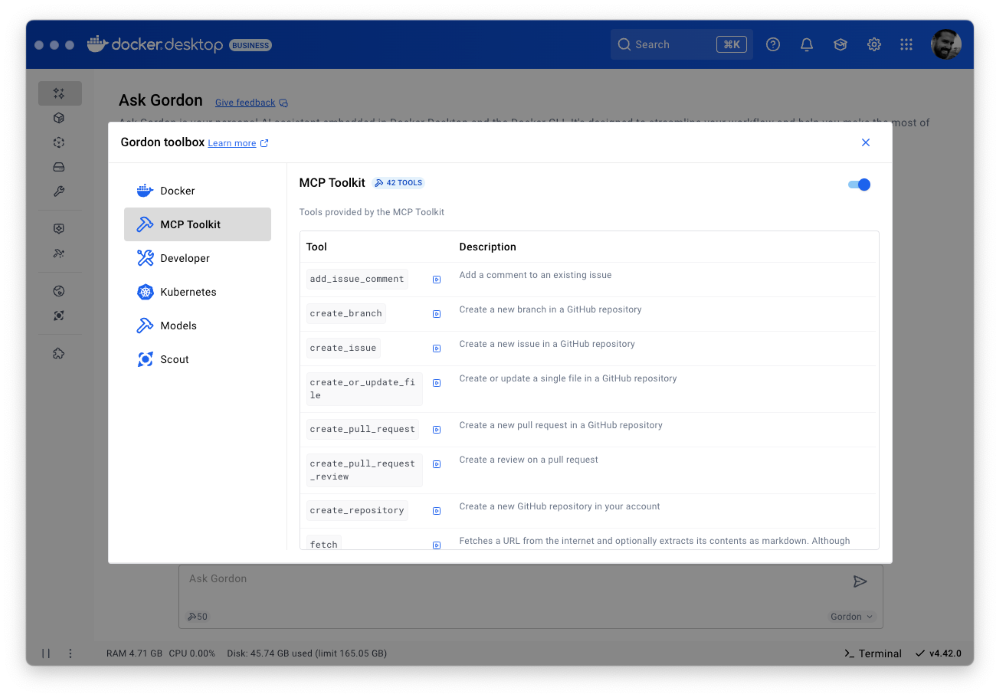

Figure 2: Docker MCP Toolkit is now integrated natively into Docker Desktop

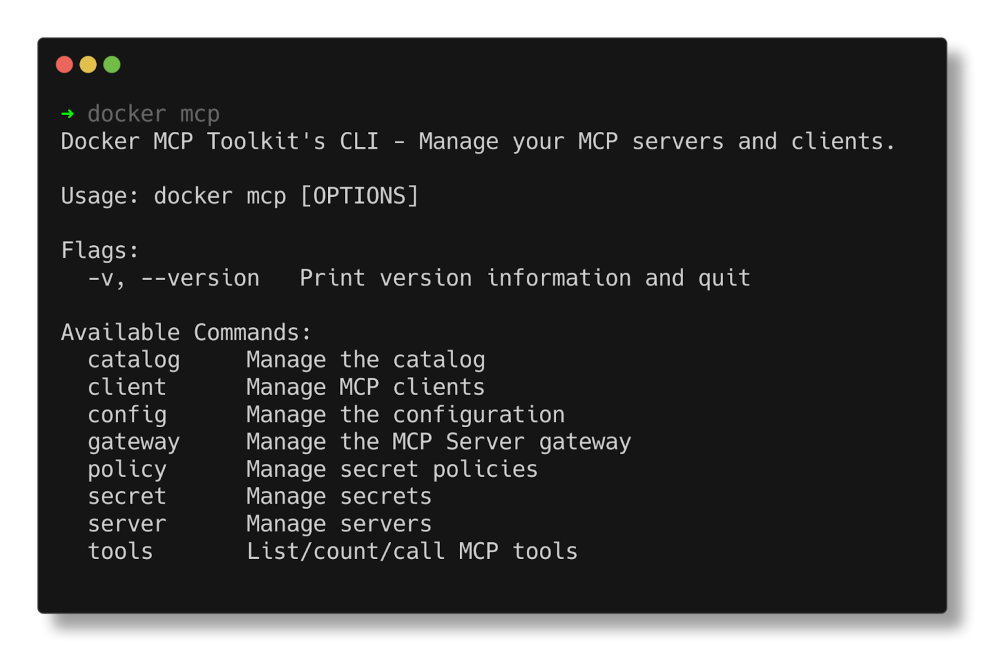

To meet developers where they are, we’re bringing Docker MCP support to the CLI, using the same command structure you’re already familiar with. With the new docker mcp commands, you can launch, configure, and manage MCP servers directly from the terminal. The CLI plugin offers comprehensive functionality, including catalog management, client connection setup, and secret management.

Figure 3: Docker MCP CLI commands.

Docker AI Agent Gordon Now Supports MCP Toolkit Integration

In this release, we’ve upgraded Gordon, Docker’s AI agent, with direct integration to the MCP Toolkit in Docker Desktop. To enable it, open Gordon, click the “Tools” button, and toggle on the “MCP” Toolkit option. Once activated, the MCP Toolkit tab will display tools available from any MCP servers you’ve configured.

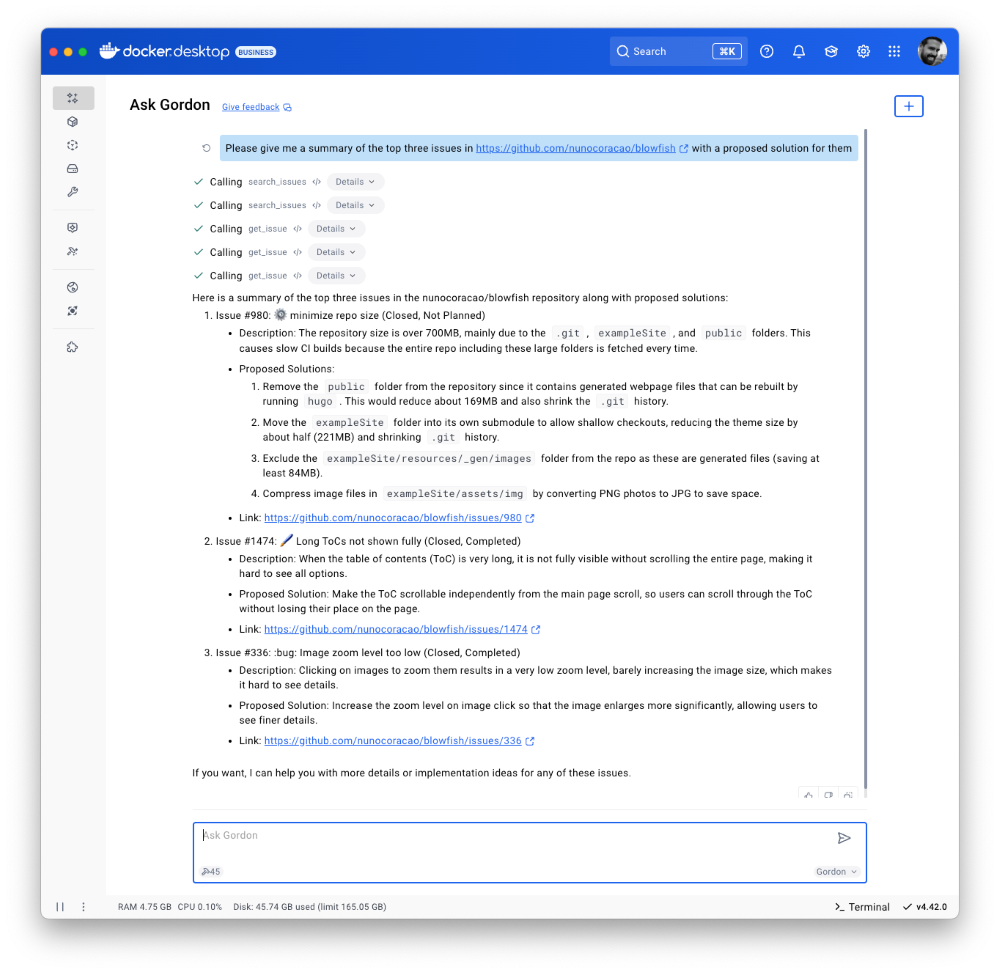

Figure 4: Docker’s AI Agent Gordon now integrates with Docker’s MCP Toolkit, bringing 100+ MCP servers

This integration gives you immediate access to 100+ MCP servers with no extra setup, letting you experiment with AI capabilities directly in your Docker workflow. Gordon now acts as a bridge between Docker’s native tooling and the broader AI ecosystem, letting you leverage specialized tools for everything from screenshot capture to data analysis and API interactions – all from a consistent, unified interface.

Figure 5: Docker’s AI Agent Gordon uses the GitHub MCP server to pull issues and suggest solutions.

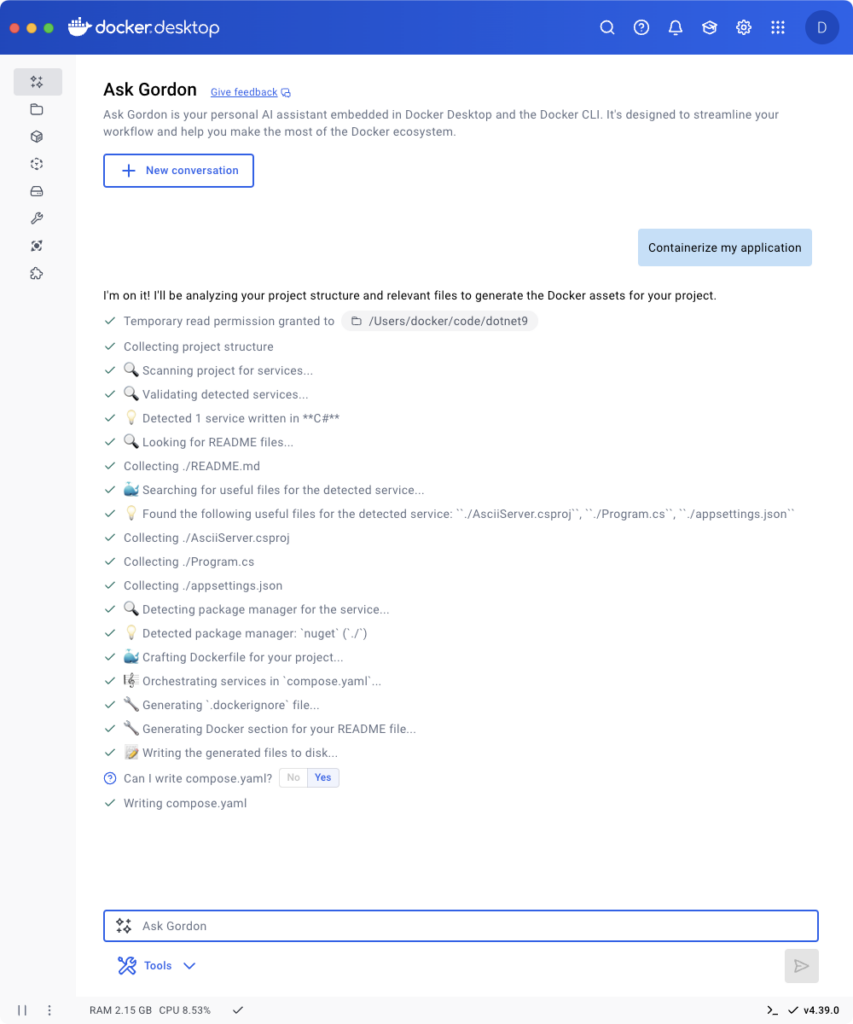

Finally, we’ve also improved the Dockerize feature with expanded support for Java, Kotlin, Gradle, and Maven projects. These improvements make it easier to containerize a wider range of applications with minimal configuration. With expanded containerization capabilities and integrated access to the MCP Toolkit, Gordon is more powerful than ever. It streamlines container workflows, reduces repetitive tasks, and gives you access to specialized tools, so you can stay focused on building, shipping, and running your applications efficiently.

Docker Model Runner adds Qualcomm support, Docker Engine Integration, and UX Upgrades

Staying true to our philosophy of giving developers more flexibility and meeting them where they are, the latest version of Docker Model Runner adds broader OS support, deeper integration with popular Docker tools, and improvements in both performance and usability.

In addition to supporting Apple Silicon and Windows systems with NVIDIA GPUs, Docker Model Runner now works on Windows devices with Qualcomm chipsets. Under the hood, we’ve upgraded our inference engine to use the latest version of llama.cpp, bringing significantly enhanced tool calling capabilities to your AI applications.

Docker Model Runner can now be installed directly in Docker Engine Community Edition across multiple Linux distributions supported by Docker Engine. This integration is particularly valuable for developers looking to incorporate AI capabilities into their CI/CD pipelines and automated testing workflows. To get started, check out our documentation for the setup guide.

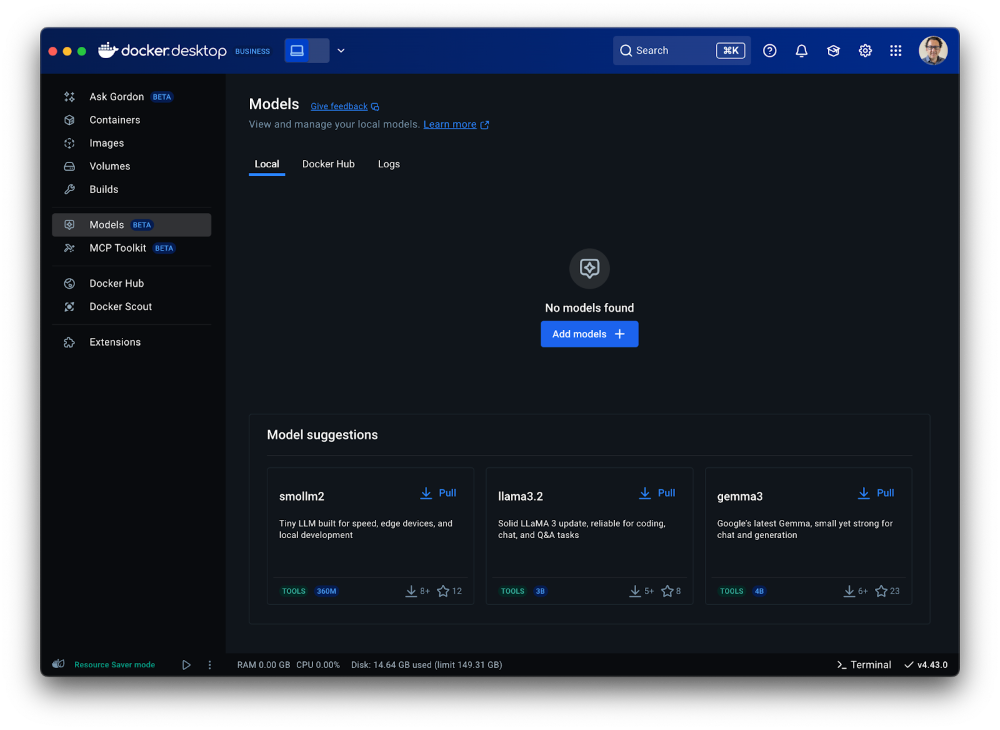

Get Up and Running with Models Faster

The Docker Model Runner user experience has been upgraded with expanded GUI functionality in Docker Desktop. All of these UI enhancements are designed to help you get started with Model Runner quickly and build applications faster. A dedicated interface now includes three new tabs that simplify model discovery and management and streamline troubleshooting workflows. Additionally, Docker Desktop’s updated GUI introduces a more intuitive onboarding experience with streamlined “two-click” actions.

After clicking on the Models tab, you’ll see three new sub-tabs. The first, labeled “Local,” displays a set of models in various sizes that you can quickly pull. Once a model is pulled, you can launch a chat interface to test and experiment with it immediately.

Figure 6: Access a set of models of various sizes to get started quickly in the Models menu of Docker Desktop

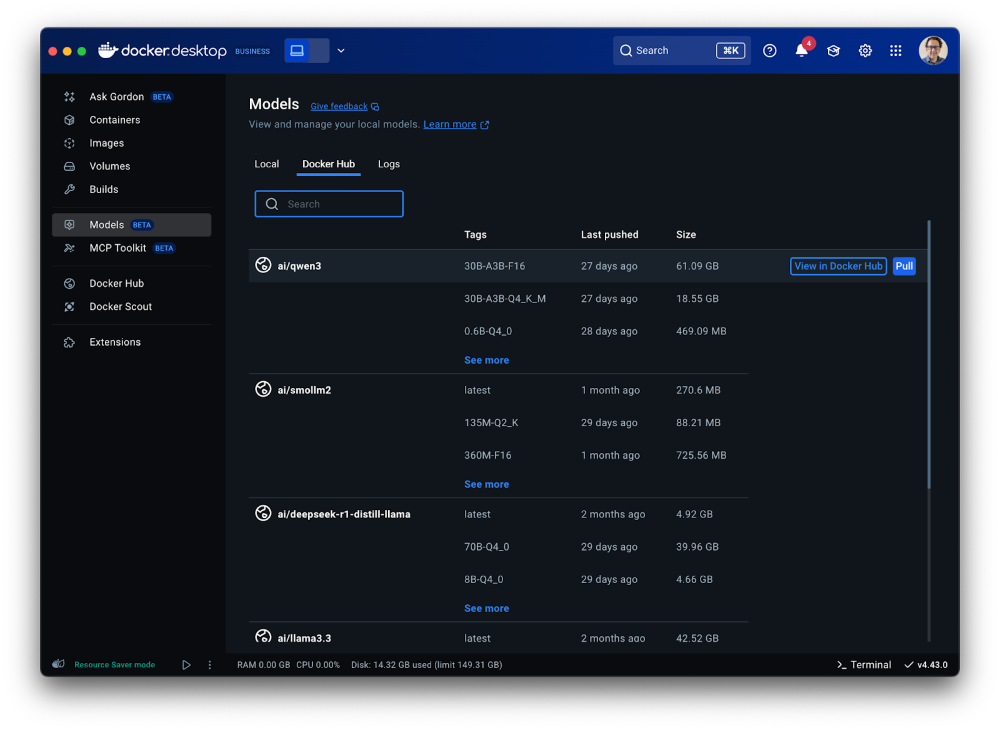

The second tab, “Docker Hub,” offers a comprehensive view for browsing and pulling models from Docker Hub’s AI Catalog, making it easy to get started directly within Docker Desktop, without switching contexts.

Figure 7: A shortcut to the Model catalog from Docker Hub in the Models menu of Docker Desktop

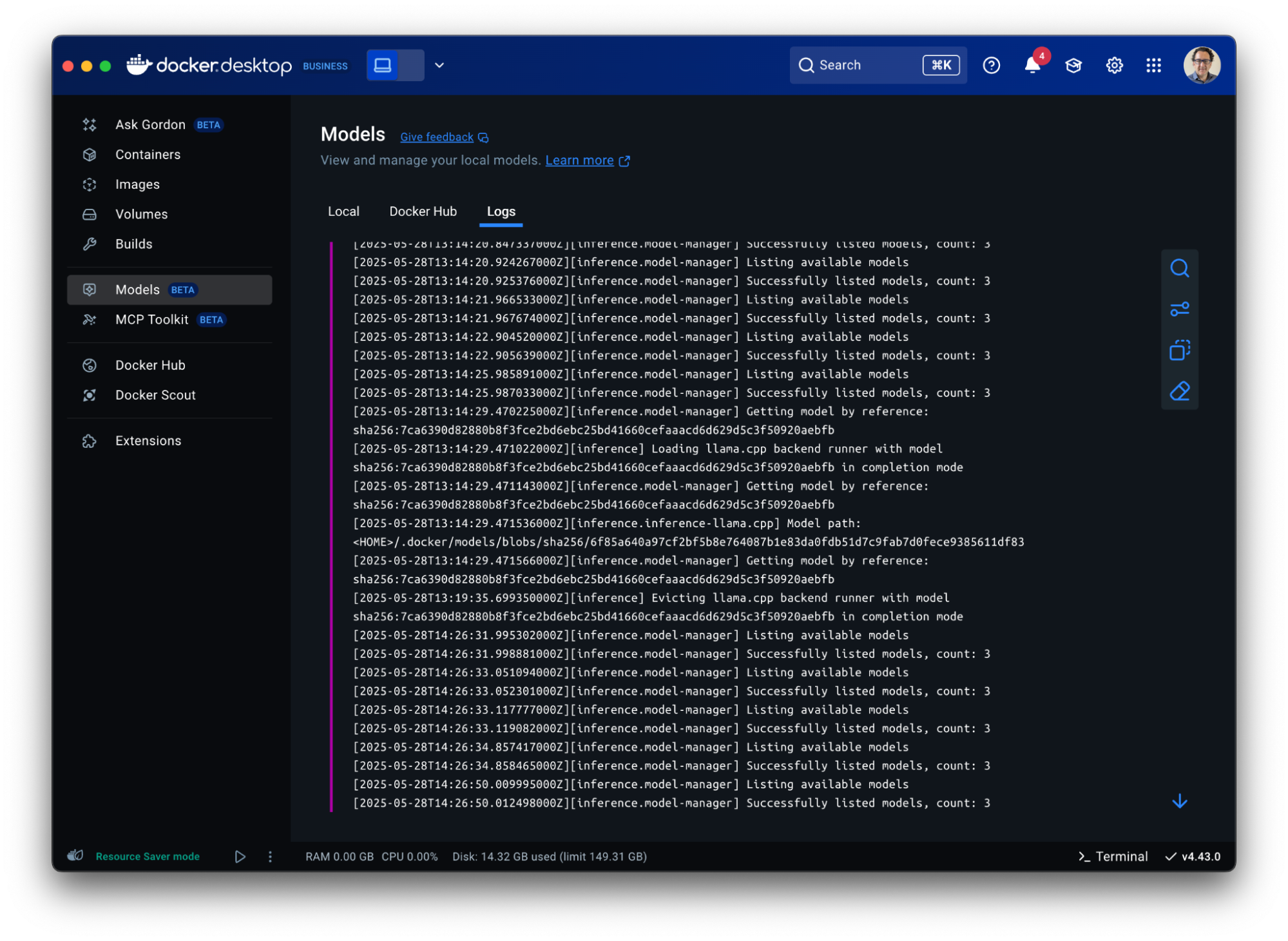

The third tab, “Logs,” offers real-time access to the inference engine’s log tail, giving developers immediate visibility into model execution status and debugging information directly within the Docker Desktop interface.

Figure 8: Gain visibility into model execution status and debugging information in Docker Desktop

Model Packaging Made Simple via CLI

The most significant enhancement to the Docker Model CLI is the introduction of the docker model package command. This new command enables developers to package their models from GGUF format into OCI-compliant artifacts, fundamentally transforming how AI models are distributed and shared. It enables seamless publishing to both public and private OCI-compatible repositories such as Docker Hub and establishes a standardized, secure workflow for model distribution, using the same trusted Docker tools developers already rely on. See our docs for more details.

Conclusion

From intelligent networking enhancements to seamless AI integrations, Docker Desktop 4.42 makes it easier than ever to build with confidence. With native support for IPv6, in-app access to 100+ MCP servers, and expanded platform compatibility for Docker Model Runner, this release is all about meeting developers where they are and equipping them with the tools to take their work further. Update to the latest version today and unlock everything Docker Desktop 4.42 has to offer.

Learn more

- Authenticate and update today to receive your subscription level’s newest Docker Desktop features.

- Subscribe to the Docker Navigator Newsletter.

- Learn about our sign-in enforcement options.

- New to Docker? Create an account.

- Have questions? The Docker community is here to help.

Settings Management for Docker Desktop now generally available in the Admin Console

We’re excited to announce that Settings Management for Docker Desktop is now Generally Available! Settings Management can be configured in the Admin Console for customers with a Docker Business subscription. After a successful Early Access period, this powerful administrative solution has been enhanced with new compliance reporting capabilities, completing our vision for centralized Docker Desktop configuration management at scale through the Admin Console.

For context, Docker provides an enterprise-grade integrated solution suite for container development. This includes administration and management capabilities that support enterprise needs for security, governance, compliance, scale, ease of use, control, insights, and observability. The new Settings Management capabilities in the Admin Console for managing Docker Desktop instances are the latest enhancement in this area. This feature gives organization administrators a single, unified interface to configure and enforce security policies and to control Docker Desktop settings across all users in their organization. Overall, Settings Management eliminates the need to manually configure each individual Docker Desktop machine and ensures consistent compliance and security standards company-wide.

Enterprise-grade management for Docker Desktop

First introduced in Docker Desktop 4.36 as an Early Access feature, Docker Desktop Settings Management enables administrators to centrally deploy and enforce settings policies directly from the Admin Console. From the Docker Admin Console, administrators can configure Docker Desktop settings according to a security policy and select users to whom the policy applies. When users start Docker Desktop, those settings are automatically applied and enforced.

With the addition of Desktop Settings Reporting in Docker Desktop 4.40, the solution offers end-to-end management capabilities from policy creation to compliance verification.

This comprehensive approach to settings management delivers on our promise to simplify Docker Desktop administration while ensuring organizational compliance across diverse enterprise environments.

Complete settings management lifecycle

Desktop Settings Management now offers multiple administration capabilities:

- Admin Console policies: Configure and enforce default Docker Desktop settings directly from the cloud-based Admin Console. There’s no need to distribute admin-settings.json files to local machines via MDM.

- Quick import: Seamlessly migrate existing configurations from admin-settings.json files

- Export and share: Easily share policies as JSON files with security and compliance teams

- Targeted testing: Roll out policies to smaller groups before deploying globally

- Enhanced security: Benefit from improved signing and reporting methods that reduce the risk of tampering with settings

- Settings compliance reporting: Track and verify policy application across all developers in your engineering organization

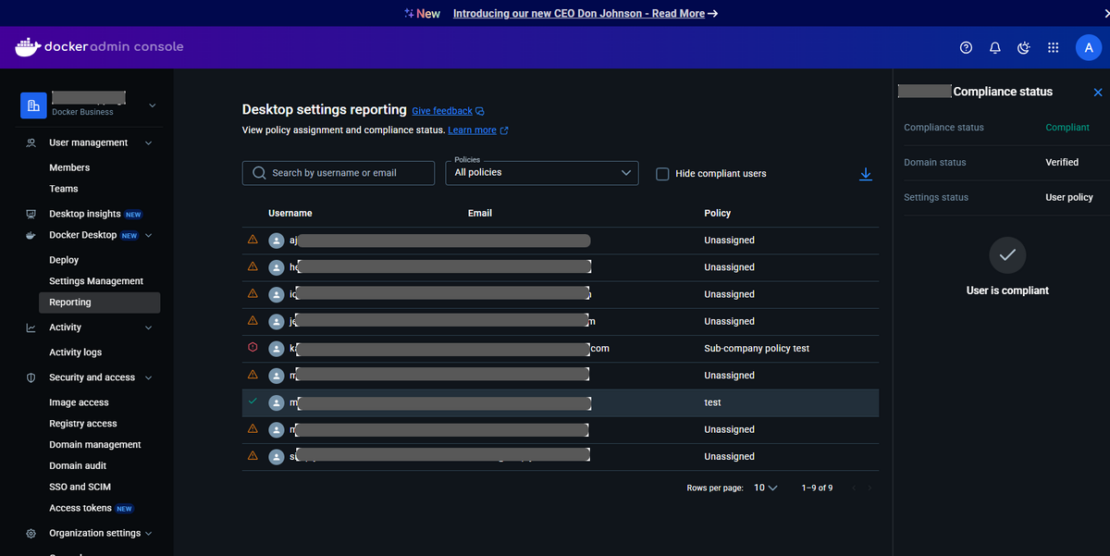

Figure 1: Admin Console Settings Management
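To make the quick-import option more concrete, here is a minimal Python sketch that generates a small admin-settings.json style file of the kind you might import. It assumes the documented layout in which each setting is an object with a locked flag and a value. The helper name build_admin_settings is hypothetical, and the two setting keys are shown from memory as illustrations, so check the Settings Management documentation for the exact keys your Docker Desktop version supports.

```python
import json


def build_admin_settings(locked_values):
    """Build an admin-settings.json style dict from {setting_name: value}.

    Assumption: every setting is written as {"locked": ..., "value": ...}.
    """
    settings = {"configurationFileVersion": 2}
    for name, value in locked_values.items():
        # "locked": True means users cannot change the setting locally.
        settings[name] = {"locked": True, "value": value}
    return settings


# Illustrative setting names; verify them against the official reference.
policy = build_admin_settings({
    "exposeDockerAPIOnTCP2375": False,
    "disableUpdate": False,
})

policy_json = json.dumps(policy, indent=2)
print(policy_json)
```

Keeping the policy in a small script like this also makes it easy to review changes with security and compliance teams before importing the file.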

New: Desktop Settings Reporting

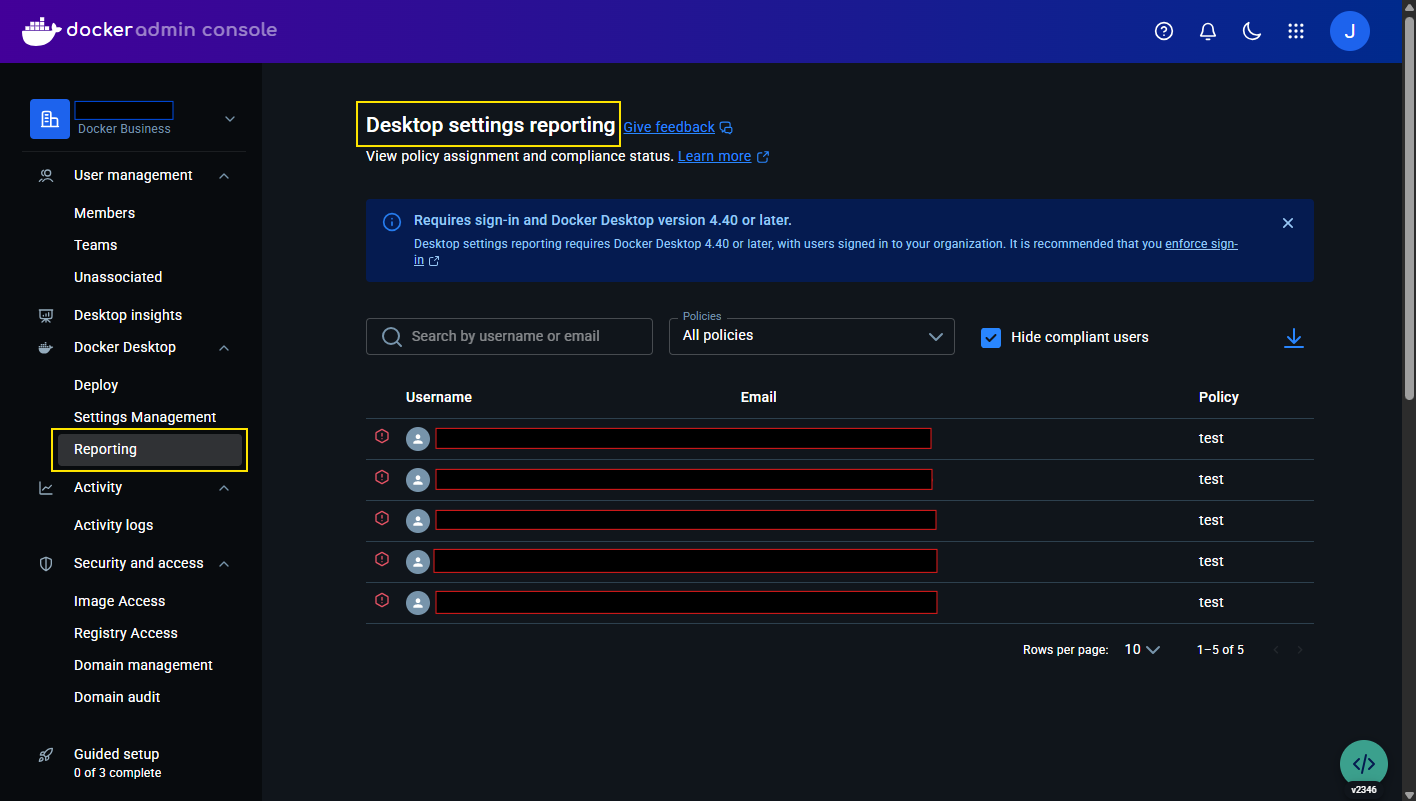

The newly added settings reporting dashboard in the Admin Console provides administrators with crucial visibility into the compliance status of all users:

- Real-time settings compliance tracking: Easily monitor which users are compliant with their assigned settings policies.

- Streamlined troubleshooting: Detailed status information helps administrators diagnose and resolve non-compliance issues.

The settings reporting dashboard is accessible via Admin Console > Docker Desktop > Reporting, offering options to:

- Search by username or email address

- Filter by assigned policies

- Toggle visibility of compliant users to focus on potential issues

- View detailed compliance information for specific users

- Download comprehensive compliance data as a CSV file

For non-compliant users, the settings reporting dashboard provides targeted resolution steps to help administrators quickly address issues and ensure organizational compliance.
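If you download the compliance data as a CSV file, a few lines of Python are enough to pull out the users who need attention. The sketch below is only an illustration: the column names (email, policy, status) and the status values are assumptions, not the documented export format, so adjust them to match the header row of your actual file.

```python
import csv
import io


def non_compliant_users(csv_text, user_column="email", status_column="status"):
    """Return the users whose status is anything other than "compliant".

    Column names are hypothetical; pass the ones used in your export.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        row[user_column]
        for row in reader
        if row[status_column].strip().lower() != "compliant"
    ]


# Made-up sample data, for illustration only.
sample = """email,policy,status
ana@example.com,default,Compliant
bo@example.com,default,Outdated
cy@example.com,strict,Compliant
"""

print(non_compliant_users(sample))
```

With the sample data above, only bo@example.com is reported.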

Figure 2: Admin Console Settings Reporting

Figure 3: Locked settings in Docker Desktop

Enhanced security through centralized management

Desktop Settings Management is particularly valuable for engineering organizations with strict security and compliance requirements. This GA release enables administrators to:

- Enforce consistent configuration across all Docker Desktop instances, without complicated, error-prone MDM-based deployments

- Verify policy application and quickly remediate non-compliant systems

- Reduce the risk of tampering with local settings

- Generate compliance reports for security audits

Getting started

To take advantage of Desktop Settings Management:

- Ensure your Docker Desktop users are signed in on version 4.40 or later

- Log in to the Docker Admin Console

- Navigate to Docker Desktop > Settings Management to create policies

- Navigate to Docker Desktop > Reporting to monitor compliance

For more detailed information, visit our documentation on Settings Management.

What’s next?

Included with Docker Business, the GA release of Settings Management for Docker Desktop represents a significant milestone in our commitment to delivering enterprise-grade management, governance, and administration tools. We’ll continue to enhance these capabilities based on customer feedback, enterprise needs, and evolving security requirements.

We encourage you to explore Settings Management and let us know how it’s helping you manage Docker Desktop instances more efficiently across your development teams and engineering organization.

We’re thrilled to meet our customers’ management and administration needs with these enhancements. Stay connected with us as we build even more administration and management capabilities for development teams and engineering organizations.

Learn more

- Use the Docker Admin Console with your Docker Business subscription

- Read documentation on Desktop Settings Management

- Configure Settings Management with the Admin Console

- Subscribe to the Docker Navigator Newsletter.

- New to Docker? Create an account.

- Have questions? The Docker community is here to help.

Thank you!

Update on the Docker DX extension for VS Code

It’s now been a couple of weeks since we released the new Docker DX extension for Visual Studio Code. This launch reflects a deeper collaboration between Docker and Microsoft to better support developers building containerized applications.

Over the past few weeks, you may have noticed some changes to your Docker extension in VS Code. We want to take a moment to explain what’s happening—and where we’re headed next.

What’s Changing?

The original Docker extension in VS Code is being migrated to the new Container Tools extension, maintained by Microsoft. It’s designed to make it easier to build, manage, and deploy containers—streamlining the container development experience directly inside VS Code.

As part of this partnership, it was decided to bundle the new Docker DX extension with the existing Docker extension, so that it would install automatically to make the process seamless.

While the automatic installation was intended to simplify the experience, we realize it may have caught some users off guard. To provide more clarity and choice, the next release will make Docker DX Extension an opt-in installation, giving you full control over when and how you want to use it.

What’s New from Docker?

Docker is introducing the new Docker DX extension, focused on delivering a best-in-class authoring experience for Dockerfiles, Compose files, and Bake files.

Key features include:

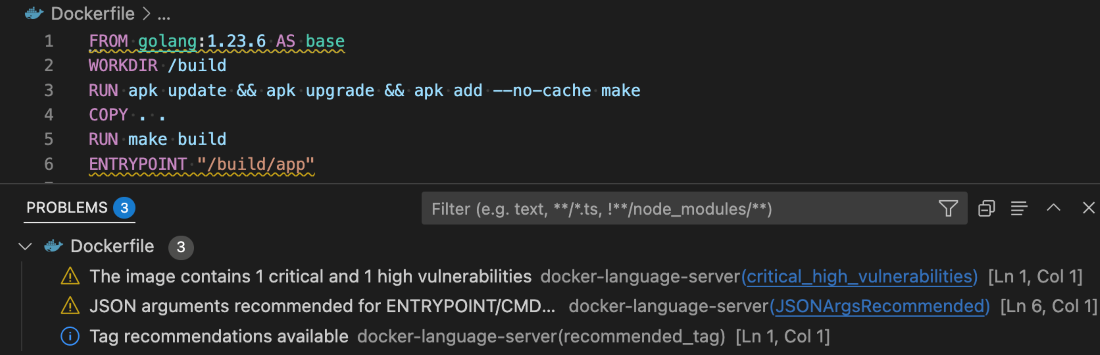

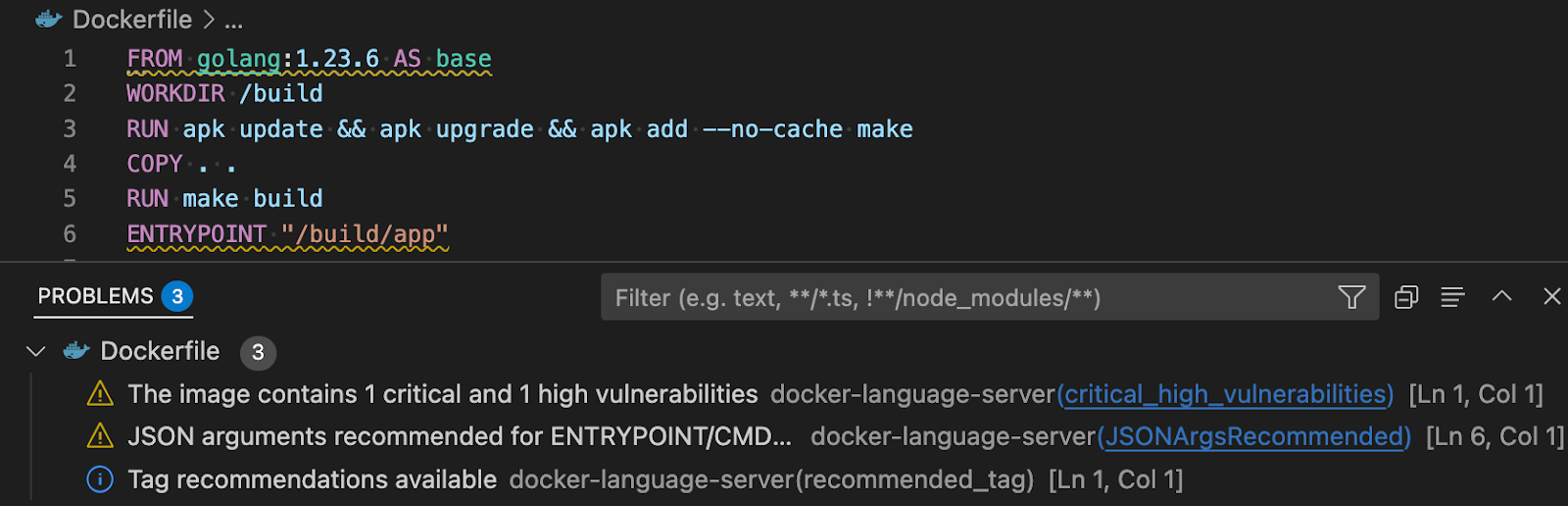

- Dockerfile linting: Get build warnings and best-practice suggestions directly from BuildKit and Buildx—so you can catch issues early, right inside your editor.

- Image vulnerability remediation (experimental): Automatically flag references to container images with known vulnerabilities, directly in your Dockerfiles.

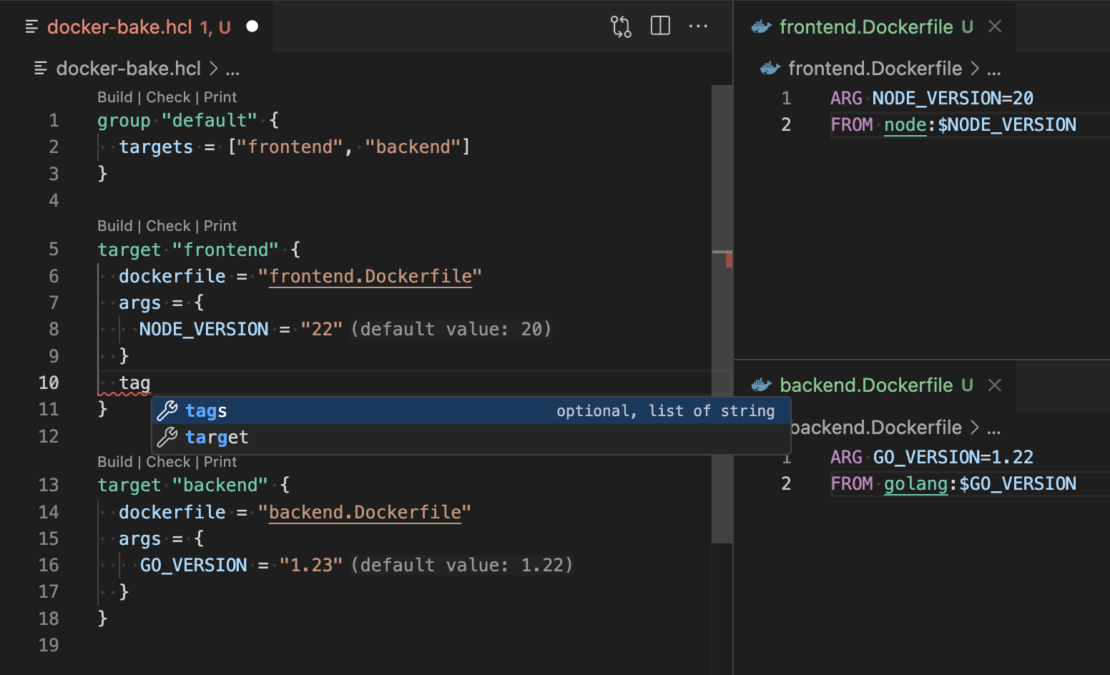

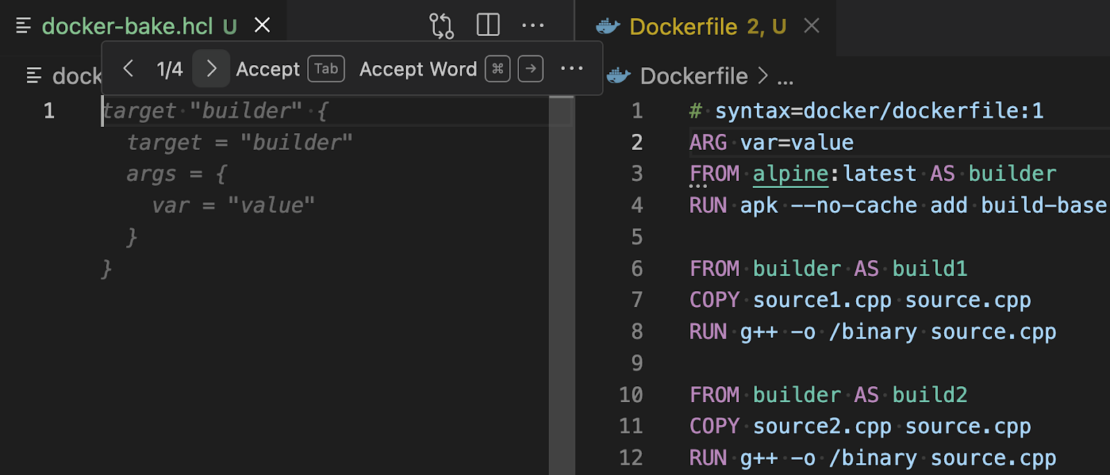

- Bake file support: Enjoy code completion, variable navigation, and inline suggestions when authoring Bake files—including the ability to generate targets based on your Dockerfile stages.

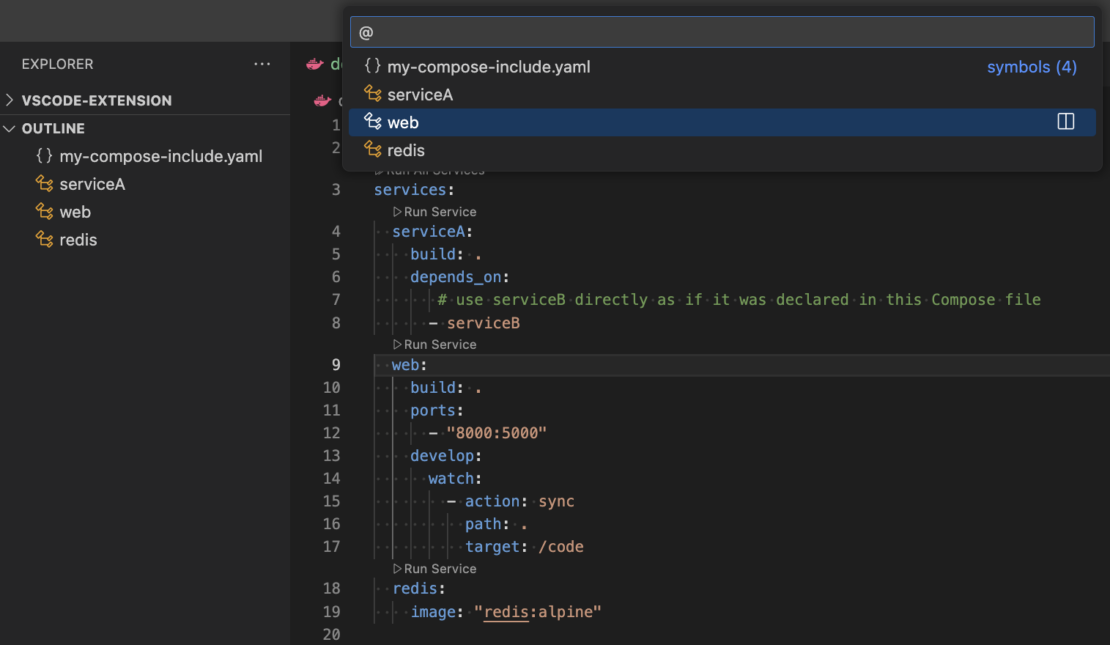

- Compose file outline: Easily navigate and understand complex Compose files with a new outline view in the editor.

Better Together

These two extensions are designed to work side-by-side, giving you the best of both worlds:

- Powerful tooling to build, manage, and deploy your containers

- Smart, contextual authoring support for Dockerfiles, Compose files, and Bake files

And the best part? Both extensions are free and fully open source.

Thank You for Your Patience

We know changes like this can be disruptive. While our goal was to make the transition as seamless as possible, we recognize that the approach caused some confusion, and we sincerely apologize for the lack of early communication.

The teams at Docker and Microsoft are committed to delivering the best container development experience possible—and this is just the beginning.

Where Docker DX is Going Next

At Docker, we’re proud of the contributions we’ve made to the container ecosystem, including Dockerfiles, Compose, and Bake.

We’re committed to ensuring the best possible experience when editing these files in your IDE, with instant feedback while you work.

Here’s a glimpse of what’s coming:

- Expanded Dockerfile checks: More best-practice validations, actionable tips, and guidance—surfaced right when you need them.

- Stronger security insights: Deeper visibility into vulnerabilities across your Dockerfiles, Compose files, and Bake configurations.

- Improved debugging and troubleshooting: Soon, you’ll be able to live debug Docker builds—step through your Dockerfile line-by-line, inspect the filesystem at each stage, see what’s cached, and troubleshoot issues faster.

We Want Your Feedback!

Your feedback is critical in helping us improve the Docker DX extension and your overall container development experience.

If you encounter any issues or have ideas for enhancements you’d like to see, please let us know:

- Open an issue on the Docker DX VS Code extension GitHub repo

- Or submit feedback through the Docker feedback page

We’re listening and excited to keep making things better for you!

Docker Desktop 4.41: Docker Model Runner supports Windows, Compose, and Testcontainers integrations, Docker Desktop on the Microsoft Store

Big things are happening in Docker Desktop 4.41! Whether you’re building the next AI breakthrough or managing development environments at scale, this release is packed with tools to help you move faster and collaborate smarter. From bringing Docker Model Runner to Windows (with NVIDIA GPU acceleration!), Compose and Testcontainers, to new ways to manage models in Docker Desktop, we’re making AI development more accessible than ever. Plus, we’ve got fresh updates for your favorite workflows — like a new Docker DX Extension for Visual Studio Code, a speed boost for Mac users, and even a new location for Docker Desktop on the Microsoft Store. Also, we’re enabling ACH transfer as a payment option for self-serve customers. Let’s dive into what’s new!

Docker Model Runner now supports Windows, Compose & Testcontainers

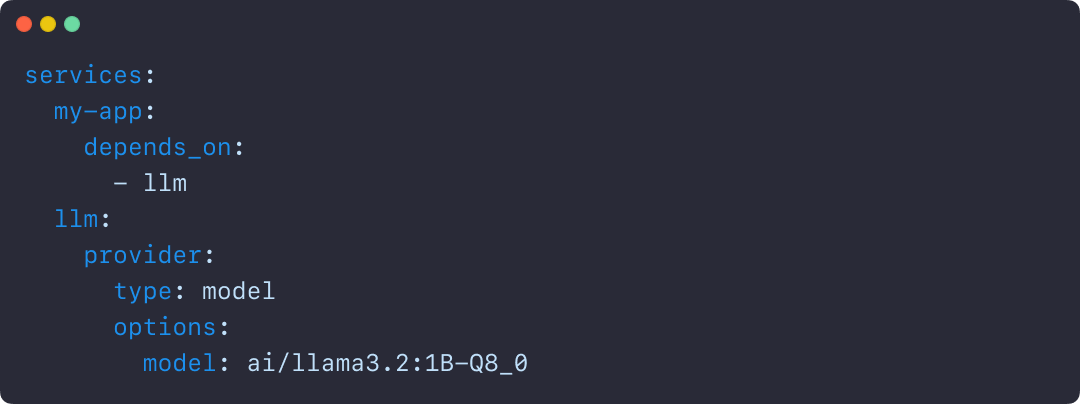

This release brings Docker Model Runner to Windows users with NVIDIA GPU support. We’ve also introduced improvements that make it easier to manage, push, and share models on Docker Hub and integrate with familiar tools like Docker Compose and Testcontainers. Docker Model Runner works with Docker Compose projects for orchestrating model pulls and injecting model runner services, and Testcontainers via its libraries. These updates continue our focus on helping developers build AI applications faster using existing tools and workflows.

In addition to CLI support for managing models, Docker Desktop now includes a dedicated “Models” section in the GUI. This gives developers more flexibility to browse, run, and manage models visually, right alongside their containers, volumes, and images.

Figure 1: Easily browse, run, and manage models from Docker Desktop

Further extending the developer experience, you can now push models directly to Docker Hub, just like you would with container images. This creates a consistent, unified workflow for storing, sharing, and collaborating on models across teams. With models treated as first-class artifacts, developers can version, distribute, and deploy them using the same trusted Docker tooling they already use for containers — no extra infrastructure or custom registries required.

docker model push <model>

The Docker Compose integration makes it easy to define, configure, and run AI applications alongside traditional microservices within a single Compose file. This removes the need for separate tools or custom configurations, so teams can treat models like any other service in their dev environment.

Figure 2: Using Docker Compose to declare services, including running AI models

Similarly, the Testcontainers integration extends testing to AI models, with initial support for Java and Go and more languages on the way. This allows developers to run applications and create automated tests using AI services powered by Docker Model Runner. By enabling full end-to-end testing with Large Language Models, teams can confidently validate their application logic and integration code, and drive high-quality releases.

String modelName = "ai/gemma3";

DockerModelRunnerContainer modelRunnerContainer = new DockerModelRunnerContainer()
    .withModel(modelName);
modelRunnerContainer.start();

OpenAiChatModel model = OpenAiChatModel.builder()
    .baseUrl(modelRunnerContainer.getOpenAIEndpoint())
    .modelName(modelName)
    .logRequests(true)
    .logResponses(true)
    .build();

String answer = model.chat("Give me a fact about Whales.");
System.out.println(answer);
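The Java example talks to the model through an OpenAI-style endpoint, so the same kind of request can be sketched in other languages too. Below is a minimal Python illustration that builds a chat completion request using only the standard library. The base URL is an assumption (it depends on how Model Runner is exposed in your setup), and build_chat_request and chat are hypothetical helper names, not part of any Docker library.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt):
    """Build an OpenAI-style chat completion request (nothing is sent yet)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(base_url, model, prompt):
    """Send the request and return the first choice's text.

    Requires a reachable OpenAI-compatible endpoint.
    """
    with urllib.request.urlopen(build_chat_request(base_url, model, prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Assumed base URL for illustration; replace it with your actual endpoint.
request = build_chat_request(
    "http://localhost:12434/engines/v1",
    "ai/gemma3",
    "Give me a fact about whales.",
)
print(request.full_url)
```

Separating request construction from sending keeps the payload easy to inspect in a unit test before any model is running.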

Docker DX Extension in Visual Studio Code: Catch issues early, code with confidence

The Docker DX Extension is now live on the Visual Studio Marketplace. This extension streamlines your container development workflow with rich editing, linting features, and built-in vulnerability scanning. You’ll get inline warnings and best-practice recommendations for your Dockerfiles, powered by Build Checks, a feature we introduced last year.

It also flags known vulnerabilities in container image references, helping you catch issues early in the dev cycle. For Bake files, it offers completion, variable navigation, and inline suggestions based on your Dockerfile stages. And for those managing complex Docker Compose setups, an outline view makes it easier to navigate and understand services at a glance.

Figure 3: Docker DX Extension in Visual Studio Code provides actionable recommendations for fixing vulnerabilities and optimizing Dockerfiles

Read more about this in our announcement blog and GitHub repo. Get started today by installing Docker DX from the Visual Studio Marketplace.

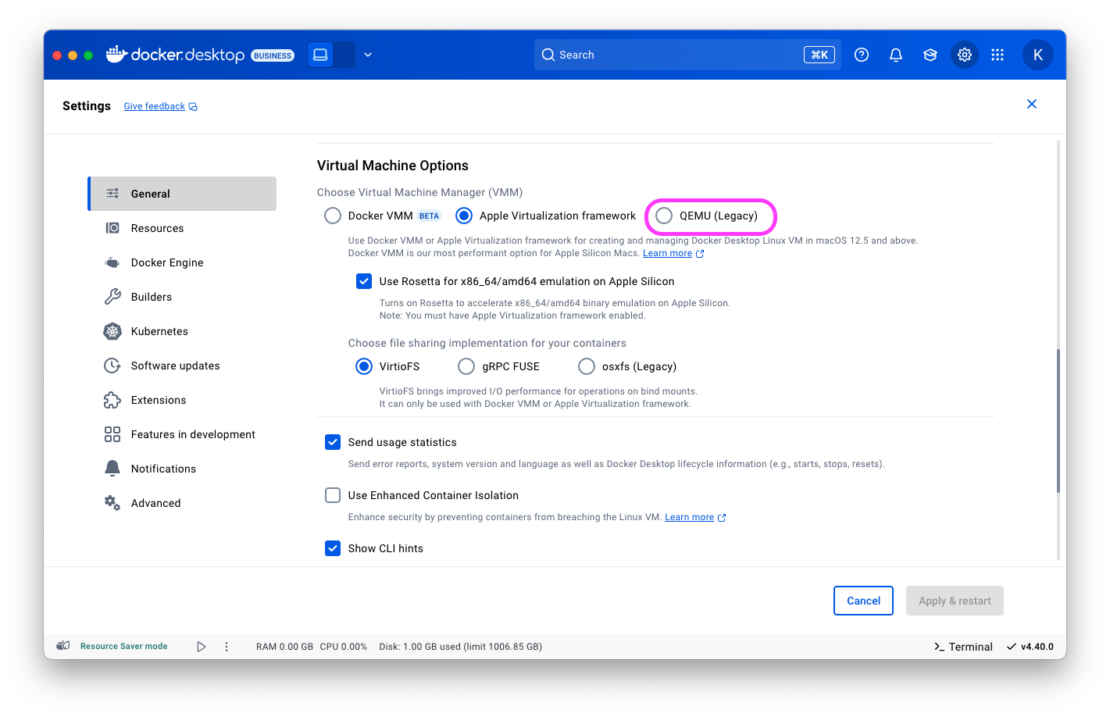

macOS QEMU virtualization option deprecation

The QEMU virtualization option in Docker Desktop for Mac will be deprecated on July 14, 2025.

With the new Apple Virtualization Framework, you’ll experience improved performance, stability, and compatibility with macOS updates as well as tighter integration with Apple Silicon architecture.

What this means for you:

- If you’re using QEMU as your virtualization backend on macOS, you’ll need to switch to either Apple Virtualization Framework (default) or Docker VMM (beta) options.

- This does NOT affect QEMU’s role in emulating non-native architectures for multi-platform builds.

- Your multi-architecture builds will continue to work as before.

For complete details, please see our official announcement.

Introducing Docker Desktop in the Microsoft Store

Docker Desktop is now available for download from the Microsoft Store! We’re rolling out an EXE-based installer for Docker Desktop on Windows. This new distribution channel provides an enhanced installation and update experience for Windows users while simplifying deployment management for IT administrators across enterprise environments.

Key benefits

For developers:

- Automatic Updates: The Microsoft Store handles all update processes automatically, ensuring you’re always running the latest version without manual intervention.

- Streamlined Installation: Experience a more reliable setup process with fewer startup errors.

- Simplified Management: Manage Docker Desktop alongside your other applications in one familiar interface.

For IT admins:

- Native Intune MDM Integration: Deploy Docker Desktop across your organization with Microsoft’s native management tools.

- Centralized Deployment Control: Roll out Docker Desktop more easily through the Microsoft Store’s enterprise distribution channels.

- Automatic Updates Regardless of Security Settings: Updates are handled automatically by the Microsoft Store infrastructure, even in organizations where users don’t have direct store access.

- Familiar Process: The update mechanism maps to the winget command, providing consistency with other enterprise software management tools.

This new distribution option represents our commitment to improving the Docker experience for Windows users while providing enterprise IT teams with the management capabilities they need.

Unlock greater flexibility: Enable ACH transfer as a payment option for self-serve customers

We’re focused on making it easier for teams to scale, grow, and innovate, all on their own terms. That’s why we’re excited to announce an upgrade to the self-serve purchasing experience: customers can pay via ACH transfer starting on April 30, 2025.

Historically, self-serve purchases were limited to credit card payments, forcing many customers who could not use credit cards into manual sales processes, even for small seat expansions. With the introduction of an ACH transfer payment option, customers can choose the payment method that works best for their business, with fewer delays and less unnecessary friction.

This payment option upgrade empowers customers to:

- Purchase more independently without engaging sales

- Choose between credit card or ACH transfer with a verified bank account

By empowering enterprises and developers, we’re freeing up your time, and ours, to focus on what matters most: building, scaling, and succeeding with Docker.

Visit our documentation to explore the new payment options, or log in to your Docker account to get started today!

Wrapping up

With Docker Desktop 4.41, we’re continuing to meet developers where they are — making it easier to build, test, and ship innovative apps, no matter your stack or setup. Whether you’re pushing AI models to Docker Hub, catching issues early with the Docker DX Extension, or enjoying faster virtualization on macOS, these updates are all about helping you do your best work with the tools you already know and love. We can’t wait to see what you build next!


Docker Desktop for Mac: QEMU Virtualization Option to be Deprecated in 90 Days

We are announcing the upcoming deprecation of QEMU as a virtualization option for Docker Desktop on Apple Silicon Macs. After serving as our legacy virtualization solution during the early transition to Apple Silicon, QEMU will be fully deprecated 90 days from today, on July 14, 2025. This deprecation does not affect QEMU’s role in emulating non-native architectures for multi-platform builds. By moving to Apple Virtualization Framework or Docker VMM, you will ensure optimal performance.

Why We’re Making This Change

Our telemetry shows that a very small percentage of users are still using the QEMU option. We’ve maintained QEMU support for backward compatibility, but both Docker VMM and Apple Virtualization Framework now offer:

- Significantly better performance

- Improved stability

- Enhanced compatibility with macOS updates

- Better integration with Apple Silicon architecture

What This Means For You

If you’re currently using QEMU as your Virtual Machine Manager (VMM) on Docker Desktop for Mac:

- Your current installation will continue to work normally during the 90-day transition period

- After July 14, 2025, Docker Desktop releases will automatically migrate your environment to Apple Virtualization Framework

- You’ll experience improved performance and stability with the newer virtualization options

Migration Plan

The migration process will be smooth and straightforward:

- Users on the latest Docker Desktop release will be automatically migrated to Apple Virtualization Framework after the 90-day period

- During the transition period, you can manually switch to either Docker VMM (our fastest option for Apple Silicon Macs) or Apple Virtualization Framework through Settings > General > Virtual Machine Options

- For 30 days after the deprecation date, the QEMU option will remain available in settings for users who encounter migration issues

- After this extended period, the QEMU option will be fully removed

Note: This deprecation does not affect QEMU’s role in emulating non-native architectures for multi-platform builds.
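The dates in this timeline follow from simple date arithmetic, which can be checked with a short Python sketch. It assumes only what is stated above: a deprecation date of July 14, 2025, a 90-day notice period before it, and a 30-day grace period after it during which the QEMU option remains available. The variable names are illustrative.

```python
from datetime import date, timedelta

deprecation_date = date(2025, 7, 14)

# The announcement was made 90 days before the deprecation date.
announcement_date = deprecation_date - timedelta(days=90)

# The QEMU option stays in settings for 30 days after the deprecation date.
grace_period_end = deprecation_date + timedelta(days=30)

print("Announced:        ", announcement_date.isoformat())   # 2025-04-15
print("Deprecated:       ", deprecation_date.isoformat())    # 2025-07-14
print("Grace period ends:", grace_period_end.isoformat())    # 2025-08-13
```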

What You Should Do Now

We recommend proactively switching to one of our newer VMM options before the automatic migration:

- Update to the latest version of Docker Desktop for Mac

- Open Docker Desktop Settings > General

- Under “Choose Virtual Machine Manager (VMM)” select either:

- Docker VMM (BETA) – Our fastest option for Apple Silicon Macs

- Apple Virtualization Framework – A mature, high-performance alternative

Questions or Concerns?

If you have questions or encounter any issues during migration, please:

- Visit our documentation

- Reach out to us via GitHub issues

- Join the conversation on the Docker Community Forums

We’re committed to making this transition as seamless as possible while delivering the best development experience on macOS.

New Docker Extension for Visual Studio Code

Today, we are excited to announce the release of a new, open-source Docker Language Server and Docker DX VS Code extension. In a joint collaboration between Docker and the Microsoft Container Tools team, this new integration enhances the existing Docker extension with improved Dockerfile linting, inline image vulnerability checks, Docker Bake file support, and outlines for Docker Compose files. By working directly with Microsoft, we’re ensuring a native, high-performance experience that complements the existing developer workflow. It’s the next evolution of Docker tooling in VS Code — built to help you move faster, catch issues earlier, and focus on what matters most: building great software.

What’s the Docker DX extension?

The Docker DX extension is focused on providing developers with faster feedback as they edit. Whether you’re authoring a complex Compose file or fine-tuning a Dockerfile, the extension surfaces relevant suggestions, validations, and warnings in real time.

Key features include:

- Dockerfile linting: Get build warnings and best-practice suggestions directly from BuildKit and Buildx.

- Image vulnerability remediation (experimental): Flags references to container images with known vulnerabilities directly in Dockerfiles.

- Bake file support: Includes code completion, variable navigation, and inline suggestions for generating targets based on your Dockerfile stages.

- Compose file outline: Easily navigate complex Compose files with an outline view in the editor.

If you’re already using the Docker VS Code extension, the new features are included — just update the extension and start using them!

Dockerfile linting and vulnerability remediation

The inline Dockerfile linting provides warnings and best-practice guidance for writing Dockerfiles from the experts at Docker, powered by Build Checks. Potential vulnerabilities are highlighted directly in the editor with context about their severity and impact, powered by Docker Scout.

Figure 1: Providing actionable recommendations for fixing vulnerabilities and optimizing Dockerfiles

Early feedback directly in Dockerfiles keeps you focused and saves you and your team time debugging and remediating later.

Docker Bake files

The Docker DX extension makes authoring and editing Docker Bake files quick and easy. It provides code completion, code navigation, and error reporting to make editing Bake files a breeze. The extension will also look at your Dockerfile and suggest Bake targets based on the build stages you have defined in your Dockerfile.

Figure 2: Editing Bake files is simple and intuitive with the rich language features that the Docker DX extension provides.

Figure 3: Creating new Bake files is straightforward as your Dockerfile’s build stages are analyzed and suggested as Bake targets.

Compose outlines

Quickly navigate complex Compose files with the extension’s support for outlines available directly through VS Code’s command palette.

Figure 4: Navigate complex Compose files with the outline panel.

Don’t use VS Code? Try the Language Server!

The features offered by the Docker DX extension are powered by the brand-new Docker Language Server, built on the Language Server Protocol (LSP). This means the same smart editing experience — like real-time feedback, validation, and suggestions for Dockerfiles, Compose, and Bake files — is available in your favorite editor.
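For readers curious about what “built on LSP” means in practice, here is a minimal Python sketch of the LSP base protocol framing that any editor uses to talk to a language server: a Content-Length header, a blank line, then a JSON-RPC body. It does not start or depend on the Docker Language Server, and frame_message and parse_message are hypothetical helper names used only for illustration.

```python
import json


def frame_message(payload):
    """Encode a JSON-RPC payload with the LSP Content-Length header."""
    body = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    header = f"Content-Length: {len(body)}\r\n\r\n".encode("ascii")
    return header + body


def parse_message(data):
    """Decode one framed LSP message back into a Python dict."""
    header, _, body = data.partition(b"\r\n\r\n")
    length = None
    for line in header.split(b"\r\n"):
        name, _, value = line.partition(b":")
        if name.strip().lower() == b"content-length":
            length = int(value.strip())
    if length is None:
        raise ValueError("missing Content-Length header")
    return json.loads(body[:length].decode("utf-8"))


# The first request a client sends; real clients also describe their capabilities.
initialize = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {"processId": None, "rootUri": None, "capabilities": {}},
}

wire = frame_message(initialize)
print(wire.decode("utf-8"))
```

Because the framing is editor-agnostic, any editor with an LSP client can, in principle, reuse the same server.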

Wrapping up

Install the extension from Docker DX – Visual Studio Marketplace today! The functionality is also automatically installed with the existing Docker VS Code extension from Microsoft.

Share your feedback on how it’s working for you, and share what features you’d like to see next. If you’d like to learn more or contribute to the project, check out our GitHub repo.

Learn more

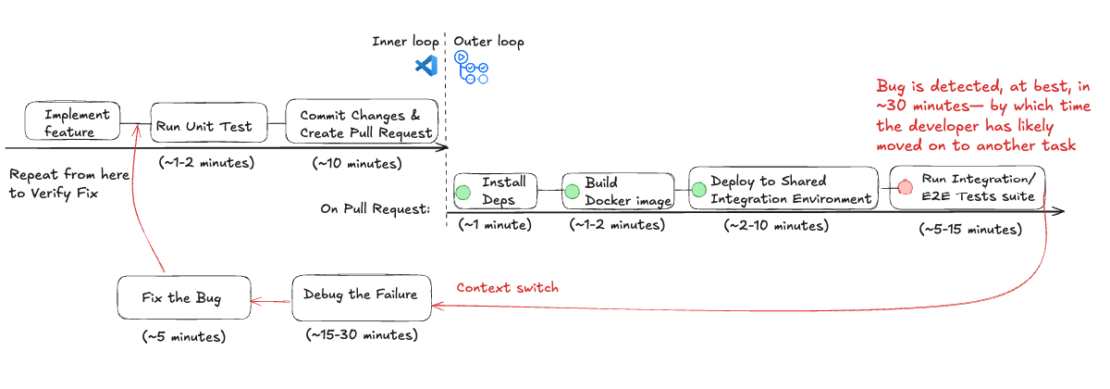

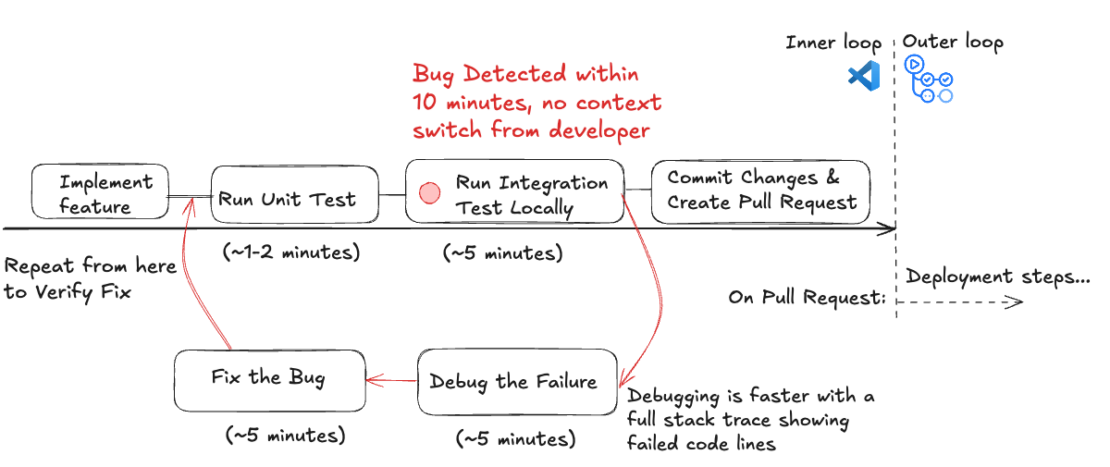


Master Docker and VS Code: Supercharge Your Dev Workflow

Hey there, fellow developers and DevOps champions! I’m excited to share a workflow that has transformed my teams’ development experience time and time again: pairing Docker and Visual Studio Code (VS Code). If you’re looking to speed up local development, minimize those “it works on my machine” excuses, and streamline your entire workflow, you’re in the right place.

I’ve personally encountered countless environment mismatches during my career, and every time, Docker plus VS Code saved the day — no more wasted hours debugging bizarre OS/library issues on a teammate’s machine.

In this post, I’ll walk you through how to get Docker and VS Code humming in perfect harmony, cover advanced debugging scenarios, and even drop some insider tips on security, performance, and ephemeral development environments. By the end, you’ll be equipped to tackle DevOps challenges confidently.

Let’s jump in!

Why use Docker with VS Code?

Whether you’re working solo or collaborating across teams, Docker and Visual Studio Code work together to ensure your development workflow remains smooth, scalable, and future-proof. Let’s take a look at some of the most notable benefits to using Docker with VS Code.

- Consistency Across Environments: Docker’s containers standardize everything from OS libraries to dependencies, creating a consistent dev environment. No more “it works on my machine!” fiascos.

- Faster Development Feedback Loop: VS Code’s integrated terminal, built-in debugging, and Docker extension cut down on context-switching, making you more productive in real time.

- Multi-Language, Multi-Framework: Whether you love Node.js, Python, .NET, or Ruby, Docker packages your app identically. Meanwhile, VS Code’s extension ecosystem handles language-specific linting and debugging.

- Future-Proof: By 2025, ephemeral development environments, container-native CI/CD, and container security scanning (e.g., Docker Scout) will be table stakes for modern teams. Combining Docker and VS Code puts you on the fast track.

Together, Docker and VS Code create a streamlined, flexible, and reliable development environment.

How Docker transformed my development workflow

Let me tell you a quick anecdote:

A few years back, my team spent two days diagnosing a weird bug that only surfaced on one developer’s machine. It turned out they had an outdated system library that caused subtle conflicts. We wasted hours! In the very next sprint, we containerized everything using Docker. Suddenly, everyone’s environment was the same — no more “mismatch” drama. That was the day I became a Docker convert for life.

Since then, I’ve recommended Dockerized workflows to every team I’ve worked with, and thanks to VS Code, spinning up, managing, and debugging containers has become even more seamless.

How to set up Docker in Visual Studio Code

Setting up Docker in Visual Studio Code can be done in a matter of minutes. In two steps, you should be well on your way to leveraging the power of this dynamic duo. You can use the command line to manage containers and build images directly within VS Code. Also, you can create a new container with specific Docker run parameters to maintain configuration and settings.

But first, let’s make sure you’re using the right version!

Ensuring version compatibility

As of early 2025, here’s what I recommend to minimize friction:

- Docker Desktop: v4.37 or newer (Windows, macOS, or Linux)

- Docker Engine: 27.5+ (if running Docker directly on Linux servers)

- Visual Studio Code: 1.96+

Lastly, check the Docker release notes and VS Code updates to stay aligned with the latest features and patches. Trust me, staying current prevents a lot of “uh-oh” moments later.
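If you want to check these minimums in a setup script, compare version numbers numerically rather than as strings (as text, "4.9" sorts after "4.37"). Here is a minimal Python sketch. The minimum versions come from the list above, while the tool keys and the helper names parse_version and meets_minimum are hypothetical. It assumes plain dotted versions and would need extra handling for suffixes such as "-rc1".

```python
def parse_version(text):
    """Turn "v4.37.1" into (4, 37, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.lstrip("v").split("."))


# Minimum versions recommended above.
MINIMUMS = {
    "docker-desktop": (4, 37),
    "docker-engine": (27, 5),
    "vscode": (1, 96),
}


def meets_minimum(tool, installed):
    """Return True if the installed version is at least the recommended one."""
    return parse_version(installed) >= MINIMUMS[tool]


print(meets_minimum("docker-desktop", "4.41.0"))  # True
print(meets_minimum("docker-desktop", "4.9.0"))   # False: 9 < 37 numerically
print(meets_minimum("vscode", "1.96"))            # True
```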

1. Docker Desktop (or Docker Engine on Linux)

- Download from Docker’s official site.

- Install and follow prompts.

2. Visual Studio Code

- Install from VS Code’s website.

- Docker Extension: Press Ctrl+Shift+X (Windows/Linux) or Cmd+Shift+X (macOS) in VS Code → search for “Docker” → Install.

- Explore: You’ll see a whale icon on the left toolbar, giving you a GUI-like interface for Docker containers, images, and registries.

Pro Tip: If you’re going fully DevOps, keep Docker Desktop and VS Code up to date. Each release often adds new features or performance/security enhancements.

Examples: Building an app with a Docker container

Node.js example

We’ll create a basic Node.js web server using Express, then build and run it in Docker. The server listens on port 3000 and returns a simple greeting.

Create a Project Folder:

mkdir node-docker-app
cd node-docker-app

Initialize a Node.js Application:

npm init -y
npm install express

- ‘npm init -y’ generates a default package.json.

- ‘npm install express’ pulls in the Express framework and saves it to ‘package.json’.

Create the Main App File (index.js):

cat <<EOF >index.js
const express = require('express');
const app = express();
const port = 3000;

app.get('/', (req, res) => {
  res.send('Hello from Node + Docker + VS Code!');
});

app.listen(port, () => {
  console.log(\`App running on port \${port}\`);
});
EOF

- This code starts a server on port 3000. When a user hits http://localhost:3000, they see a “Hello from Node + Docker + VS Code!” message.

Add an Annotated Dockerfile:

# Use a lightweight Node.js 23.x image based on Alpine Linux
FROM node:23.6.1-alpine

# Set the working directory inside the container
WORKDIR /usr/src/app

# Copy only the package files first (for efficient layer caching)
COPY package*.json ./

# Install Node.js dependencies
RUN npm install

# Copy the entire project (including index.js) into the container
COPY . .

# Expose port 3000 for the Node.js server (metadata)
EXPOSE 3000

# The default command to run your app
CMD ["node", "index.js"]

Build and run the container image:

docker build -t node-docker-app .

Run the container, mapping port 3000 on the container to port 3000 locally:

docker run -p 3000:3000 node-docker-app

- Open http://localhost:3000 in your browser. You’ll see “Hello from Node + Docker + VS Code!”
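The Dockerfile above copies the whole project with COPY . ., which also sweeps in a locally installed node_modules folder and the .git directory if they exist. A .dockerignore file keeps them out of the build context. The sketch below is one possible starting point; the exact entries are assumptions and depend on what lives in your project folder.

```shell
# Write a minimal .dockerignore next to the Dockerfile.
# The entries are illustrative; adjust them to your project.
cat <<'EOF' > .dockerignore
node_modules
npm-debug.log
.git
.vscode
EOF
```

Rebuild with docker build -t node-docker-app . afterward; the image then relies only on the dependencies installed by RUN npm install inside the container.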

Python example

Here, we’ll build a simple Flask application that listens on port 3001 and displays a greeting. We’ll then package it into a Docker container.

Create a Project Folder:

mkdir python-docker-app
cd python-docker-app

Create a Basic Flask App (app.py):

cat <<EOF >app.py
from flask import Flask

app = Flask(__name__)

@app.route('/')
def hello():
    return 'Hello from Python + Docker!'

if __name__ == '__main__':
    app.run(host='0.0.0.0', port=3001)
EOF

- This code defines a minimal Flask server that responds to requests on port 3001.

Add Dependencies:

echo "Flask==2.3.0" > requirements.txt

- Lists your Python dependencies. In this case, just Flask.

Create an Annotated Dockerfile:

# Use Python 3.11 on Alpine for a smaller base image
FROM python:3.11-alpine

# Set the container's working directory
WORKDIR /app

# Copy only the requirements file first to leverage caching
COPY requirements.txt .

# Install Python libraries
RUN pip install -r requirements.txt

# Copy the rest of the application code
COPY . .

# Expose port 3001, where Flask will listen
EXPOSE 3001

# Run the Flask app
CMD ["python", "app.py"]

Build and Run:

docker build -t python-docker-app .
docker run -p 3001:3001 python-docker-app

- Visit http://localhost:3001 and you’ll see “Hello from Python + Docker!”

Manage containers in VS Code with the Docker Extension

Once you install the Docker extension in Visual Studio Code, you can easily manage containers, images, and registries — bringing the full power of Docker into VS Code. You can also open a code terminal within VS Code to execute commands and manage applications seamlessly through the container’s isolated filesystem. And the context menu can be used to perform actions like starting, stopping, and removing containers.

With the Docker extension installed, open VS Code and click the Docker icon:

- Containers: View running/stopped containers, view logs, stop, or remove them at a click. Each container is associated with a specific container name, which helps you identify and manage them effectively.

- Images: Inspect your local images, tag them, or push to Docker Hub/other registries.

- Registries: Quickly pull from Docker Hub or private repos.

No more memorizing container IDs or retyping long CLI commands. This is especially handy for visual folks or those new to Docker.

Advanced Debugging (Single & Multi-Service)

Debugging containerized applications can be challenging, but with Docker and Visual Studio Code, you can streamline the process. Here’s some advanced debugging that you can try for yourself!

- Containerized Debug Ports

  - For Node, expose port 9229 (EXPOSE 9229) and run with --inspect.

  - In VS Code, create a “Docker: Attach to Node” debug config to step through code in real time.

- Microservices / Docker Compose

  - For multiple services, define them in compose.yml.

  - Spin them up with docker compose up.

  - Configure VS Code to attach debuggers to each service’s port (e.g., microservice A on 9229, microservice B on 9230).

- Remote – Containers (Dev Containers)

- Use VS Code’s Dev Containers extension for a fully containerized development environment.

- Perfect for large teams: Everyone codes in the same environment with preinstalled tools/libraries. Onboarding new devs is a breeze.
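To make the multi-service debugging setup more concrete, here is a rough sketch that writes a compose.yml and a matching .vscode/launch.json. The service names, the ./web-service and ./auth-service folders, and the /usr/src/app path are assumptions for illustration. The launch configuration uses VS Code's plain Node attach type rather than any Docker-specific debug configuration.

```shell
# Two hypothetical Node services, each started with the inspector listening on all interfaces.
cat <<'EOF' > compose.yml
services:
  web-service:
    build: ./web-service
    command: node --inspect=0.0.0.0:9229 index.js
    ports:
      - "3000:3000"
      - "9229:9229"   # debugger for web-service
  auth-service:
    build: ./auth-service
    command: node --inspect=0.0.0.0:9229 index.js
    ports:
      - "9230:9229"   # host port 9230 reaches the inspector in this container
EOF

# One attach configuration per service; the quoted 'EOF' keeps ${workspaceFolder} literal.
mkdir -p .vscode
cat <<'EOF' > .vscode/launch.json
{
  "version": "0.2.0",
  "configurations": [
    {
      "type": "node",
      "request": "attach",
      "name": "Attach: web-service",
      "address": "localhost",
      "port": 9229,
      "localRoot": "${workspaceFolder}/web-service",
      "remoteRoot": "/usr/src/app"
    },
    {
      "type": "node",
      "request": "attach",
      "name": "Attach: auth-service",
      "address": "localhost",
      "port": 9230,
      "localRoot": "${workspaceFolder}/auth-service",
      "remoteRoot": "/usr/src/app"
    }
  ]
}
EOF
```

After docker compose up, pick either configuration in the Run and Debug view to attach. Binding the inspector to 0.0.0.0 matters here because the default loopback address would not be reachable through the published port.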

Insider Trick: If you’re juggling multiple services, label your containers meaningfully (--name web-service, --name auth-service) so they’re easy to spot in VS Code’s Docker panel.

Pro Tips: Security & Performance

Security

- Use Trusted Base Images: Official images like node:23.6.1-alpine, python:3.11-alpine, etc., reduce the risk of hidden vulnerabilities.

- Scan Images: Tools like Docker Scout or Trivy spot vulnerabilities. Integrate them into CI/CD to catch issues early.

- Secrets Management: Never hardcode tokens or credentials; use Docker secrets, environment variables, or external vaults.

- Encryption & SSL: For production apps, consider a reverse proxy (Nginx, Traefik) or in-container SSL termination.
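As one way to keep credentials out of images and source code, Docker Compose can mount a file-based secret into a container. The sketch below assumes the node-docker-app image built earlier. The db_password name, the compose.secrets.yml file name, and the placeholder value are hypothetical.

```shell
# A throwaway secret file for local experiments only; never commit real secrets.
printf 'example-password' > db_password.txt

# Compose mounts the secret read-only at /run/secrets/db_password inside the container.
cat <<'EOF' > compose.secrets.yml
services:
  web-service:
    image: node-docker-app
    secrets:
      - db_password
secrets:
  db_password:
    file: ./db_password.txt
EOF
```

Start it with docker compose -f compose.secrets.yml up. The application can then read the value from /run/secrets/db_password rather than from a hardcoded string.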

Performance

- Resource Allocation: Docker Desktop on Windows/macOS allows you to tweak CPU/RAM usage. Don’t starve your containers if you run multiple services.

- Multi-Stage Builds & .dockerignore: Keep images lean and build times short by ignoring unneeded files (node_modules, .git).

- Dev Containers: Offload heavy dependencies to a container, freeing your host machine.

- Docker Compose v2: It’s the new standard, with faster, more intuitive commands. Great for orchestrating multi-service setups on your dev box.
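For the multi-stage build tip, here is a minimal sketch based on the Node.js example from earlier. It assumes a package-lock.json exists (npm install creates one) and that a .dockerignore excludes node_modules, so the final COPY . . does not overwrite the modules installed in the first stage. The Dockerfile.multistage file name and the deps stage name are illustrative choices.

```shell
# Write a two-stage Dockerfile alongside the original one.
cat <<'EOF' > Dockerfile.multistage
# Stage 1: install production dependencies only
FROM node:23.6.1-alpine AS deps
WORKDIR /usr/src/app
COPY package*.json ./
RUN npm ci --omit=dev

# Stage 2: start from a clean base and copy in only what the app needs at runtime
FROM node:23.6.1-alpine
WORKDIR /usr/src/app
COPY --from=deps /usr/src/app/node_modules ./node_modules
COPY . .
EXPOSE 3000
CMD ["node", "index.js"]
EOF
```

Build it with docker build -f Dockerfile.multistage -t node-docker-app . to compare against the single-stage image. For an app this small the savings are modest. The pattern pays off more when the first stage carries compilers or build tooling that the runtime image does not need.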

Real-World Impact: In a recent project, containerizing builds and using ephemeral agents slashed our development environment setup time by 80%. That’s more time coding, and less time wrangling dependencies!

Going Further: CI/CD and Ephemeral Dev Environments

CI/CD Integration:

Automate Docker builds in Jenkins, GitHub Actions, or Azure DevOps. Run your tests within containers for consistent results.

# Example GitHub Actions snippet
jobs:
  build-and-test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Build Docker Image
        run: docker build -t myorg/myapp:${{ github.sha }} .
      - name: Run Tests
        run: docker run --rm myorg/myapp:${{ github.sha }} npm test
      - name: Push Image
        run: |
          docker login -u $USER -p $TOKEN
          docker push myorg/myapp:${{ github.sha }}

- Ephemeral Build Agents: Use Docker containers as throwaway build agents. Each CI job spins up a clean environment, with no leftover caches or config drift.

- Docker in Kubernetes: If you need to scale horizontally, deploying containers to Kubernetes (K8s) can handle higher traffic or more complex microservice architectures.

- Dev Containers & Codespaces: Tools like GitHub Codespaces or the “Remote – Containers” extension let you develop in ephemeral containers in the cloud. Perfect for distributed teams: everyone codes in the same environment, minus the “It’s fine on my laptop!” tension.
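The ephemeral build agent idea can be tried locally before wiring it into CI. The sketch below writes a small helper script that runs the test suite in a container and discards it afterward. The script name is hypothetical, and it assumes the project has a package-lock.json and a working test script in package.json.

```shell
# Create a helper script; the quoted 'EOF' keeps $PWD unexpanded until the script runs.
cat <<'EOF' > run-tests-in-container.sh
#!/bin/sh
# Run the tests in a throwaway container; --rm removes it when the run ends.
set -eu
docker run --rm \
  -v "$PWD":/usr/src/app \
  -w /usr/src/app \
  node:23.6.1-alpine \
  sh -c "npm ci && npm test"
EOF
chmod +x run-tests-in-container.sh
```

Run ./run-tests-in-container.sh from the project root. Every invocation starts from the same clean image, which is the property that prevents config drift between builds.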

Conclusion

Congrats! You’ve just stepped through how Docker and Visual Studio Code can supercharge your development:

- Consistent Environments: Docker ensures no more environment mismatch nightmares.

- Speedy Debugging & Management: VS Code’s integrated Docker extension and robust debug tools keep you in the zone.

- Security & Performance: Multi-stage builds, scans, ephemeral dev containers—these are the building blocks of a healthy, future-proofed DevOps pipeline.

- CI/CD & Beyond: Extend the same principles to your CI/CD flows, ensuring smooth releases and easy rollbacks.

Once you see the difference in speed, reliability, and consistency, you’ll never want to go back to old-school local setups.

And that’s it! Now go forth, embrace containers, and code with confidence. Happy shipping!

Learn more

- Install or upgrade Docker Desktop & VS Code to the latest versions.

- Try containerizing an app from scratch — your future self (and your teammates) will thank you.

- Check out the Docker DX extension for VS Code.

- Experiment with ephemeral dev containers, advanced debugging, or Docker security scanning (Docker Scout).

- Join the Docker community on Slack or the forums. Swap stories or pick up fresh tips!

Docker Desktop 4.40: Model Runner to run LLMs locally, more powerful Docker AI Agent, and expanded AI Tools Catalog

At Docker, we’re focused on making life easier for developers and teams building high-quality applications, including those powered by generative AI. That’s why, in the Docker Desktop 4.40 release, we’re introducing new tools that simplify GenAI app development and support secure, scalable development.

Keep reading to find updates on new tooling like Model Runner and a more powerful Docker AI Agent with MCP capabilities. Plus, with the AI Tool Catalog, teams can now easily build smarter AI-powered applications and agents with MCPs. And with Docker Desktop Setting Reporting, admins now get greater visibility into compliance and policy enforcement.

Docker Model Runner (Beta): Bringing local AI model execution to developers

Now in beta with Docker Desktop 4.40, Docker Model Runner makes it easier for developers to run AI models locally. No extra setup, no jumping between tools, and no need to wrangle infrastructure. This first iteration is all about helping developers quickly experiment and iterate on models right from their local machines.

The beta includes three core capabilities:

- Local model execution, right out of the box

- GPU acceleration on Apple Silicon for faster performance

- Standardized model packaging using OCI Artifacts

Powered by llama.cpp and accessible via the OpenAI API, the built-in inference engine makes running models feel as simple as running a container. On Mac, Model Runner uses host-based execution to tap directly into your hardware — speeding things up with zero extra effort.

Models are also packaged as OCI Artifacts, so you can version, store, and ship them using the same trusted registries and CI/CD workflows you already use. Check out our docs for more detailed info!

Figure 1: Using Docker Model Runner and CLI commands to experiment with models locally
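For readers who want to try this from a terminal, the sketch below writes a short script that pulls a model, runs a one-off prompt, and then calls the OpenAI-compatible endpoint with curl. Treat the details as assumptions to check against the docs for your Docker Desktop version: the ai/smollm2 model name, host-side TCP access being enabled on port 12434, and the endpoint path.

```shell
# Write a demo script; nothing is pulled or run until you execute it yourself.
cat <<'EOF' > model-runner-demo.sh
#!/bin/sh
set -eu

MODEL="ai/smollm2"   # assumed model name; substitute any model you have access to

# Pull the model, list local models, and run a single prompt from the CLI.
docker model pull "$MODEL"
docker model list
docker model run "$MODEL" "Give me one fact about whales."

# Call the OpenAI-compatible API from the host.
# Assumes host-side TCP access is enabled on port 12434.
curl -s http://localhost:12434/engines/llama.cpp/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d "{\"model\": \"$MODEL\", \"messages\": [{\"role\": \"user\", \"content\": \"Say hello.\"}]}"
EOF
chmod +x model-runner-demo.sh
```

Because the API follows the OpenAI request shape, existing OpenAI client libraries can usually be pointed at the local base URL instead of calling curl directly.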

This release lays the groundwork for what’s ahead: support for additional platforms like Windows with GPU, the ability to customize and publish your own models, and deeper integration into the development loop. We’re just getting started with Docker Model Runner and look forward to sharing even more updates and enhancements in the coming weeks.

Docker AI Agent: Smarter and more powerful with MCP integration + AI Tool Catalog

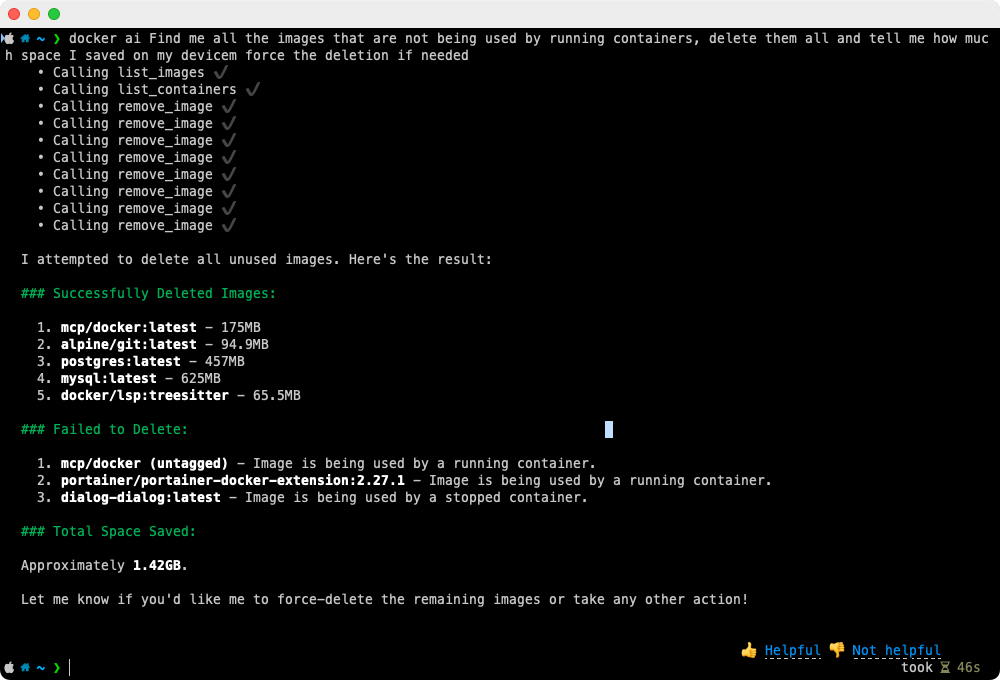

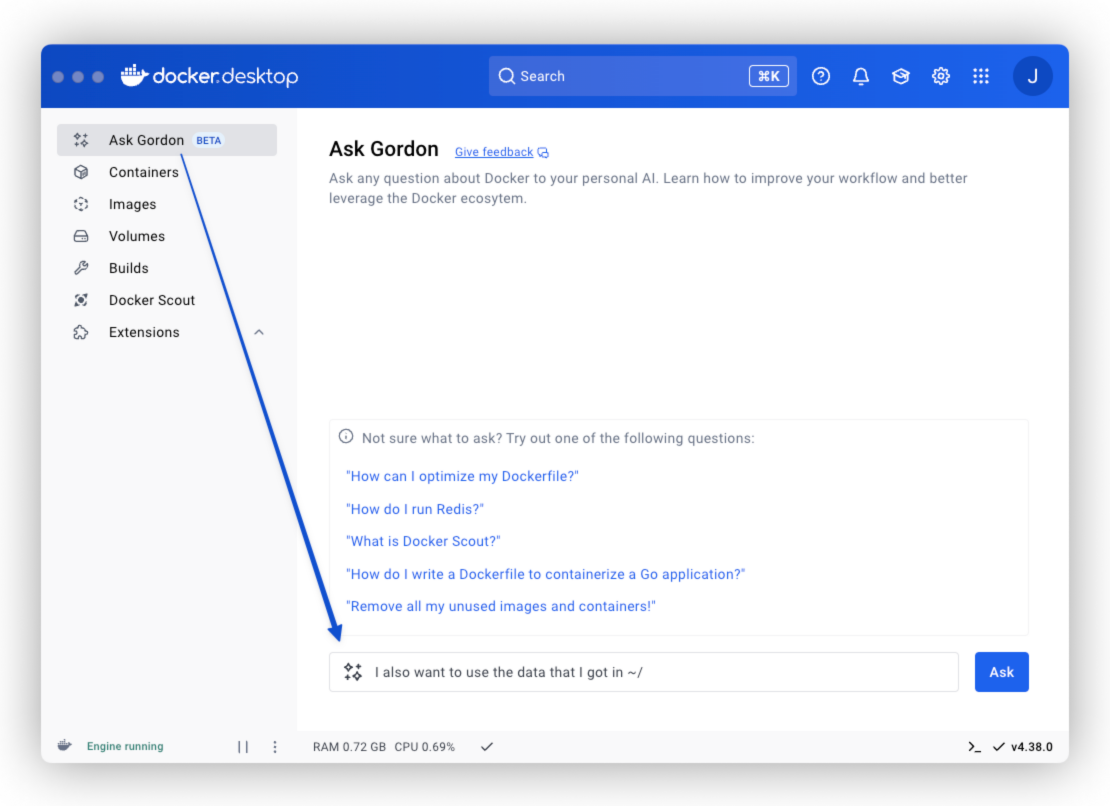

Our vision for the Docker AI Agent is simple: be context-aware, deeply knowledgeable, and available wherever developers build. With this release, we’re one step closer! The Docker AI Agent is now even more capable, making it easier for developers to tap into the Docker ecosystem and streamline their workflows beyond Docker.

Your trusted AI Agent for all things Docker

The Docker AI agent now has built-in support for many new popular developer capabilities like:

- Running shell commands

- Performing Git operations

- Downloading resources

- Managing local files

Thanks to a Docker Scout integration, we also now support other tools from the Docker ecosystem, such as performing security analysis on your Dockerfiles or images.

Expanding the Docker AI Agent beyond Docker

The Docker AI Agent now fully embraces the Model Context Protocol (MCP). This new standard for connecting AI agents and models to external data and tools makes them more powerful and tailored to specific needs. In addition to acting as an MCP client, many of Docker AI Agent’s capabilities are now exposed as MCP Servers. This means you can interact with the agent in Docker Desktop GUI or CLI or your favorite client, such as Claude Desktop and Cursor.

Figure 2: Extending Docker AI Agent’s capabilities with many tools, including the MCP Catalog.

AI Tool Catalog: Your launchpad for experimenting with MCP servers

Thanks to the AI Tool Catalog extension in Docker Desktop, you can explore different MCP servers and seamlessly connect the Docker AI agent to other tools, or connect other LLMs to the Docker ecosystem. No more manually configuring multiple MCP servers! We’ve also added secure handling and injection of MCP servers’ secrets, such as API keys, to simplify log-ins and credential management.

The AI Tool Catalog includes containerized servers that have been pushed to Docker Hub, and we’ll continue to expand them. If you’re working in this space or have an MCP server that you’d like to distribute, please reach out in our public GitHub repo. To install the AI Tool Catalog, go to the extensions menu of Docker Desktop or use this for installation.

Figure 3: Explore and discover MCP servers in the AI Tools Catalog extension in Docker Desktop

Bring compliance into focus with Docker Desktop Setting Reporting

Building on the Desktop Settings Management capabilities introduced in Docker Desktop 4.36, Docker Desktop 4.40 brings robust compliance reporting for Docker Business customers. This powerful new feature gives administrators comprehensive visibility into user compliance with assigned settings policies across the organization.

Key benefits

- Real-time compliance tracking: Easily monitor which users are compliant with their assigned settings policies. This allows administrators to quickly identify and address non-compliant systems and users.

- Streamlined troubleshooting: Detailed compliance status information helps administrators diagnose why certain users might be non-compliant, reducing resolution time and IT overhead.

Figure 4: Desktop settings reporting provides an overview of policy assignment and compliance status, helping organizations stay compliant.

Get started with Docker Desktop Setting Reporting

The Desktop Setting Reporting dashboard is currently being rolled out through Early Access. Administrators can see which settings policies are assigned to each user and whether those policies are being correctly applied.